Today’s data-driven world requires organisations worldwide to effectively manage massive amounts of information. Technologies like Big Data and Distributed Computing are essential for processing, analysing, and drawing meaningful conclusions from massive datasets.

If you want to enter the field of data science, consider enrolling in a reputable data science course in India to build the skills and knowledge needed to succeed in this fast-paced industry.

Let’s explore the exciting world of distributed computing and big data!

Understanding the Challenges of Traditional Data Processing

Volume, Velocity, Variety, and Veracity of Big Data

- Volume: Traditional data includes small to medium-sized datasets, easily manageable with conventional processing methods. In contrast, big data involves vast datasets requiring specialised technologies due to their sheer size.

- Variety: Traditional data is structured and organised in tables, columns, and rows. In contrast, big data can be structured, unstructured, or semi-structured, incorporating various data types like text, images, videos, and more.

- Velocity: Traditional data is static and updated periodically. On the other hand, big data is dynamic and updated in real-time or near real-time, requiring efficient and continuous processing.

- Veracity: Veracity in Big Data refers to data accuracy and reliability. Ensuring trustworthy data is crucial for making informed decisions and avoiding erroneous insights.

A career in data science requires proficiency in handling both traditional and big data, employing cutting-edge tools and techniques to extract meaningful insights and support informed decision-making.

Scalability and Performance Issues

In data science training, understanding the scalability and performance limits of traditional systems is vital. Traditional methods struggle to handle large data volumes effectively, and their performance deteriorates as data size increases.

Learning modern Big Data technologies and distributed computing frameworks is essential to overcome these challenges.

Cost of Data Storage and Processing

Data storage and processing costs depend on data volume, chosen technology, cloud provider (if used), and data management needs. Cloud solutions offer flexibility with pay-as-you-go models, while traditional on-premises setups may involve upfront expenses.

What is Distributed Computing?

Definition and Concepts

Distributed computing is a model that distributes software components across multiple computers or nodes. Despite their dispersed locations, these components operate cohesively as a unified system to enhance efficiency and performance.

By leveraging distributed computing, performance, resilience, and scalability can be significantly improved. Consequently, it has become a prevalent computing model in the design of databases and applications.

Aspiring data analysts can benefit from data analytics certification courses that delve into this essential topic, equipping them with valuable skills for handling large-scale data processing and analysis in real-world scenarios.

Distributed Systems Architecture

The architectural model in distributed computing refers to the overall system design and structure, organising components for interactions and desired functionalities.

It offers an overview of development, preparation, and operations, crucial for cost-efficient usage and improved scalability.

Common architectural models include client-server, peer-to-peer, layered, and microservices.

Distributed Data Storage and Processing

For developers, a distributed data store is where application data, metrics, logs, and similar records are managed. Examples include MongoDB, Amazon S3, and Google Cloud Spanner.

Distributed data stores come as cloud-managed services or self-deployed products. You can even build your own, either from scratch or on existing data stores. Flexibility in data storage and retrieval is essential for developers.

Distributed processing divides complex tasks among multiple machines or nodes and combines their partial results into a single output. It’s widely used in cloud computing, blockchain farms, massively multiplayer online games (MMOs), and post-production software for efficient rendering and coordination.
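
The divide-and-combine idea can be sketched on a single machine. The following is a minimal illustration, assuming a thread pool stands in for separate nodes. The helper names `split_into_chunks` and `distributed_sum_of_squares` are hypothetical, and a real cluster would add networking, scheduling, and failure handling.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(items, num_chunks):
    """Split a list into roughly equal chunks, one per worker."""
    size = max(1, -(-len(items) // num_chunks))  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def sum_of_squares(chunk):
    """The work each simulated node performs on its own chunk."""
    return sum(x * x for x in chunk)


def distributed_sum_of_squares(items, num_workers=4):
    """Fan the chunks out to workers, then combine the partial results."""
    chunks = split_into_chunks(items, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)
```

Calling `distributed_sum_of_squares(list(range(10)))` gives the same answer as a plain loop over the whole list. The point of the sketch is only that each chunk can be processed independently before the partial results are merged.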

Distributed File Systems (e.g., Hadoop Distributed File System – HDFS)

HDFS ensures reliable storage of massive data sets and high-bandwidth streaming to user applications. Thousands of servers in large clusters handle storage and computation, enabling scalable growth and cost-effectiveness.

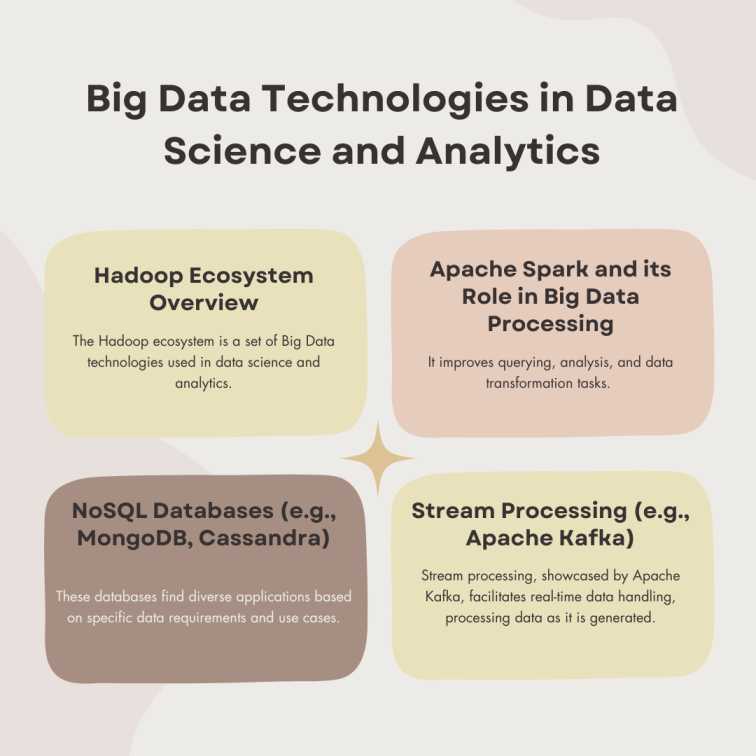

Big Data Technologies in Data Science and Analytics

Hadoop Ecosystem Overview

The Hadoop ecosystem is a set of Big Data technologies used in data science and analytics. It includes components like HDFS for distributed storage, MapReduce and Spark for data processing, Hive and Pig for querying, and HBase for real-time access.

Tools like Sqoop, Flume, Kafka, and Oozie enhance data handling and analysis capabilities. Together, they enable scalable and efficient data processing and analysis.

Apache Spark and its Role in Big Data Processing

Apache Spark, a versatile data handling and processing engine, empowers data scientists in various scenarios. It improves querying, analysis, and data transformation tasks.

Spark excels at interactive queries on large datasets, processing streaming data from sensors, and performing machine learning tasks.

Typical Apache Spark use cases in a data science course include:

- Real-time stream processing: Spark enables real-time analysis of data streams, such as identifying fraudulent transactions in financial data.

- Machine learning: Spark’s in-memory processing speeds up the repeated passes over data that training involves, making it well suited to training ML algorithms.

- Interactive analytics: Data scientists can explore data interactively by asking questions, fostering quick and responsive data analysis.

- Data integration: Spark is increasingly used in ETL processes to pull, clean, and standardise data from diverse sources, reducing time and cost.

Aspiring data scientists benefit from learning Apache Spark in data science courses to leverage its powerful capabilities for diverse data-related tasks.

NoSQL Databases (e.g., MongoDB, Cassandra)

MongoDB and Cassandra are NoSQL databases tailored for extensive data storage and processing.

MongoDB’s document-oriented approach allows flexibility with JSON-like documents, while Cassandra’s decentralised nature ensures high availability and scalability.

These databases find diverse applications based on specific data requirements and use cases.
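
To make the document-oriented idea concrete, here is a small sketch in plain Python. It does not use the MongoDB driver or its query API: the `users` collection, its fields, and the `find` helper are all hypothetical and only illustrate that JSON-like documents in one collection need not share the same fields.

```python
import json

# Hypothetical "users" collection: documents need not share the same fields.
users = [
    {"_id": 1, "name": "Asha", "email": "asha@example.com"},
    {"_id": 2, "name": "Ravi", "tags": ["analyst", "python"]},
    {"_id": 3, "name": "Meera", "address": {"city": "Pune"}, "tags": ["engineer"]},
]


def find(collection, **criteria):
    """Return documents whose top-level fields equal every given criterion."""
    return [
        doc for doc in collection
        if all(doc.get(field) == value for field, value in criteria.items())
    ]


def to_json(doc):
    """Serialise a document to a JSON string."""
    return json.dumps(doc, sort_keys=True)
```

A relational table would need nullable columns or extra tables to hold the optional `tags` and nested `address` fields, whereas a document store keeps them inside each record.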

Stream Processing (e.g., Apache Kafka)

Stream processing, showcased by Apache Kafka, facilitates real-time data handling, processing data as it is generated. It empowers real-time analytics, event-driven apps, and immediate responses to streaming data.

With high throughput and fault tolerance, Apache Kafka is a widely used distributed streaming platform for diverse real-time data applications and use cases.
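
Processing data as it is generated often means aggregating events over short time windows. The sketch below is a plain-Python illustration of a tumbling-window count and does not use Kafka or any Kafka client. It assumes events arrive in timestamp order as `(timestamp_seconds, key)` pairs, and the function name `tumbling_window_counts` is hypothetical.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds):
    """Yield (window_start, counts) as each time window closes.

    `events` is an iterable of (timestamp_seconds, key) pairs, assumed to
    arrive in timestamp order, as they would from a single ordered stream.
    """
    current_start = None
    counts = Counter()
    for timestamp, key in events:
        window_start = timestamp - (timestamp % window_seconds)
        if current_start is None:
            current_start = window_start
        if window_start != current_start:
            # The previous window is complete: emit it and start a new one.
            yield current_start, dict(counts)
            current_start = window_start
            counts = Counter()
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)
```

Because the function is a generator, results for a window become available as soon as the first event of the next window arrives, rather than after the whole input has been read.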

Extract, Transform, Load (ETL) for Big Data

Data Ingestion from Various Sources

Data ingestion involves moving data from various sources into a target system for storage and analysis. In real-world scenarios, businesses face challenges because the data is spread across multiple business units, diverse applications, file types, and systems.

Data Transformation and Cleansing

Data transformation involves converting data from one format to another, often from the format of the source system to the desired format. It is crucial for various data integration and management tasks, such as wrangling and warehousing.

Methods for data transformation include integration, filtering, scrubbing, discretisation, duplicate removal, attribute construction, and normalisation.

Data cleansing, also called data cleaning, identifies and corrects corrupt, incomplete, improperly formatted, or duplicated data within a dataset.
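
A few of the methods listed above (scrubbing, filtering, duplicate removal, and normalisation) can be sketched with the standard library alone. This is a minimal example under stated assumptions: the record fields `email` and `age` and the validation rules are hypothetical, and real pipelines would apply rules specific to their own data.

```python
def clean_records(records):
    """Scrub, filter, and de-duplicate raw customer records.

    The field names ("email", "age") are hypothetical examples.
    """
    seen_emails = set()
    cleaned = []
    for record in records:
        email = (record.get("email") or "").strip().lower()  # scrubbing
        age = record.get("age")
        if not email or "@" not in email:
            continue  # filtering: drop rows without a usable email
        if not isinstance(age, (int, float)) or age < 0:
            continue  # filtering: drop rows with an invalid age
        if email in seen_emails:
            continue  # duplicate removal
        seen_emails.add(email)
        cleaned.append({"email": email, "age": age})
    return cleaned


def min_max_normalise(values):
    """Rescale numeric values to the range [0, 1]."""
    low, high = min(values), max(values)
    if high == low:
        return [0.0 for _ in values]
    return [(v - low) / (high - low) for v in values]
```

Here the first record seen for an email address wins, which is one possible de-duplication policy. Keeping the most recent record instead would be an equally reasonable choice.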

Data Loading into Distributed Systems

Data loading into distributed systems involves transferring and storing data from various sources in a distributed computing environment. It includes extraction, transformation, partitioning, and data loading for efficient processing and storage on interconnected nodes.
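
The partitioning step can be illustrated with hash partitioning, one common way to decide which node stores a record. This sketch is an assumption about how such a step might look, not a description of any particular system: the function names are hypothetical and the "nodes" are just lists in memory.

```python
import hashlib
from collections import defaultdict


def partition_for(key, num_nodes):
    """Map a record key to a node index with a stable hash."""
    digest = hashlib.sha256(str(key).encode("utf-8")).hexdigest()
    return int(digest, 16) % num_nodes


def load_into_nodes(records, key_field, num_nodes):
    """Group records by the node that would store them."""
    nodes = defaultdict(list)
    for record in records:
        nodes[partition_for(record[key_field], num_nodes)].append(record)
    return dict(nodes)
```

A cryptographic hash is used instead of Python's built-in `hash()` because string hashes from the built-in are randomised per process by default, so they would not route the same key to the same node across runs.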

Data Pipelines and Workflow Orchestration

Data pipelines and workflow orchestration involve designing and managing interconnected data processing steps to move data smoothly from source to destination. Workflow orchestration tools schedule and execute these pipelines efficiently, ensuring seamless data flow throughout the entire process.
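
At its core, an orchestrator runs each step only after the steps it depends on have finished. The sketch below shows that idea using the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The `run_pipeline` helper and the task names are hypothetical, and production tools add scheduling, retries, and monitoring on top.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def run_pipeline(tasks, dependencies):
    """Run each task after all of the tasks it depends on.

    `tasks` maps a task name to a zero-argument callable.
    `dependencies` maps a task name to the set of names it depends on.
    Returns the order in which tasks ran.
    """
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        tasks[name]()
    return order
```

For example, with `dependencies = {"transform": {"extract"}, "load": {"transform"}}`, the extract step runs first, then transform, then load.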

Big Data Analytics and Insights

Batch Processing vs. Real-Time Processing

| Batch Data Processing | Real-Time Data Processing |
| --- | --- |
| No specific response time | Predictable response time |
| Completion time depends on system speed and data volume | Output provided accurately and timely |
| Collects all data before processing | Simple and efficient procedure |
| Data processing involves multiple stages | Two main processing stages: input to output |

Real-time data processing is favoured when predictable response times and timely outputs matter, whereas batch processing suits workloads that can wait until all the data has been collected.

MapReduce Paradigm

The MapReduce paradigm processes large data sets in a massively parallel manner. It aims to simplify data analysis and transformation, freeing developers to focus on algorithms rather than data management. The model facilitates the straightforward implementation of data-parallel algorithms.

In the MapReduce model, two phases, namely map and reduce, are executed through functions specified by programmers. These functions take key/value pairs as input and produce key/value pairs as output. Keys and values can be simple data types or complex ones, such as a record of a commercial transaction.
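
The two phases can be sketched with the classic word-count example. This is a single-process illustration in plain Python, not Hadoop's API: the function names are hypothetical, and the `shuffle` step stands in for the grouping that a framework performs between the map and reduce phases.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Group all emitted values by key, as a framework would between phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(documents):
    """Run map over every document, group by key, then reduce each group."""
    pairs = [pair for doc in documents for pair in map_phase(doc)]
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())
```

Because `map_phase` looks at one document at a time and `reduce_phase` at one key at a time, both can run in parallel across many machines. That is the property the paradigm relies on.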

Data Analysis with Apache Spark

Data analysis with Apache Spark involves using the distributed computing framework to process large-scale datasets. It includes data ingestion, transformation, and analysis using Spark’s APIs.

Spark’s in-memory processing and parallel computing capabilities make it efficient for various analyses such as machine learning and real-time stream processing.

Data Exploration and Visualisation

Data exploration involves understanding dataset characteristics through summary statistics and visualisations like histograms and scatter plots.

Data visualisation presents data visually using charts and graphs, aiding in data comprehension and effectively communicating insights.
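
As a small illustration of exploration, the sketch below computes summary statistics and the bin counts behind a histogram using only the standard library. The function names are hypothetical. In practice a plotting or dataframe library would usually do this work, and very large datasets would be sampled or aggregated first.

```python
import statistics


def summarise(values):
    """Return basic summary statistics for a list of numbers."""
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values) if len(values) > 1 else 0.0,
        "min": min(values),
        "max": max(values),
    }


def histogram(values, num_bins):
    """Count how many values fall into each of `num_bins` equal-width bins."""
    low, high = min(values), max(values)
    width = (high - low) / num_bins or 1  # avoid zero width if all values match
    counts = [0] * num_bins
    for v in values:
        # The maximum value would land one past the end, so clamp it.
        index = min(int((v - low) / width), num_bins - 1)
        counts[index] += 1
    return counts
```

The bin counts returned by `histogram` are exactly what a bar chart of the distribution would display.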

Utilising Big Data for Machine Learning and Predictive Analytics

Big Data enhances machine learning and predictive analytics by providing extensive, diverse datasets for more accurate models and deeper insights.

Large-Scale Data for Model Training

Big Data enables training machine learning models on vast datasets, improving model performance and generalisation.

Scalable Machine Learning Algorithms

Scalable machine learning algorithms handle Big Data efficiently by parallelising computation across nodes, which speeds up training.

Real-Time Predictions with Big Data

Big Data technologies enable real-time predictions, allowing immediate responses and decision-making based on streaming data.

Personalisation and Recommendation Systems

Big Data supports personalised user experiences and recommendation systems by analysing vast amounts of data to provide tailored suggestions and content.

Big Data in Natural Language Processing (NLP) and Text Analytics

Big Data enhances NLP and text analytics by handling large volumes of textual data and enabling more comprehensive language processing.

Handling Large Textual Data

Big Data technologies manage large textual datasets efficiently, ensuring scalability and high-performance processing.

Distributed Text Processing Techniques

Distributed computing techniques process text data across multiple nodes, enabling parallel processing and faster analysis.

Sentiment Analysis at Scale

Big Data enables sentiment analysis on vast amounts of text data, providing insights into public opinion and customer feedback.

Topic Modeling and Text Clustering

Big Data facilitates topic modelling and clustering text data, enabling the discovery of hidden patterns and categorising documents based on their content.

Big Data for Time Series Analysis and Forecasting

Big Data plays a crucial role in time series analysis and forecasting by handling vast volumes of time-stamped data. Time series data represents observations recorded over time, such as stock prices, sensor readings, website traffic, and weather data.

Big Data technologies enable efficient storage, processing, and analysis of time series data at scale.

Time Series Data in Distributed Systems

In distributed systems, time series data is stored and managed across multiple nodes or servers rather than centralised on a single machine. This approach efficiently handles large-scale time-stamped data, providing scalability and fault tolerance.

Distributed Time Series Analysis Techniques

Distributed time series analysis techniques involve parallel processing capabilities in distributed systems to analyse time series data concurrently. It allows for faster and more comprehensive analysis of time-stamped data, including tasks like trend detection, seasonality identification, and anomaly detection.
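
Two of the tasks mentioned above, trend detection and anomaly detection, can be sketched for a single series as follows. This is a simplified illustration with hypothetical function names: it uses a trailing moving average for the trend and a z-score rule for anomalies. In a distributed setting, a function like this might be applied independently to each partition, for example one series per sensor.

```python
import statistics


def moving_average(series, window):
    """Trailing moving average; output is shorter than input by window - 1."""
    return [
        sum(series[i - window + 1:i + 1]) / window
        for i in range(window - 1, len(series))
    ]


def zscore_anomalies(series, threshold=3.0):
    """Return indices of points more than `threshold` standard deviations from the mean."""
    mean = statistics.mean(series)
    stdev = statistics.pstdev(series)
    if stdev == 0:
        return []  # a perfectly flat series has no outliers
    return [i for i, x in enumerate(series) if abs(x - mean) / stdev > threshold]
```

The threshold of 3 standard deviations is a common rule of thumb rather than a fixed standard, and it should be tuned to the data.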

Real-Time Forecasting with Big Data

Big Data technologies enable real-time forecasting by processing streaming time series data as it arrives. It facilitates immediate predictions and insights, allowing businesses to quickly respond to changing trends and make real-time data-driven decisions.
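
One simple way to forecast from a stream is to update the estimate with each new observation instead of refitting on the full history. The sketch below uses simple exponential smoothing as an illustrative technique. The class name `OnlineForecaster` and the default `alpha` are assumptions, and production systems may use far richer models.

```python
class OnlineForecaster:
    """Simple exponential smoothing, updated one observation at a time."""

    def __init__(self, alpha=0.5):
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.level = None  # current smoothed estimate

    def update(self, value):
        """Fold a new observation into the estimate and return the forecast."""
        if self.level is None:
            self.level = float(value)
        else:
            self.level = self.alpha * value + (1 - self.alpha) * self.level
        return self.level
```

Each call to `update` needs only the previous estimate, so the memory used stays constant however long the stream runs. A larger `alpha` reacts faster to recent changes, while a smaller one smooths out noise.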

Big Data and Business Intelligence (BI)

Distributed BI Platforms and Tools

Distributed BI platforms and tools are designed to operate on distributed computing infrastructures, enabling efficient processing and analysis of large-scale datasets.

These platforms leverage distributed processing frameworks like Apache Spark to handle big data workloads and support real-time analytics.

Big Data Visualisation

Big Data visualisation focuses on representing large and complex datasets in a visually appealing and understandable manner. Visualisation tools like Tableau, Power BI, and D3.js enable businesses to explore and present insights from massive datasets.

Dashboards and Real-Time Reporting

Dashboards and real-time reporting provide dynamic, interactive data views, allowing users to monitor critical metrics and KPIs in real-time.

Data Security and Privacy in Distributed Systems

Data security and privacy in distributed systems require encryption, access control, data masking, and monitoring. Firewalls, network security, and secure data exchange protocols protect data in transit.

Encryption and Data Protection

Encryption transforms sensitive data into unreadable ciphertext, safeguarding access with decryption keys. This vital layer protects against unauthorised entry, ensuring data confidentiality and integrity during transit and storage.

Role-Based Access Control (RBAC)

RBAC is an access control system that links users to defined roles. Each role has specific permissions, restricting data access and actions based on users’ assigned roles.
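
The link between users, roles, and permissions can be sketched with two lookup tables. The role names, permission strings, and users below are hypothetical and serve only to illustrate the check. Real systems usually store these mappings in a directory or policy service.

```python
# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "analyst": {"read:reports"},
    "engineer": {"read:reports", "write:pipelines"},
    "admin": {"read:reports", "write:pipelines", "manage:users"},
}

# Hypothetical user-to-role assignments.
USER_ROLES = {
    "asha": {"analyst"},
    "ravi": {"engineer", "analyst"},
}


def is_allowed(user, permission):
    """A user may act if any of their assigned roles grants the permission."""
    return any(
        permission in ROLE_PERMISSIONS.get(role, set())
        for role in USER_ROLES.get(user, set())
    )
```

Unknown users and unknown roles fall through to an empty set, so access is denied by default.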

Data Anonymisation Techniques

Data anonymisation involves modifying or removing personally identifiable information (PII) from datasets to protect individuals’ privacy. Anonymisation is crucial for ensuring compliance with data protection regulations and safeguarding user privacy.
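
Two common building blocks are sketched below: replacing an identifier with a keyed hash and masking part of an email address. The field names and helper functions are hypothetical. Note that keyed hashing is generally regarded as pseudonymisation rather than full anonymisation, because anyone holding the key can link records back to the original identifier.

```python
import hashlib
import hmac


def pseudonymise(value, secret_key):
    """Replace an identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email):
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}{'*' * max(len(local) - 1, 0)}@{domain}"


def anonymise_record(record, secret_key):
    """Return a copy with direct identifiers transformed.

    The field names are hypothetical examples.
    """
    return {
        "user_id": pseudonymise(record["user_id"], secret_key),
        "email": mask_email(record["email"]),
        "purchase_total": record["purchase_total"],  # non-identifying field kept
    }
```

The same `user_id` always maps to the same token under a given key, so records can still be joined for analysis without exposing the raw identifier.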

GDPR Compliance in Big Data Environments

GDPR compliance in Big Data environments is crucial to avoid penalties for accidental data disclosure. Businesses must adopt methods to identify privacy threats during data manipulation, ensuring data protection and building trust.

GDPR compliance measures include:

- Obtaining consent.

- Implementing robust data protection measures.

- Enabling individuals’ rights, such as data access and erasure.

Cloud Computing and Big Data

Cloud computing and Big Data are closely linked, as the cloud offers essential infrastructure and resources for managing vast datasets. With flexibility and cost-effectiveness, cloud platforms excel at handling the demanding needs of Big Data workloads.

Cloud-Based Big Data Solutions

Numerous sectors, such as banking, healthcare, media, entertainment, education, and manufacturing, have achieved impressive outcomes with their big data migration to the cloud.

Cloud-powered big data solutions provide scalability, cost-effectiveness, data agility, flexibility, security, innovation, and resilience, fueling business advancement and achievement.

Cost Benefits of Cloud Infrastructure

Cloud infrastructure offers cost benefits as organisations can pay for resources on demand, allowing them to scale up or down as needed. It eliminates the need for substantial upfront capital expenditures on hardware and data centres.

Cloud Security Considerations

Cloud security is a critical aspect when dealing with sensitive data. Cloud providers implement robust security measures, including data encryption, access controls, and compliance certifications.

Hybrid Cloud Approaches in Data Science and Analytics

Forward-thinking companies adopt a cloud-first approach, prioritising a unified cloud data analytics platform that integrates data lakes, warehouses, and diverse data sources.

Embracing cloud and on-premises solutions in a cohesive ecosystem offers flexibility and maximises data access.

Case Studies and Real-World Applications

Big Data Success Stories in Data Science and Analytics

Netflix: Netflix uses Big Data analytics to analyse user behaviour and preferences, providing recommendations for personalised content. Their recommendation algorithm helps increase user engagement and retention.

Uber: Uber uses Big Data to optimise ride routes, predict demand, and set dynamic pricing. Real-time data analysis enables efficient ride allocation and reduces wait times for customers.

Use Cases for Distributed Computing in Various Industries

Amazon

In 2001, Amazon made a significant transition from its monolithic architecture to Amazon Web Services (AWS), establishing itself as a pioneer in adopting microservices.

This strategic move enabled Amazon to embrace a “continuous development” approach, facilitating incremental enhancements to its website’s functionality.

Consequently, new features, which previously required weeks for deployment, were swiftly made available to customers within days or even hours.

SoundCloud

In 2012, SoundCloud shifted to a distributed architecture, empowering teams to build Scala, Clojure, and JRuby apps. This move from a monolithic Rails system allowed the running of numerous services, driving innovation.

The microservices strategy provided autonomy, breaking the backend into focused, decoupled services. Adopting a backend-for-frontend pattern overcame challenges with the microservice API infrastructure.

Lessons Learned and Best Practices

Big Data and Distributed Computing are essential for processing and analysing massive datasets. They offer scalability, performance, and real-time capabilities. Embracing modern technologies and understanding data challenges are crucial to success.

Data security, privacy, and hybrid cloud solutions are essential considerations. Successful use cases like Netflix and Uber provide valuable insights for organisations.

Conclusion

Data science and analytics have undergone a paradigm shift as a result of the convergence of Big Data and Distributed Computing. By overcoming traditional limits, these cutting-edge technologies have fundamentally altered how we process and evaluate enormous datasets.

The Postgraduate Programme in Data Science and Analytics at Imarticus Learning is an excellent option for aspiring data professionals looking for a data scientist course with placement assistance.

Graduates can handle real-world data difficulties thanks to practical experience and industry-focused projects. The data science online course with job assistance offered by Imarticus Learning presents a fantastic chance for a fulfilling and prosperous career in data analytics at a time when the need for qualified data scientists and analysts is on the rise.

Visit Imarticus Learning for more information on your preferred data analyst course!

These are some of the promotional activities that companies carry out using big data. Big data influences decision-making about a company’s promotional activities.

Enterprises nowadays prefer employees with experience of working on cloud platforms such as Amazon Web Services. Sound knowledge of data warehousing and data modelling is also highly valued. Pipeline-centric data engineers work closely with data scientists to make use of the collected data. Database-centric data engineers manage data flow and database analytics.
