Linear regression is a statistical technique for analyzing data. It is used to determine the nature and strength of the linear relationship between one or more independent variables and a dependent variable.

The two kinds of linear regression are:

- Simple linear regression

- Multiple linear regression

Both use a single dependent variable. When the dependent variable is predicted from a single independent variable, the method is called simple linear regression. When it is predicted from two or more independent variables, the method is called multiple linear regression.
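To make the simple case concrete, here is a minimal sketch of a least-squares fit with one independent variable. The function name `fit_simple` and the hours/scores data are invented for illustration (the data is constructed so that score = 10 + 5 * hours exactly); a real analysis would normally use a statistics library.

```python
def fit_simple(x, y):
    """Return (intercept, slope) of the least-squares line y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-deviations and sum of squared deviations of x
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical data: hours studied (independent) and test score (dependent)
hours = [1, 2, 3, 4, 5]
scores = [15, 20, 25, 30, 35]

intercept, slope = fit_simple(hours, scores)
print(f"score = {intercept:.1f} + {slope:.1f} * hours")
```

Multiple linear regression follows the same least-squares idea, but estimates one coefficient per independent variable.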

Data Considerations in Linear Regression:

Data must meet certain requirements to be suitable for linear regression. The dependent variable is almost always measured on a continuous scale (e.g., test scores from 1 to 50). An independent variable can be either continuous or categorical (e.g., girls vs. boys).
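One common way to use a two-level categorical variable in a regression is to code it as 0 or 1 (dummy coding). The sketch below assumes that approach; the scores, the group assignment, and the helper name `fit_line` are all invented for illustration. With a 0/1 predictor, the intercept is the mean of the reference group and the slope is the difference between the two group means.

```python
def fit_line(x, y):
    """Return (intercept, slope) of the least-squares line through the points."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Hypothetical test scores (out of 50) for two groups
groups = ["boy", "boy", "boy", "girl", "girl", "girl"]
scores = [30, 34, 32, 38, 36, 40]

# Dummy coding: "boy" is the reference level (0), "girl" is coded 1
is_girl = [1 if g == "girl" else 0 for g in groups]

intercept, slope = fit_line(is_girl, scores)
print(f"mean score of reference group: {intercept:.1f}")
print(f"difference between group means: {slope:.1f}")
```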

Linear Regression and Correlation:

Regression analysis is normally used to make predictions. Correlation and simple linear regression are alike in that both measure the strength of the linear relationship between two variables. Linear regression, however, designates one variable as dependent and the others as independent, whereas correlation makes no such distinction. Furthermore, linear regression always predicts the dependent variable from the independent variables, whether there is one or many.
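The difference in how the two methods treat the variables can be sketched as follows. The data and the function names `pearson_r` and `slope` are invented for illustration. Swapping the two variables leaves the correlation coefficient unchanged, but changes the regression slope, because regression depends on which variable is treated as dependent.

```python
from math import sqrt


def _deviation_sums(x, y):
    """Return (sxy, sxx, syy): sums of cross and squared deviations from the means."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    return sxy, sxx, syy


def pearson_r(x, y):
    """Pearson correlation coefficient; symmetric in x and y."""
    sxy, sxx, syy = _deviation_sums(x, y)
    return sxy / sqrt(sxx * syy)


def slope(x, y):
    """Least-squares slope when y (dependent) is regressed on x (independent)."""
    sxy, sxx, _ = _deviation_sums(x, y)
    return sxy / sxx


# Hypothetical paired observations
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

print(pearson_r(x, y), pearson_r(y, x))  # same value both ways
print(slope(x, y), slope(y, x))          # different slopes
```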

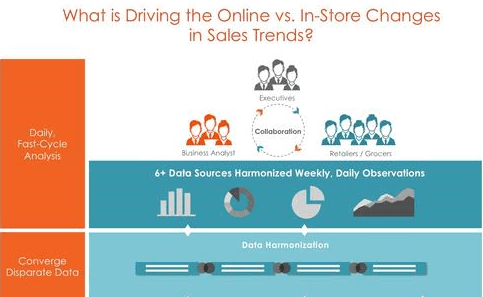

Here are some of the uses of linear regression.

1. Define relationships:

Regression analysis can model relationships that are tangled and complex. For example, it can:

- Model multiple independent variables.

- Analyze categorical as well as continuous variables.

- Model curvature with polynomial terms.

- Assess interaction effects, to determine whether the effect of one independent variable depends on the value of another.
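The items in this list all come down to which columns are placed in the model. As a rough sketch, assuming one continuous variable `x` and one two-level category with hypothetical levels "A" and "B", a single row of regressors could be built like this (the function name `design_row` is also an assumption for illustration):

```python
def design_row(x, group):
    """Build one row of regressors from a continuous x and a two-level category."""
    is_b = 1.0 if group == "B" else 0.0  # dummy coding; "A" is the reference level
    return [
        1.0,        # intercept
        x,          # continuous variable
        x ** 2,     # polynomial term to model curvature
        is_b,       # categorical variable
        x * is_b,   # interaction: lets the effect of x differ between groups
    ]


print(design_row(2.0, "A"))
print(design_row(2.0, "B"))
```

The model stays linear in its coefficients even though it contains a squared term and an interaction term, which is why linear regression can still fit it.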

2. Control the variables:

Regression can statistically control every variable in the model. To interpret a regression analysis, the role of each variable needs to be isolated from the roles of the others, which means reducing the effect of confounding variables. Regression achieves this by estimating the effect of one independent variable on the dependent variable while holding the values of all other independent variables constant.

The model thus evaluates the relationship between these two variables while effectively isolating it from the others. To control for a confounding variable, one only needs to include it in the model; each coefficient is then estimated with the other variables held constant.

Let us look at an example to understand the practical value of regression analysis. A regression study of coffee drinking and mortality was recently conducted. The analysis showed that the higher the coffee intake, the higher the risk of death. The initial model, however, did not account for the fact that a large number of coffee drinkers also smoked.

Once smoking was included, the analysis found that normal coffee drinking did not raise the risk of death and was in fact associated with a lower mortality rate. Smoking, on the other hand, did increase mortality, and the risk of death rose with the amount smoked. This is a good example of isolating the role of one variable by holding the other variables in the model constant.

Through this one example, we can study the effect of coffee drinking on the mortality rate while holding smoking constant, and the effect of smoking on the mortality rate while holding coffee drinking constant.
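The sign change described in this example can be reproduced on a small synthetic data set. The numbers below are invented purely for illustration and are not taken from the study: the risk score is constructed as 10 + 2 * smoking - 0.5 * coffee, and heavier coffee drinkers are made to smoke more. The helper `fit_ols` is a hypothetical minimal least-squares solver, not a library function.

```python
def fit_ols(rows, y):
    """Least-squares coefficients from the normal equations (X'X) b = X'y."""
    k = len(rows[0])
    # Augmented matrix [X'X | X'y]
    a = [
        [sum(row[i] * row[j] for row in rows) for j in range(k)]
        + [sum(row[i] * yi for row, yi in zip(rows, y))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting
    for col in range(k):
        pivot = max(range(col, k), key=lambda idx: abs(a[idx][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for idx in range(k):
            if idx != col:
                factor = a[idx][col] / a[col][col]
                a[idx] = [v - factor * p for v, p in zip(a[idx], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


# Synthetic data (assumption): coffee and smoking are positively related
coffee = [1, 2, 2, 3, 4, 5]
smoking = [0, 1, 0, 2, 2, 3]
risk = [10 + 2 * s - 0.5 * c for c, s in zip(coffee, smoking)]

# Model 1 omits smoking: columns are [intercept, coffee]
coffee_only = fit_ols([[1.0, c] for c in coffee], risk)

# Model 2 includes smoking: columns are [intercept, coffee, smoking]
adjusted = fit_ols([[1.0, c, s] for c, s in zip(coffee, smoking)], risk)

print("coffee coefficient, smoking omitted: ", coffee_only[1])  # positive
print("coffee coefficient, smoking included:", adjusted[1])     # close to -0.5
print("smoking coefficient:                 ", adjusted[2])     # close to 2
```

With smoking left out, coffee absorbs part of the effect of smoking and appears harmful. With smoking in the model, the coffee coefficient recovers the negative value used to build the data.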

In addition to the above findings, the study demonstrates how excluding just one relevant variable can lead to misleading and contradictory results. It is therefore crucial that the model include all relevant variables, so that the role of each can be isolated and controlled, for the regression results to be accurate.

Omitted and uncontrolled variables can bias the model. To reduce such bias, true experiments use randomization, which distributes the effects of these variables equally across groups and thereby limits the bias caused by omitted variables.

Conclusion:

Regression analysis can be very effective in predictive models. If you would like to learn more about this subject, you can take a data science course at Imarticus Learning, where you will master the subject and learn to apply the technique to real-life situations.