Many machine learning models are trained through supervised learning, in which the computer learns from labelled examples so that it can make useful predictions efficiently. One of the most widely used supervised methods is linear regression, and here's how it works.

Even the most diligent managers can make mistakes in organisations. Today, however, automation powers most industries, reducing costs, increasing efficiency, and minimising human error, and much of this is driven by the rising application of machine learning and artificial intelligence. So, what gives machines the ability to learn from and understand large volumes of data? The answer lies in learning methods such as linear regression, which you can master with the help of a dedicated data science course.

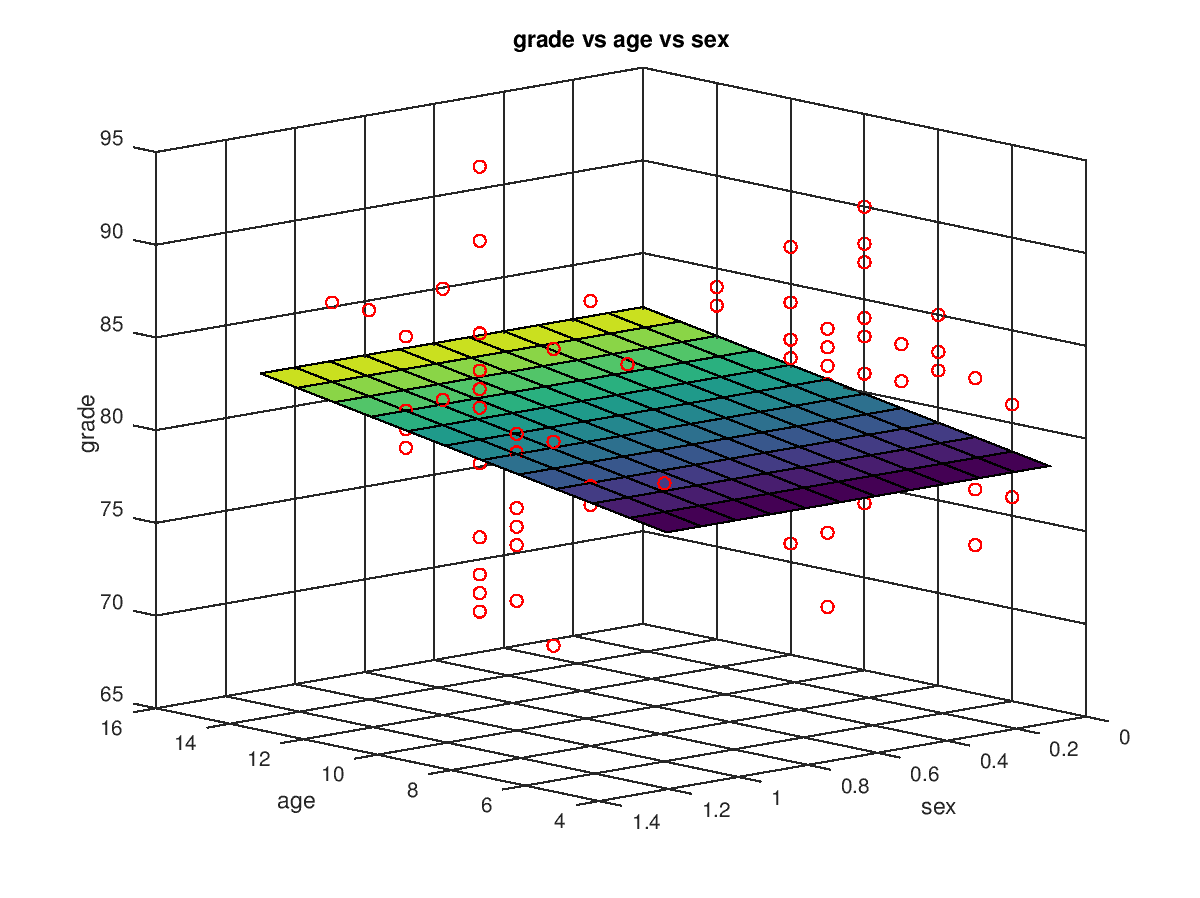

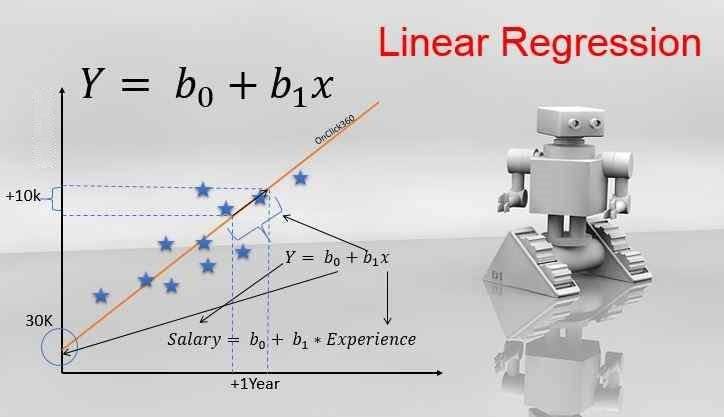

So, what is linear regression? Simply put, it is a supervised machine learning algorithm: the machine learns from examples where the correct answer is already known (labelled data) and uses them to predict outcomes for new data. Because machines can learn from such data and apply what they have learned consistently, they can greatly reduce human error.
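For reference, the standard textbook form of the model is shown below, with a dependent variable $y$, one or more independent variables $x_1, \dots, x_p$, coefficients $\beta_0, \dots, \beta_p$ learned from the labelled data, and an error term $\varepsilon$. "Learning" here means choosing the coefficients that minimise the sum of squared differences between observed and predicted values over $n$ training examples (ordinary least squares).

$$
y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_p x_p + \varepsilon
$$

$$
\hat{\beta} = \arg\min_{\beta} \sum_{i=1}^{n} \left( y_i - \beta_0 - \sum_{j=1}^{p} \beta_j x_{ij} \right)^2
$$

With a single independent variable ($p = 1$), the model reduces to a straight line, $y = \beta_0 + \beta_1 x$.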

Linear regression models the relationship between a dependent variable (the value you want to predict, such as sales) and one or more independent variables (the inputs that may influence it), and that relationship can then be used for forecasting. In other words, the model estimates how much the dependent variable changes when an independent variable changes. Those proficient in programming languages such as Python can import a ready-made linear regression model from the scikit-learn library, or write their own custom algorithm (in Python, C, or another language) before applying it. This makes linear regression highly customisable and relatively easy to learn. Organisations worldwide are investing heavily in linear regression training for their employees to prepare the workforce for the future.
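As a rough illustration of the "write your own algorithm" route, here is a minimal sketch that fits a straight line, y = intercept + slope × x, by ordinary least squares in plain Python, without scikit-learn. The function names `fit_simple_linear_regression` and `predict`, and the sample numbers, are hypothetical and chosen only for illustration.

```python
def fit_simple_linear_regression(xs, ys):
    """Return (intercept, slope) that minimise the sum of squared errors."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance of x and y divided by variance of x
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    slope = sxy / sxx
    # The least-squares line always passes through (mean_x, mean_y)
    intercept = mean_y - slope * mean_x
    return intercept, slope


def predict(intercept, slope, x):
    """Predict the dependent variable for a new value of x."""
    return intercept + slope * x


# Hypothetical data: independent variable xs, dependent variable ys
xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]  # constructed to follow y = 1 + 2x exactly

intercept, slope = fit_simple_linear_regression(xs, ys)
print(intercept, slope)
print(predict(intercept, slope, 6))

# With scikit-learn installed, the equivalent fit would be roughly:
#   from sklearn.linear_model import LinearRegression
#   model = LinearRegression().fit([[x] for x in xs], ys)
#   model.intercept_, model.coef_
```

The hand-written version makes the arithmetic visible; in practice, scikit-learn's `LinearRegression` handles multiple variables and edge cases for you.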

The top benefits of linear regression in machine learning are as follows.

Forecasting

A top advantage of using a linear regression model in machine learning is the ability to forecast trends and make reasonable predictions. Data scientists can use these predictions to draw further conclusions. The approach is quick and efficient, largely because machines can process large volumes of data with minimal human intervention, and it is accurate when the underlying relationship is roughly linear. Once the model has been trained, generating new predictions is straightforward.

Beneficial to small businesses

By changing one or two input variables in the model, a business can estimate their impact on sales. Because linear regression is inexpensive to deploy, it is particularly useful for small businesses, which can use it to make short- and long-term sales forecasts. This helps them plan their resources well and map out a growth trajectory. They can also better understand the market, its preferences, and patterns of supply and demand.
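To illustrate the "change one or two variables" idea, the sketch below fits a two-variable model, sales ≈ b0 + b1 × ad_spend + b2 × price, by ordinary least squares (solved through the normal equations) in plain Python, then compares predicted sales before and after a one-unit price increase. The choice of advertising spend and price as drivers, all function names, and the data are hypothetical; the data were constructed from sales = 50 + 3 × ad_spend − 2 × price so the fitted coefficients can be checked.

```python
def solve_linear_system(a, b):
    """Solve a·x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the inputs are not modified
    m = [row[:] + [b_i] for row, b_i in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("features are collinear; no unique solution")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_multiple_linear_regression(rows, ys):
    """OLS via the normal equations (X^T X) b = X^T y.

    rows: one list of feature values per observation; a column of 1s
    is prepended so the first returned coefficient is the intercept.
    """
    X = [[1.0] + [float(v) for v in row] for row in rows]
    k = len(X[0])
    xtx = [[sum(r[i] * r[j] for r in X) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(X, ys)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Hypothetical weekly data for a small shop
ad_spend = [10, 12, 15, 18, 20, 25]
price = [5, 6, 5, 7, 6, 8]
sales = [70, 74, 85, 90, 98, 109]

intercept, b_ads, b_price = fit_multiple_linear_regression(
    list(zip(ad_spend, price)), sales
)


def predict_sales(ads, unit_price):
    """Predicted sales for a given advertising spend and price."""
    return intercept + b_ads * ads + b_price * unit_price


# What-if: keep ad spend at 20 and raise the price from 6 to 7
change = predict_sales(20, 7) - predict_sales(20, 6)
print(f"b_ads={b_ads:.2f}, b_price={b_price:.2f}, change={change:.2f}")
```

Each coefficient estimates how much sales move when that one input changes and the other is held fixed. For real datasets, a library routine such as scikit-learn's `LinearRegression` or `numpy.linalg.lstsq` is usually preferable, because solving the normal equations directly can be numerically unstable when the input variables are highly correlated.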

Preparing Strategies

Since machine learning enables prediction, one of the biggest advantages of a linear regression model is that it lets companies prepare a strategy for a given situation well in advance and analyse various outcomes. The forecasts it produces provide meaningful information that helps companies plan strategically and make executive decisions.

Conclusion

Linear regression is one of the most widely used machine learning methods in the world, and it helps businesses prepare for a volatile and dynamic environment. At Imarticus Learning, we offer a dedicated data science course for aspiring data scientists and data analysts like you.

Frequently Asked Questions

Why should I go for a data science course?

The field of data science has the potential to enhance our lifestyle and professional endeavours, empowering individuals to make more informed decisions, tackle complex problems, uncover innovative breakthroughs, and confront some of society’s most critical challenges. A career in data science positions you as an active contributor to this transformative journey, where your skills can play a pivotal role in shaping a better future.

What is a data science course in general?

Data science encompasses studying and analysing extensive datasets through contemporary tools and methodologies, aiming to unveil concealed patterns, extract meaningful insights, and facilitate informed business decision-making. Intricate machine learning algorithms are leveraged to construct predictive models within this domain, showcasing the dynamic intersection of data exploration and advanced computational techniques.

What salary can I expect after a data science course?

In India, salaries for data scientists range from ₹3.9 lakh to ₹27.9 lakh, with an average annual income of ₹14.3 lakh. These estimates are derived from the latest data, based on inputs from 38.9k individuals working in data science.

As machines become more intelligent and better able to perform tasks accurately, it is predicted that over the next few years close to three million workers will report to, or be supervised by, "robot bosses".