Understanding the nuances of data collection methods is critical for anyone who wants to harness the power of data. This blog explores and clarifies the main approaches used in data collection and preparation.

Collecting data effectively is essential: it is the foundation on which sound analysis and conclusions rest. Valid data is crucial for scientific research, market analysis, and policy development.

This blog looks at a range of data collection methods, such as surveys, interviews, observational research, and data mining. It demystifies the process and offers readers a practical guide to finding reliable, relevant data.

If you want to pursue a career in data science or take up a data analytics certification course, you can use this blog to understand various data collection methods.

What is Data Collection?

Data collection is a systematic means of gathering and combining information or data from numerous sources for purposes of analysis, research, or decision-making. It is a vital stage in both professional and academic settings, laying the groundwork for significant discoveries and informed choices.

Data collection is the planned, systematic acquisition of data, which may include numerical facts, qualitative details, or other pertinent information. Data can be obtained through primary methods such as surveys, interviews, and experiments, or from secondary sources such as existing databases, literature reviews, and historical records.

To ensure that the data obtained is valid, reliable, and relevant, the process requires meticulous planning and execution. It also incorporates ethical principles, data security measures, and quality control techniques.
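As a small illustration of quality control during collection, the Python sketch below checks individual survey responses for missing values and out-of-range answers before they enter a dataset. The record layout (`SurveyResponse`), the adult-only age range, and the 1-5 rating scale are hypothetical assumptions chosen for the example, not part of any standard.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SurveyResponse:
    """One survey response (hypothetical layout for this example)."""
    respondent_id: str
    age: Optional[int]
    satisfaction: Optional[int]  # assumed 1-5 rating scale


def validate(response: SurveyResponse) -> list[str]:
    """Return a list of quality problems found in one response (empty if none)."""
    problems = []
    if not response.respondent_id:
        problems.append("missing respondent_id")
    if response.age is None:
        problems.append("missing age")
    elif not 18 <= response.age <= 120:  # assumed adult-only study population
        problems.append(f"age out of range: {response.age}")
    if response.satisfaction is None:
        problems.append("missing satisfaction")
    elif not 1 <= response.satisfaction <= 5:
        problems.append(f"satisfaction not on 1-5 scale: {response.satisfaction}")
    return problems


responses = [
    SurveyResponse("r1", 34, 4),
    SurveyResponse("r2", 17, 5),
    SurveyResponse("r3", None, 9),
]
for r in responses:
    print(r.respondent_id, validate(r))
```

In a real project, flagged responses would usually be reviewed or followed up with the respondent rather than silently dropped.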

Data collection is the first step towards understanding complex events, finding patterns, making evidence-based decisions, and expanding knowledge across many domains. Its significance cannot be overemphasised, since the quality of the data obtained largely determines the validity and reliability of any subsequent analysis and results.

Different Methods of Data Collection

If you want to pursue a data science course, you should know the two broad categories of data collection methods:

1. Primary Data Collection

Primary data collection means gathering new, first-hand information directly from sources through surveys, interviews, observations, or experiments. It can produce both quantitative and qualitative data. Because researchers design the collection themselves, they can tailor it to their specific requirements and study objectives. This improves accuracy and relevance and avoids some of the biases and mismatches that arise when reusing data gathered for other purposes.

2. Secondary Data Collection

Secondary data collection involves using information that others have already collected, from sources such as published research papers, government reports, and databases. Researchers use this approach to analyse and interpret existing data without gathering new data. It offers insights into historical trends and supports comparisons and large-scale statistical analysis, making it a powerful tool for informed decision-making and for validating studies in sectors ranging from academia to industry.
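To make secondary analysis concrete, the sketch below reads a small CSV extract standing in for a published dataset and computes a yearly figure from it. The column names and values are invented for illustration; with real secondary data you would open the downloaded file instead. The function weights each region's average by the number of households surveyed, because a plain mean of averages would give small samples as much weight as large ones.

```python
import csv
import io
from collections import defaultdict

# Stand-in for a previously published dataset (hypothetical columns and values).
EXISTING_DATA = """region,year,households_surveyed,avg_monthly_spend
North,2021,300,16000
South,2021,100,12000
North,2022,200,17000
South,2022,200,15000
"""


def spend_by_year(csv_text: str) -> dict[str, float]:
    """Household-weighted average monthly spend per year, pooled across regions."""
    spend_sum: dict[str, float] = defaultdict(float)
    households: dict[str, int] = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        n = int(row["households_surveyed"])
        # Convert each regional average back into a total before pooling.
        spend_sum[row["year"]] += n * float(row["avg_monthly_spend"])
        households[row["year"]] += n
    return {year: spend_sum[year] / households[year] for year in sorted(households)}


print(spend_by_year(EXISTING_DATA))  # → {'2021': 15000.0, '2022': 16000.0}
```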

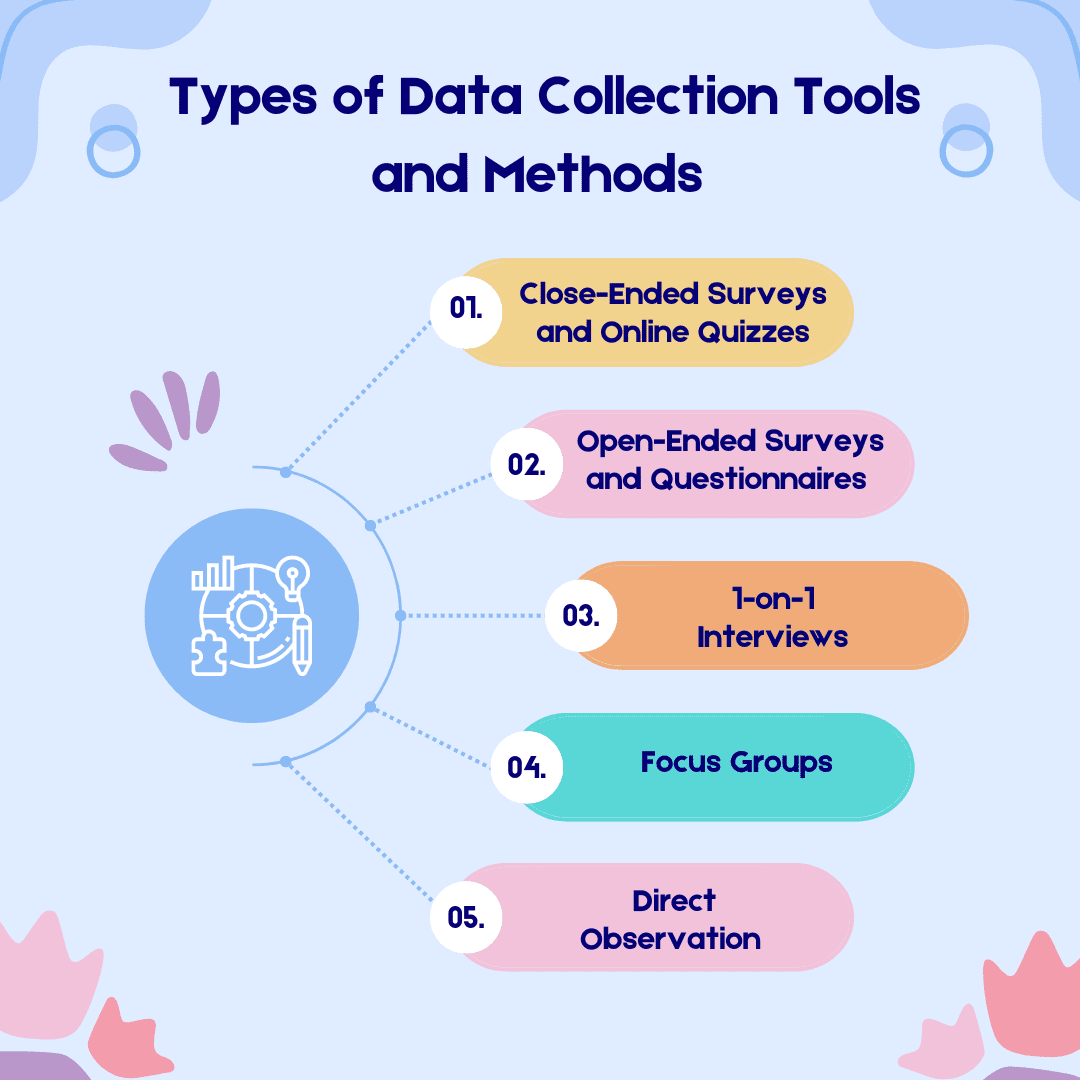

Types of Data Collection Tools and Methods

Data analytics and data science courses cover a range of data collection methods and tools. If you aspire to become a data analyst or plan to take up a data analytics certification course, the following methods will be especially useful.

1. Closed-Ended Surveys and Online Quizzes

Closed-ended surveys and online quizzes are data collection methods that use structured questions with predefined answer options. Because participants choose from these options, responses can be counted and compared directly, which simplifies analysis. Closed-ended questionnaires are widely used in market research and for gathering consumer feedback.

Online quizzes, which are common in education and assessment, gather data efficiently and can give participants immediate feedback. Both methods are well suited to collecting quantitative data quickly.
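Because closed-ended answers come from a fixed list, analysing them can be as simple as counting. This sketch uses a hypothetical four-option satisfaction question and made-up answers; it rejects any answer outside the preset options and reports the percentage of respondents choosing each one.

```python
from collections import Counter

# Preset answer options for one closed-ended question (hypothetical).
OPTIONS = ["Very satisfied", "Satisfied", "Neutral", "Dissatisfied"]

answers = ["Satisfied", "Very satisfied", "Satisfied", "Neutral", "Satisfied", "Dissatisfied"]


def summarise(answers: list[str], options: list[str]) -> dict[str, float]:
    """Percentage of respondents choosing each option, in questionnaire order."""
    invalid = [a for a in answers if a not in options]
    if invalid:
        raise ValueError(f"answers outside the preset options: {invalid}")
    if not answers:
        return {opt: 0.0 for opt in options}
    counts = Counter(answers)
    total = len(answers)
    return {opt: round(100 * counts[opt] / total, 1) for opt in options}


print(summarise(answers, OPTIONS))
```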

2. Open-Ended Surveys and Questionnaires

Open-ended surveys and questionnaires are important data collection techniques. They ask open-ended questions that invite detailed, free-text answers, producing rich qualitative data. Researchers use them to gather in-depth insights, opinions, and perspectives on complex issues. Unlike closed-ended questions, they give participants room to explain their views, which makes them useful for exploratory research, qualitative analysis, and uncovering unexpected findings.
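Free-text answers usually need to be coded into themes before they can be compared. Qualitative coding is normally done by people, but a keyword-based first pass like the one below can help sort large batches of responses. The codebook and replies are hypothetical; a response that matches no theme comes back as an empty set so it can be set aside for manual review.

```python
import re

# Hypothetical codebook: each theme maps to keywords that signal it.
CODEBOOK = {
    "price": {"price", "expensive", "cheap", "cost"},
    "delivery": {"delivery", "late", "shipping", "courier"},
    "support": {"support", "helpdesk", "agent", "service"},
}


def code_response(text: str) -> set[str]:
    """Return the themes whose keywords appear in a free-text response."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return {theme for theme, keywords in CODEBOOK.items() if words & keywords}


replies = [
    "Delivery was late and the courier never called.",
    "Great product, but too expensive for students.",
    "Loved the colours!",
]
for reply in replies:
    print(sorted(code_response(reply)), "-", reply)
```

Keyword matching misses synonyms and context such as negation, so its output is best treated as a starting point for human coding rather than a final result.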

3. 1-on-1 Interviews

One-on-one interviews are an effective method for collecting data. An experienced interviewer talks with a single respondent, either asking predefined questions or exploring particular themes in depth. This yields rich qualitative data about personal experiences, views, and feelings. One-on-one interviews are common in the social sciences, market research, and other qualitative investigations because they provide a thorough understanding of individual viewpoints and nuanced information.

4. Focus Groups

Focus groups are a qualitative data collection method in which a moderator leads a small group of participants in a discussion of a particular topic or issue. This approach surfaces a wide range of viewpoints, ideas, and insights. Focus groups are especially effective for investigating complex social problems, customer behaviour, or product feedback. The detailed qualitative data they produce helps researchers understand underlying motives, attitudes, and views, supporting better-informed decisions and research findings.

5. Direct Observation

Direct observation is a data collection method in which researchers watch and record events, behaviours, or phenomena as they occur. It provides real-time, first-hand insight into what people actually do, which makes it useful in fields such as psychology, anthropology, and market research. Because it reduces reliance on participants' self-reports, it can improve data accuracy. Researchers use structured procedures, such as predefined coding schemes, to record and analyse observations systematically, which helps ensure objectivity and reproducibility.
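One common way to check that structured observation is reproducible is to have two observers code the same events independently and measure how far they agree beyond chance, for example with Cohen's kappa, defined as (observed agreement - chance agreement) / (1 - chance agreement). The sketch below implements that statistic; the "on-task"/"off-task" categories and the ten coded events are hypothetical.

```python
from collections import Counter


def cohens_kappa(coder_a: list[str], coder_b: list[str]) -> float:
    """Chance-corrected agreement between two observers who coded the same events."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must rate the same non-empty set of events")
    n = len(coder_a)
    # Share of events where the two observers chose the same category.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Agreement expected by chance, from each observer's category frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    if expected == 1:
        return 1.0  # convention: both used one identical category, kappa is otherwise undefined
    return (observed - expected) / (1 - expected)


observer_1 = ["on-task"] * 6 + ["off-task"] * 4
observer_2 = ["on-task"] * 5 + ["off-task"] * 4 + ["on-task"]
print(round(cohens_kappa(observer_1, observer_2), 3))  # → 0.583
```

Here the observers agree on 8 of 10 events (0.80), chance agreement is 0.52, and kappa comes out at about 0.58. Low values suggest the coding scheme or observer training needs tightening.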

Ethical Considerations in Data Collection Methods

Ethical considerations are critical in data collection, not least for compliance with Indian legislation. Researchers must obtain informed consent from participants, ensuring that they understand the purpose of the study and any potential risks.

Privacy and confidentiality must be strictly maintained in line with laws such as the Information Technology Act, 2000 and the Digital Personal Data Protection Act, 2023, which superseded the earlier Personal Data Protection Bill. Data anonymisation and secure storage practices are also essential for safeguarding sensitive information. Upholding ethical standards in data collection builds trust, ensures legal compliance, and protects the rights and dignity of everyone involved.
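A technique closely related to anonymisation is pseudonymisation: replacing direct identifiers such as email addresses with consistent codes, so records can still be linked without revealing who they belong to. The sketch below uses a keyed hash (HMAC-SHA256 from Python's standard library); the record fields and the key handling are assumptions for illustration. Pseudonymised data can sometimes be re-identified by combining other fields, so on its own it may not count as fully anonymised.

```python
import hashlib
import hmac
import secrets

# Assumption: in practice the key is generated once and stored securely, away
# from the data (e.g. in a secrets manager). Here a throwaway key is generated.
PSEUDONYM_KEY = secrets.token_bytes(32)


def pseudonymise(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a keyed, repeatable pseudonym."""
    normalised = identifier.strip().lower().encode("utf-8")
    digest = hmac.new(key, normalised, hashlib.sha256)
    return digest.hexdigest()[:16]  # 16 hex characters = 64 bits


record = {"email": "asha@example.com", "city": "Pune", "rating": 4}
safe_record = {**record, "email": pseudonymise(record["email"])}
print(safe_record)
```

A keyed hash is used rather than a plain hash because identifiers like email addresses are easy to guess and hash; without the secret key, the pseudonyms cannot be recomputed. Truncating to 16 hex characters keeps codes short while making collisions very unlikely at survey scale.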

Conclusion

Data collection and preparation are indispensable skills for thriving in today's data-driven environment. They enable individuals and organisations to gain useful insights, make informed decisions, and advance in a variety of disciplines. By mastering these approaches and following best practices, they can harness data to shape a more informed future.

If you wish to become a data analyst and build a career in data science, check out Imarticus Learning’s Postgraduate Program In Data Science And Analytics. The course offers 100% job assurance and covers various data collection methods in detail.

To know more, check out the website right away.