Data storytelling is a methodology for conveying information to a specific audience through a narrative. It makes data insights understandable to colleagues by using natural-language statements and storytelling. It combines three key elements: data, visuals, and narrative.

Data storytelling translates the results of data analysis into plain language so that non-analytical people can understand them too. Within a firm, it keeps employees better informed and supports better business decisions. Let us look at why data storytelling is an important analytical skill and how it helps in building a successful Big Data career.

Benefits of Data Storytelling

The benefits of data storytelling are as follows:

- Stories have always been an important part of human civilization, and people grasp context more easily through a story. Complex data sets can be visualized, and the resulting insights can be shared simply, as a story, with non-analytical people too.

- Data storytelling supports informed decision-making: stakeholders can understand the insights and are more easily persuaded to act on them.

- Data analytics is about numbers and insights, but data storytelling makes the results of your analysis more engaging.

- The risks associated with a particular process can be explained to stakeholders and employees in simple terms.

- According to some reports, more data has been produced since 2013 than in all of prior human history. To manage this big data and make its insights accessible to everyone, data storytelling is a must.

Tips for Making a Better Data Story

- If you run an organization, make sure to involve stakeholders and investors in data storytelling. This makes communication clearer and ensures they do not feel under-informed.

- Pair numerical values with interesting plots in a data story. Our brains process visual information faster than raw numbers, so a story built only on numerical insights will be dull and harder to follow. Convey the insights in plain language.

- Use data visualization in data storytelling, but do not let it hide the critical highlights in the data set.

- Make sure you include all three aspects of data storytelling: visuals, data, and narrative. Too much of any one of them can reduce the effectiveness of your data story.

- Outliers and exceptions in the data set should be analyzed and included in your data story.
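
As a rough illustration of the tips on plain language and outliers, here is a minimal Python sketch. It is only one possible approach, not a prescribed method: the function names `find_outliers` and `narrate`, the sample `monthly_sales` figures, and the choice of the 1.5 × IQR rule for flagging outliers are all assumptions made for this example.

```python
from statistics import median, quantiles


def find_outliers(values):
    """Flag values outside 1.5 * IQR of the quartiles (an assumed rule of thumb)."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [v for v in values if v < low or v > high]


def narrate(label, values):
    """Turn a list of numbers into a short plain-language statement."""
    sentence = f"Typical {label} was about {median(values):g}."
    outliers = find_outliers(values)
    if outliers:
        listed = ", ".join(f"{v:g}" for v in outliers)
        sentence += f" Unusual values worth explaining: {listed}."
    return sentence


# Hypothetical figures, invented purely for illustration.
monthly_sales = [120, 125, 118, 130, 122, 127, 310, 124]
story = narrate("monthly sales", monthly_sales)
print(story)
```

A sentence like this would normally sit next to a chart of the same values, so that the narrative, the visual, and the data support each other.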

The Growing Need for Data Storytelling

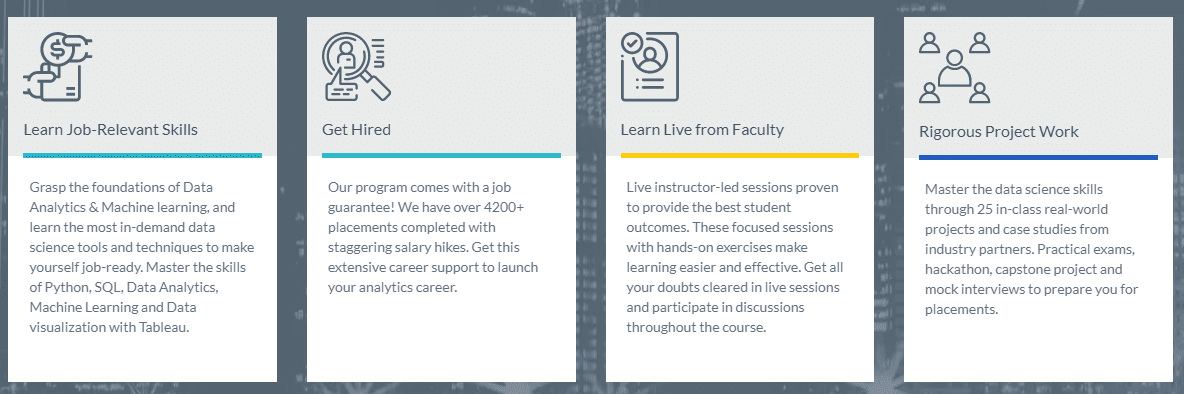

New approaches to data analytics, such as augmented analytics and data storytelling, have grown rapidly in recent years because of the high rate at which firms and businesses produce data. You can learn analytical skills through a Data Analytics course from Imarticus Learning. To build a successful Big Data career, you will need to learn these new concepts in data analytics.

Conclusion

Imarticus Learning is one of the leading online course providers in the country. You can learn key skills from industry experts through a Data Analytics course offered by Imarticus Learning. Start learning data storytelling now!