With Amazon’s facial recognition, Face ID, the use of facial recognition at airports and on smartphones, police use of TASERs to immobilize suspects, and voice-cloning apps, the aim of peer-to-peer networks to create a transparent data system through increased use of AI seems to have gained wide acceptance.

Artificial intelligence applications have won favor for their ease of operation: fast, high-volume data processing, strong identification capabilities, and flexibility to be adapted to new uses.

Transparency, however, has been much discussed and often flouted with impunity where privacy, ethics, legal compliance, and misuse are concerned. The sale of data to third parties, forced use of facial recognition, misuse of voice cloning, and excessive use of TASERs have not led to data accountability. Instead, AI has become a source of nagging fear about constant government surveillance, coming close to defeating the very transparency it was meant to create.

The following 2018 trends may shape the use of AI and the transparent use of data, which governments and companies around the world are currently vying to harness and control.

AI becomes the political focus

Some argue that AI creates jobs, while others say they have lost work because of it. A case in point is self-driving trucks and cars, which, according to CNBC reports, cost more than 25,000 workers their jobs each year. The same is true of large warehouses that now operate with very few employees. If President Trump’s 2016 campaign was about immigration and globalization, the 2018 midterms may focus on rising unemployment caused by AI.

Transparent peer-to-peer networks will use blockchains

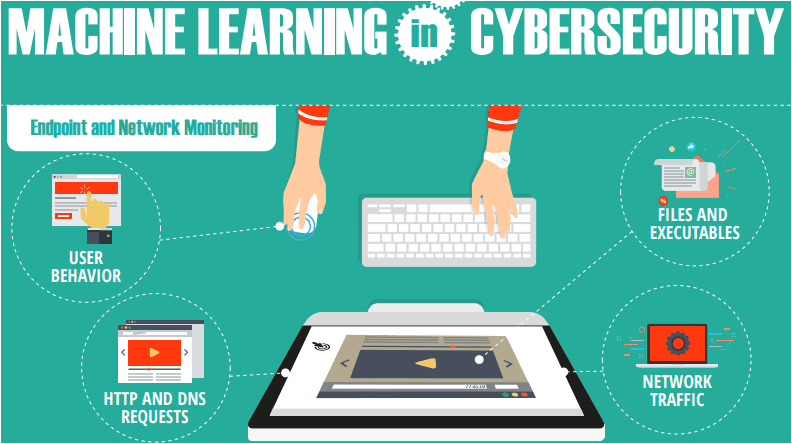

Machine learning (ML) and AI used together are proving useful in services such as Google and Facebook, where enormous amounts of data are processed in fractions of a second to support decision-making. However, the transparency of that decision-making has been under a cloud, and it is not in users’ control.

Peer-to-peer networks using blockchain technology have transformed the financial sector and are set to help small industries and financial organisations operate transparently. For example, Presearch uses AI and peer-to-peer networking to bring transparency to search engines.

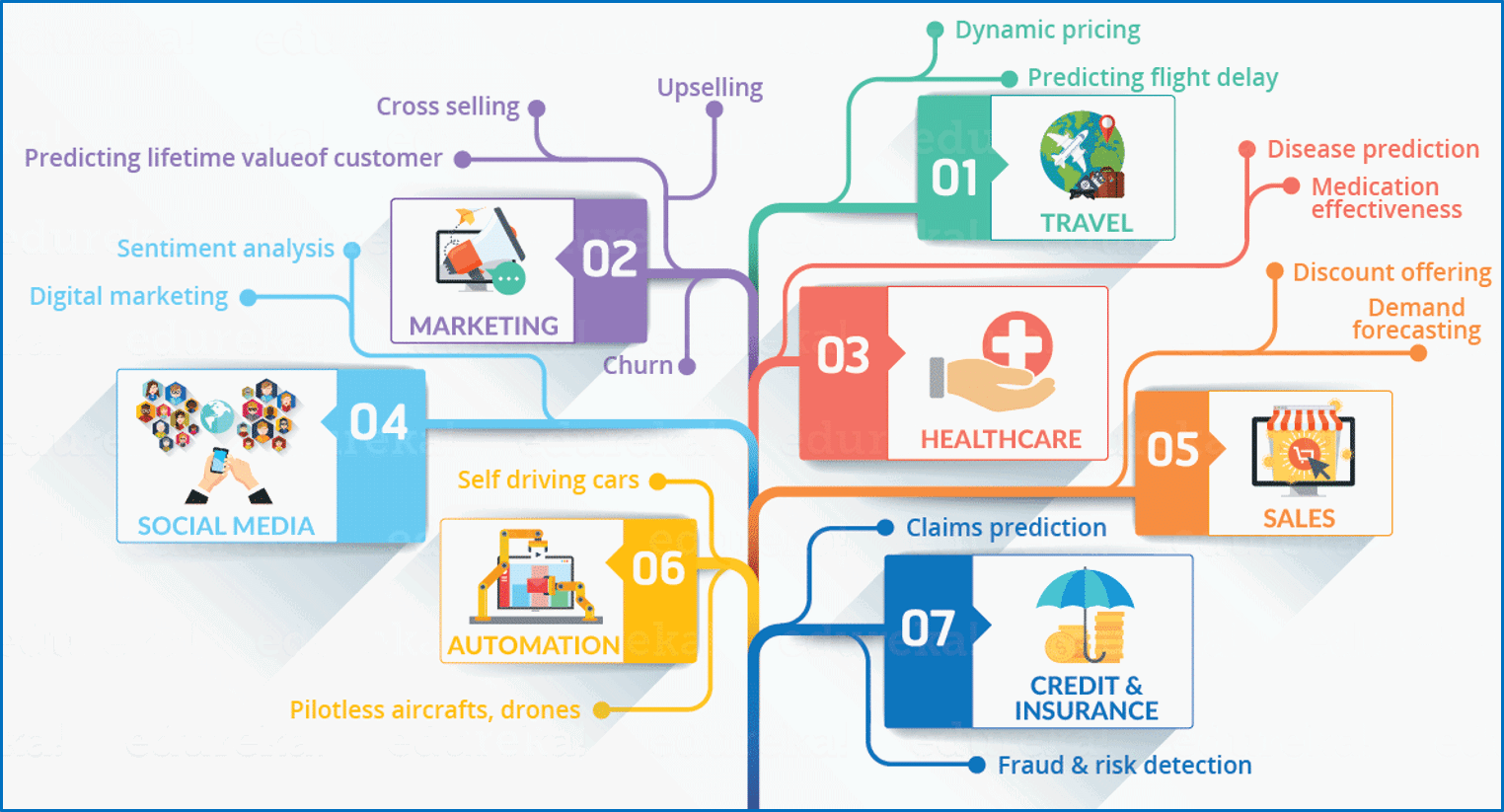

Other interesting trends that use peer-to-peer networks and AI to improve efficiency, transparency, productivity, and profits include:

Logistics and delivery efficiency will increase.

Self-driving cars will take off.

Robo-cops will go into action.

AI will create content.

Consumers and technology will become buddies.

Data scientists will be in greater demand than engineers.

ML will aid workers rather than replace them.

AI will aid the development of the health sector.

Siri, Alexa, and Google Assistant show that AI can now handle increasingly nuanced conversation. The creation of robots, chatbots, and similar systems has raised questions about morality, the displacement of workers, and whether ML can be controlled at all so that machines do what we humans tell them to do. The matter has become a debate of human wisdom versus AI intelligence. Our moral sense, our awareness of how intelligence can be misused, and our subjective experience allow us to feel, to act ethically, and to use AI-derived data transparently.

In conclusion, peer-to-peer networks, AI, ML, data analytics, and predictive technologies are here to stay, and their growing use can lead to greater transparency in data transactions across sectors. Human wisdom and morality are the traits that set us humans apart from our intelligent creations, whose data-processing and learning capabilities can quickly spin out of control if these traits are not used to restrain AI.