3 Tips on Building a Successful Online Course in Data Science!

The coronavirus pandemic is undoubtedly one of the biggest disruptors of lives and livelihoods this year. Thousands of businesses, shops and universities have been forced to shut down to curb the spread of the virus; as a result, huge numbers of people have turned to working from their home desks to tide over the crisis.

The pandemic has also sparked a new wave of interest in online courses. Over the past few months, many small and large-scale ed-tech companies have sprouted up, offering learners a wider range of choices than ever before. Many institutions have chosen to offer their courses at a minimal price, and others for free. The formats of these classes vary (hands-on, theoretical, philosophical, or interactive), but the ultimate goal is to take learning online and democratize it.

Naturally, it’s an opportune time to explore the idea of creating an online course, and a data science course in particular, as emerging technologies are likely to draw far more attention over the next few years.

Here are a few tips to get the ball rolling on your first-ever online course in data science:

- Create a Curriculum

Data science is a nuanced and complex field, so a single course that tries to cover all of it won’t do. It is important to decide on the scope of your course. You will need to identify which topics you will cover, which industry you want to target (if any), which tools you might need to talk about, and how best to deliver your course content to engage students.

General courses are ideal for beginners who don’t know the first thing about data science. This type of course could cover the scope of the term, the industries it’s used in, job opportunities, and must-have skills for aspirants.

Technical courses can take a single software tool and break it down; this is also a great space to encourage experiments and hands-on projects. Niche courses can deal with the uses and advantages of data science within a particular industry, such as finance or healthcare.

- Choose a Delivery Method

There is a plethora of ed-tech platforms to choose from, so make a list of what is most important to you to avoid getting overwhelmed. Consider how interactive you can make your course through the use of:

- Live videos

- Video-on-demand

- Webinars

- Panels

- Expert speakers

- Flipped classroom

- Peer reviews

- Private mentorship

- Assessments

- Hackathons

Another primary draw of online classrooms is their flexibility. Consider opting for a course style that allows students to learn at their own pace and time. At the same time, make use of the formats listed above to foster a healthily competitive learning environment.

- Seek Industry Partnerships

An excellent way to up the ante on your course and set it apart from regular platforms is to partner with an industry leader in your selected niche. This has many advantages: it lends credibility to your course, brings in a much-needed insider perspective, and allows students to interact outside of strict course setups. Additionally, the branding of an industry leader on your certification is a testament to the value of your course; students are more likely to choose a course like yours if this certification is pivotal to their career.

Other ways to build an industry partnership include inviting company speakers, organising crash courses on industry software, and even setting up placement interviews at these companies. The more you can help students get a foot in the door, the higher the chances of them enrolling in and recommending your course.

Conclusion

Building an online course in data science is no mean feat. However, it’s a great time to jump into the ed-tech and online learning industry, so get ready to impart your knowledge!

Data literacy gives people the power and the evidential backing to call out those intentionally or unintentionally propagating mistruths and fallacies through awry statistics. This way, data literacy plays a pivotal part in politics, economics and ethics of a society, indeed of the world.

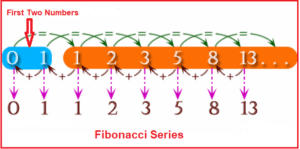

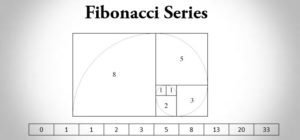

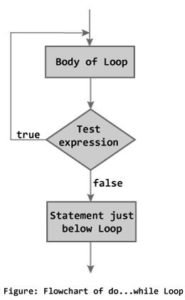

Fibonacci using While Loop

Fibonacci using While Loop Application of Fibonacci Series

Application of Fibonacci Series