Data is changing how the world works. Its uses range from shaping a company's revenue strategy to supporting research into disease cures, and it also drives the targeted ads you see on your social media feed. In short, data now underpins a large share of everyday decisions.

But what is data? Here, data refers to information recorded in a form that machines can read and process. Because machines can process it quickly and at scale, it makes work easier and improves how teams operate.

Data can be used in many ways, but it is of little use without data modelling, data engineering and, of course, machine learning. These disciplines give data structure and relationships, simplify it, and turn it into useful information that supports decision-making.

The Role of Data Modelling and Data Engineering in Data Science

Data modelling and data engineering are two of the essential skills of data analysis. Although the two terms might sound synonymous, they are not the same.

Data modelling deals with designing and defining processes, structures, constraints and relationships of data in a system. Data engineering, on the other hand, deals with maintaining the platforms, pipelines and tools of data analysis.

Both of them play a very significant role in the niche of data science. Let's see what they are:

Data Modelling

- Understanding: Data modelling helps scientists to decipher the source, constraints and relationships of raw data.

- Integrity: Data modelling is crucial when it comes to identifying the relationship and structure which ensures the consistency, accuracy and validity of the data.

- Optimisation: Data modelling helps to design data models which would significantly improve the efficiency of retrieving data and analysing operations.

- Collaboration: Data modelling acts as a common language amongst data scientists and data engineers which opens the avenue for effective collaboration and communication.

Data Engineering

- Data Acquisition: Data engineering helps engineers to gather and integrate data from various sources so that it can be fed into pipelines and retrieved later.

- Data Warehousing and Storage: Data engineering helps to set up and maintain different kinds of databases and store large volumes of data efficiently.

- Data Processing: Data engineering helps to clean, transform and preprocess raw data to make an accurate analysis.

- Data Pipeline: Data engineering builds and maintains data pipelines that automate the flow of data from source to storage and process it with robust analytics tools.

- Performance: Data engineering primarily focuses on designing efficient systems that handle large-scale data processing and analysis while fulfilling the needs of data science projects.

- Governance and Security: The principles of data engineering involve varied forms of data governance practices that ensure maximum data compliance, security and privacy.

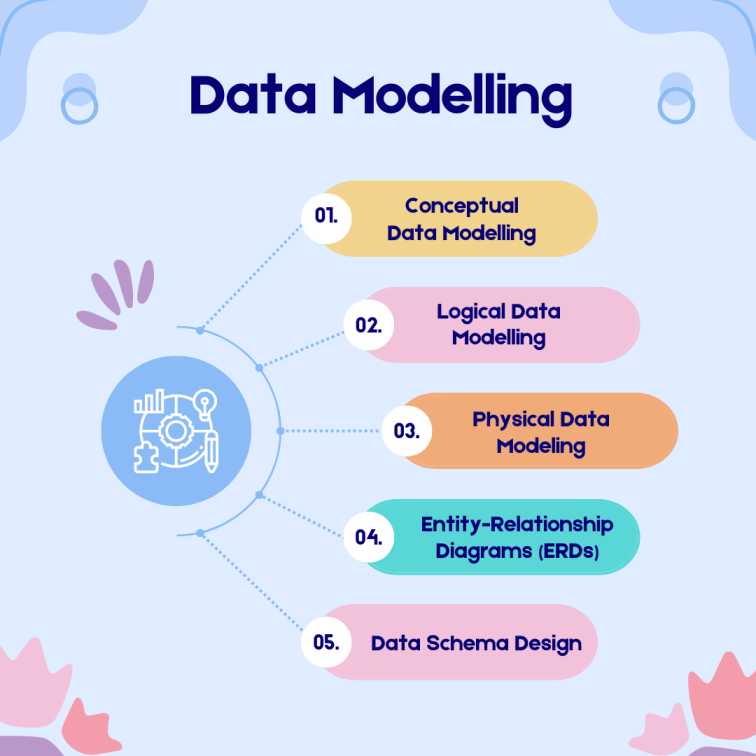

Understanding Data Modelling

Data modelling comes in different categories, each with its own characteristics. Let's look at these aspects of data modelling in detail, as they are covered in a Data Scientist course with placement.

Conceptual Data Modelling

The process of developing an abstract, high-level representation of data items, their attributes, and their connections is known as conceptual data modelling. Without delving into technical implementation specifics, it is the first stage of data modelling and concentrates on understanding the data requirements from a business perspective.

Conceptual data models serve as a communication tool between stakeholders, subject matter experts, and data professionals and offer a clear and comprehensive understanding of the data. In the data modelling process, conceptual data modelling is a crucial step that lays the groundwork for data models that successfully serve the goals of the organisation and align with business demands.

Logical Data Modelling

After conceptual data modelling, logical data modelling is the next level in the data modelling process. It entails building a more intricate and organised representation of the data while concentrating on the logical connections between the data parts and ignoring the physical implementation details. Business requirements can be converted into a technical design that can be implemented in databases and other data storage systems with the aid of logical data models, which act as a link between the conceptual data model and the physical data model.

Overall, logical data modelling is essential to the data modelling process because it serves as a transitional stage between the high-level conceptual model and the actual physical data model implementation. The data is presented in a structured and thorough manner, allowing for efficient database creation and development that is in line with business requirements and data linkages.

Physical Data Modelling

Following conceptual and logical data modelling, physical data modelling is the last step in the data modelling process. It converts the logical data model into a particular database management system (DBMS) or data storage technology. At this point, the emphasis is on the technical details of how the data will be physically stored, arranged, and accessed in the selected database platform rather than on the abstract representation of data structures.

Overall, physical data modelling acts as a blueprint for logical data model implementation in a particular database platform. In consideration of the technical features and limitations of the selected database management system or data storage technology, it makes sure that the data is stored, accessed, and managed effectively.

Entity-Relationship Diagrams (ERDs)

The relationships between entities (items, concepts, or things) in a database are shown visually in an entity-relationship diagram (ERD), which is used in data modelling. It is an effective tool for comprehending and explaining a database's structure and the relationships between various data pieces. ERDs are widely utilised in many different industries, such as data research, database design, and software development.

Take a library database as an example: books, members, and loans are the entities, each has attributes such as a title or a name, and a loan relates a member to a book. The ERD would represent these entities, attributes, and relationships graphically, giving a clear overview of the database structure for the library. Because they give a precise representation of the database design, ERDs are a crucial tool for data modellers, database administrators, and developers who need to deploy and maintain databases properly.
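As a rough illustration, the entities and relationships an ERD captures for a library could be translated into tables. The sketch below uses Python's built-in `sqlite3` module; the table and column names (`member`, `book`, `loan`) are hypothetical and chosen only for this example.

```python
import sqlite3

# Hypothetical library schema: two entities (member, book) and one
# relationship (loan) that links them, as an ERD would show.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves foreign-key checks off by default
conn.executescript("""
    CREATE TABLE member (member_id INTEGER PRIMARY KEY, name  TEXT NOT NULL);
    CREATE TABLE book   (book_id   INTEGER PRIMARY KEY, title TEXT NOT NULL);
    CREATE TABLE loan (
        loan_id   INTEGER PRIMARY KEY,
        member_id INTEGER NOT NULL REFERENCES member(member_id),
        book_id   INTEGER NOT NULL REFERENCES book(book_id)
    );
""")

conn.execute("INSERT INTO member VALUES (1, 'Asha')")
conn.execute("INSERT INTO book VALUES (10, 'Data Modelling Basics')")
conn.execute("INSERT INTO loan VALUES (100, 1, 10)")

# Follow the relationships to answer "who borrowed what?"
rows = conn.execute("""
    SELECT member.name, book.title
    FROM loan
    JOIN member ON member.member_id = loan.member_id
    JOIN book   ON book.book_id     = loan.book_id
""").fetchall()

# A loan that points at a non-existent member violates the relationship.
try:
    conn.execute("INSERT INTO loan VALUES (101, 99, 10)")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True
```

Here the foreign keys play the role of the lines drawn between boxes in the diagram: the database refuses a loan that refers to a member who does not exist.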

Data Schema Design

Data schema design is a crucial component of database architecture and data modelling. It entails structuring and arranging the data to best reflect the connections between distinct entities and attributes while maintaining data integrity, efficiency, and ease of retrieval. The resulting database needs to be both reliable and scalable enough to meet the specific requirements of the application.

Collaboration and communication among data modellers, database administrators, developers, and stakeholders is the crux of the data schema design process. The data structure should be in line with the needs of the company and flexible enough to adapt as the application or system changes and grows. Building a strong database system that effectively serves the organisation's data management requirements starts with a well-designed data schema.

Data Engineering in Data Science and Analytics

Data engineering has a crucial role to play when it comes to data science and analytics. Let's learn about it in detail and find out other aspects of data analytics certification courses.

Data Integration and ETL (Extract, Transform, Load) Processes

Data integration and ETL (Extract, Transform, Load) processes are central to data management and data engineering. They play a critical role in combining, cleaning, and preparing data from multiple sources to build a cohesive and useful dataset for analysis, reporting, or other applications.

Data Integration

The process of merging and harmonising data from various heterogeneous sources into a single, coherent, and unified perspective is known as data integration. Data in organisations are frequently dispersed among numerous databases, programmes, cloud services, and outside sources. By combining these various data sources, data integration strives to create a thorough and consistent picture of the organization's information.

ETL (Extract, Transform, Load) Processes

ETL is a particular method of data integration that is frequently used in applications for data warehousing and business intelligence. There are three main steps to it:

- Extract: Databases, files, APIs, and other data storage can all be used as source systems from which data is extracted.

- Transform: Data is cleaned, filtered, validated, and standardised during data transformation to ensure consistency and quality after being extracted. Calculations, data combining, and the application of business rules are all examples of transformations.

- Load: The transformed data is loaded into the desired location, which could be a data mart, a data warehouse, or another data storage repository.
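The three steps above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library: an in-memory CSV string stands in for a source system and an in-memory SQLite database stands in for the warehouse. The column names and sample values are made up for the example.

```python
import csv
import io
import sqlite3

# Extract: read rows from a source (an in-memory CSV stands in for a file or API).
raw_csv = "order_id,amount,country\n1, 19.99 ,in\n2,,IN\n3,5.00,us\n"
extracted = list(csv.DictReader(io.StringIO(raw_csv)))

# Transform: drop rows with no amount, convert types, standardise country codes.
transformed = [
    (int(row["order_id"]), float(row["amount"]), row["country"].strip().upper())
    for row in extracted
    if row["amount"].strip()
]

# Load: write the cleaned rows into the target store (SQLite stands in for a warehouse).
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE orders (order_id INTEGER, amount REAL, country TEXT)")
warehouse.executemany("INSERT INTO orders VALUES (?, ?, ?)", transformed)
warehouse.commit()

loaded = warehouse.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
```

Order 2 has no amount, so the transform step filters it out before anything reaches the target table.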

Data Warehousing and Data Lakes

Large volumes of structured and unstructured data can be stored and managed using data warehouses and data lakes. The two meet different data management needs and serve different objectives. Let's examine each idea in greater detail:

Data Warehousing

A data warehouse is a centralised, integrated database created primarily for reporting and business intelligence (BI) needs. It is a structured database designed with decision-making and analytical processing in mind. Data warehouses combine data from several operational systems and organise it into a standardised, query-friendly structure.

Data Lakes

A data lake is a storage repository that can hold large quantities of both structured and unstructured data in its original, unaltered state. Because data lakes do not enforce a rigid schema upfront, they are more adaptable than data warehouses and better suited to processing a variety of constantly changing data types.
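The difference between the two is often summarised as "schema on write" (warehouse) versus "schema on read" (lake). The sketch below is a toy illustration of that idea, not how any particular product works; the record fields and the helper names `purchases_from_lake` and `load_into_warehouse` are hypothetical.

```python
import json

# Data lake style: keep records exactly as they arrived, whatever their shape.
lake = [
    '{"user": "a1", "event": "click", "x": 10, "y": 20}',
    '{"user": "b2", "event": "purchase", "amount": 49.5}',
    '{"user": "a1", "event": "purchase", "amount": 15.0, "coupon": "NEW10"}',
]

def purchases_from_lake(raw_records):
    """Schema on read: structure is imposed only when a question is asked."""
    for line in raw_records:
        record = json.loads(line)
        if record.get("event") == "purchase":
            yield {"user": record["user"], "amount": float(record["amount"])}

# Data warehouse style: only rows that match a fixed schema are admitted.
WAREHOUSE_COLUMNS = ("user", "amount")

def load_into_warehouse(rows):
    """Schema on write: reject any row whose fields differ from the schema."""
    table = []
    for row in rows:
        if set(row) != set(WAREHOUSE_COLUMNS):
            raise ValueError(f"row does not match schema: {row}")
        table.append(tuple(row[col] for col in WAREHOUSE_COLUMNS))
    return table

purchase_table = load_into_warehouse(purchases_from_lake(lake))
```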

Data Pipelines and Workflow Automation

Workflow automation and data pipelines are essential elements of data engineering and data management. They are necessary for effectively and consistently transferring, processing, and transforming data between different systems and applications, automating tedious processes, and coordinating intricate data workflows. Let's investigate each idea in more depth:

Data Pipelines

Data pipelines are connected data processing operations that are focused on extracting, transforming and loading data from numerous sources to a database. Data pipelines move data quickly from one stage to the next while maintaining accuracy in the data structure at all times.

Workflow Automation

The use of technology to automate and streamline routine actions, procedures, or workflows in data administration, data analysis, and other domains is referred to as workflow automation. Automation increases efficiency, assures consistency, and decreases the need for manual intervention in data-related tasks.

Data Governance and Data Management

The efficient management and use of data within an organisation require both data governance and data management. They are complementary fields that work together to ensure that data is well managed, secure, and legally compliant while advancing company goals and decision-making. Let's delve deeper into each idea:

Data Governance

Data governance refers to the entire management framework and procedures that guarantee that data is managed, regulated, and applied across the organisation in a uniform, secure, and legal manner. Regulating data-related activities entails developing rules, standards, and processes for data management as well as allocating roles and responsibilities to diverse stakeholders.

Data Management

Data management puts data governance policies and principles into practice. It entails a collection of procedures, tools, and technologies designed to preserve, organise, and store data assets effectively to serve corporate requirements.

Data Cleansing and Data Preprocessing Techniques

Data preparation for data analysis, machine learning, and other data-driven tasks requires important procedures including data cleansing and preprocessing. They include methods for finding and fixing mistakes, discrepancies, and missing values in the data to assure its accuracy and acceptability for further investigation. Let's examine these ideas and some typical methods in greater detail:

Data Cleansing

Data cleansing, also called data scrubbing, is the process of locating and correcting mistakes and inconsistencies in the data. It raises overall data quality, which in turn allows the data to be analysed with greater accuracy, consistency and dependability.

Data Preprocessing

Data preprocessing covers a wider range of methods used to prepare data for analysis or machine learning tasks. In addition to data cleansing, it comprises various activities that prepare the data for specific use cases.
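To make the distinction concrete, here is a small sketch using only the Python standard library. The records are invented, and the specific choices (filling a missing value with the mean, min-max scaling) are assumptions picked as typical examples rather than steps prescribed by the text.

```python
from statistics import mean

# Hypothetical raw records with a duplicate, a missing value and inconsistent text.
raw = [
    {"city": " Mumbai", "temp_c": 31.0},
    {"city": "mumbai", "temp_c": 31.0},   # duplicate once the text is normalised
    {"city": "Delhi", "temp_c": None},    # missing value
    {"city": "Pune", "temp_c": 27.0},
]

# Cleansing: normalise text, then drop duplicates while keeping the original order.
seen, cleaned = set(), []
for rec in raw:
    key = (rec["city"].strip().title(), rec["temp_c"])
    if key not in seen:
        seen.add(key)
        cleaned.append({"city": key[0], "temp_c": key[1]})

# Cleansing: fill missing temperatures with the mean of the known ones.
known = [r["temp_c"] for r in cleaned if r["temp_c"] is not None]
fill = mean(known)
for r in cleaned:
    if r["temp_c"] is None:
        r["temp_c"] = fill

# Preprocessing: min-max scale the temperatures to the range [0, 1].
lo, hi = min(known), max(known)
for r in cleaned:
    r["temp_scaled"] = (r["temp_c"] - lo) / (hi - lo)
```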

Introduction to Machine Learning

Machine learning is a subset of artificial intelligence that enables computers to learn from data and improve their performance on particular tasks without being explicitly programmed for them. It entails developing models and algorithms that can spot patterns, make predictions, and make decisions based on the supplied data. Let's look in detail at the various aspects of machine learning, which will help you understand data analysis better.

Supervised Learning

In supervised learning, the algorithm is trained on labelled data, which means that both the input data and the desired output (target) are provided. The algorithm learns a mapping from the input features to the desired output and can then use that learned association to make predictions for new, unseen data. Common supervised tasks are classification (predicting discrete categories) and regression (predicting continuous values).
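A minimal regression example shows the idea. The sketch below fits a straight line by ordinary least squares in plain Python; the data (hours studied against exam score) is invented for illustration, and in practice a library such as Scikit-learn would usually handle the fitting.

```python
# Labelled training data: inputs (hours studied) with known outputs (exam score).
hours = [1.0, 2.0, 3.0, 4.0]
scores = [52.0, 54.0, 56.0, 58.0]

def fit_line(xs, ys):
    """Ordinary least squares for one input feature: y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x

# Training: learn the mapping from input to output.
slope, intercept = fit_line(hours, scores)

def predict(x):
    """Apply the learned mapping to a new, unseen input."""
    return slope * x + intercept

prediction = predict(5.0)
```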

Unsupervised Learning

In unsupervised learning, the algorithm is trained on unlabeled data, which means that the input data does not have corresponding output labels or targets. Finding patterns, structures, or correlations in the data without explicit direction is the aim of unsupervised learning. The approach is helpful for applications like clustering, dimensionality reduction, and anomaly detection since it tries to group similar data points or find underlying patterns and representations in the data.
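Clustering is a convenient way to see this in action. The following is a bare-bones sketch of k-means on one-dimensional data, written in plain Python; the points and the starting centroids are arbitrary values chosen for the example, and no labels are supplied at any stage.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Plain k-means on numbers: assign each point to its nearest centroid,
    then move each centroid to the mean of its assigned points."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # An empty cluster keeps its previous centroid.
        centroids = [
            sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)
        ]
    return centroids, clusters

points = [1.0, 1.5, 2.0, 10.0, 10.5, 11.0]
centroids, clusters = kmeans_1d(points, centroids=[0.0, 5.0])
```

The algorithm discovers the two groups purely from how close the values are to one another.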

Semi-Supervised Learning

Semi-supervised learning is a type of machine learning that combines aspects of supervised and unsupervised learning. The algorithm is trained on a dataset that contains both labelled data (inputs with their corresponding outputs) and unlabelled data (inputs without corresponding outputs).

Reinforcement Learning

Reinforcement learning is a type of machine learning that teaches an agent to make decisions by interacting with its environment. In response to the actions it takes in the environment, the agent receives feedback in the form of rewards or penalties. The aim of reinforcement learning is to learn the course of action, or policy, that maximises the cumulative reward over time.
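One well-known way to learn such a strategy is tabular Q-learning. The sketch below applies it to a made-up environment: five positions in a row, with a reward for reaching the right-hand end. The environment, the learning rate and the discount factor are all illustrative assumptions, and for simplicity the agent explores by choosing actions at random while it learns.

```python
import random

random.seed(0)  # fixed seed so the run is repeatable

N_STATES, GOAL = 5, 4          # states 0..4 in a row; reaching state 4 pays a reward
ACTIONS = (-1, +1)             # step left or step right
ALPHA, GAMMA = 0.5, 0.9        # learning rate and discount factor (illustrative values)

# Q[state][action_index] estimates the long-run reward of taking that action.
Q = [[0.0, 0.0] for _ in range(N_STATES)]

for episode in range(500):
    state = 0
    for step in range(100):                # cap on steps per episode
        a = random.randrange(2)            # explore with random actions
        next_state = min(max(state + ACTIONS[a], 0), GOAL)
        reward = 1.0 if next_state == GOAL else 0.0
        future = 0.0 if next_state == GOAL else max(Q[next_state])
        # Q-learning update: nudge the estimate towards reward + discounted future value.
        Q[state][a] += ALPHA * (reward + GAMMA * future - Q[state][a])
        state = next_state
        if state == GOAL:
            break

# The learned policy picks the highest-valued action in each non-goal state.
policy = ["left" if q[0] > q[1] else "right" for q in Q[:GOAL]]
```

After training, the policy heads right from every position, which is the shortest route to the reward.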

Machine Learning in Data Science and Analytics

Predictive Analytics and Forecasting

Predictive analytics and forecasting, which estimate future events from past data, play a crucial role in data analysis and decision-making. Businesses and organisations can use them to make data-driven choices, plan for the future, and streamline operations. By combining historical data with modern analytics approaches, they can gain insight and anticipate trends, which can boost productivity and competitiveness.
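One of the simplest forecasting techniques is a moving average, which predicts the next value from the mean of the most recent observations. The sketch below is only a baseline illustration; the sales figures and the window size are invented.

```python
def moving_average_forecast(history, window=3):
    """Forecast the next value as the mean of the last `window` observations."""
    recent = history[-window:]
    return sum(recent) / len(recent)

# Hypothetical monthly sales figures, oldest first.
monthly_sales = [120, 130, 125, 140, 150, 145]
next_month = moving_average_forecast(monthly_sales)
```

Real forecasting models usually also account for trend and seasonality, which a plain moving average ignores.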

Recommender Systems

A recommender system is a kind of information filtering system that makes personalised suggestions to users for things they might find interesting, such as goods, movies, music, books, or articles. These systems are frequently employed on e-commerce websites and other online platforms to improve customer satisfaction, user experience, and engagement.
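A common starting point is user-based collaborative filtering: find users with similar tastes and suggest what they liked. The sketch below is a toy version in plain Python; the users, items and ratings are invented, and cosine similarity is one of several similarity measures that could be used.

```python
from math import sqrt

# Hypothetical ratings: user -> {item: rating on a 1-5 scale}.
ratings = {
    "ana":  {"film_a": 5, "film_b": 4, "film_c": 1},
    "ben":  {"film_a": 5, "film_b": 5, "film_d": 4},
    "cara": {"film_c": 5, "film_e": 4},
}

def cosine(u, v):
    """Cosine similarity between two sparse rating dictionaries."""
    shared = set(u) & set(v)
    dot = sum(u[i] * v[i] for i in shared)
    norm = sqrt(sum(x * x for x in u.values())) * sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0

def recommend(user, k=1):
    """Score unseen items by the similarity-weighted ratings of other users."""
    scores = {}
    for other, their in ratings.items():
        if other == user:
            continue
        sim = cosine(ratings[user], their)
        for item, rating in their.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)[:k]

suggestion = recommend("ana")
```

Ana's ratings resemble Ben's far more than Cara's, so the film Ben rated highly that Ana has not seen comes out on top.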

Anomaly Detection

Anomaly detection is a method used in data analysis to find outliers or odd patterns in a dataset that deviate from expected behaviour. It is useful for identifying fraud, errors, or anomalies in a variety of fields, including cybersecurity, manufacturing, and finance since it entails identifying data points that dramatically diverge from the majority of the data.
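A simple statistical approach is to flag values that lie many standard deviations from the mean (a z-score test). The sketch below uses Python's `statistics` module; the transaction amounts and the threshold of 2.0 are illustrative assumptions, and a suitable threshold depends on the data and the sample size.

```python
from statistics import mean, stdev

def find_anomalies(values, threshold=2.0):
    """Flag values whose z-score (distance from the mean in standard
    deviations) exceeds the threshold."""
    mu, sigma = mean(values), stdev(values)
    return [v for v in values if abs(v - mu) / sigma > threshold]

# Hypothetical transaction amounts with one obviously unusual payment.
transaction_amounts = [52, 48, 50, 51, 49, 47, 53, 50, 500]
anomalies = find_anomalies(transaction_amounts)
```

Because a large outlier also inflates the mean and standard deviation, production systems often prefer more robust measures such as the median and interquartile range.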

Natural Language Processing (NLP) Applications

Data science relies on Natural Language Processing (NLP), which enables machines to comprehend and process human language. NLP is applied to a variety of text sources to extract useful information and support decision-making. It is essential for revealing the insights hidden inside unstructured text, allowing data scientists to use the large volumes of textual information available in the digital age to better understand human behaviour.
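Many NLP workflows begin by breaking text into tokens and counting them. The sketch below does this with the standard library only; the reviews and the tiny stop-word list are invented, and libraries such as NLTK or spaCy provide far more complete tokenisers and stop-word lists.

```python
import re
from collections import Counter

STOP_WORDS = {"the", "is", "and", "was", "but", "a", "it"}  # tiny illustrative list

def tokenize(text):
    """Lower-case the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def top_terms(reviews, n=3):
    """Count non-stop-word tokens across all reviews and return the most common."""
    counts = Counter(
        token
        for review in reviews
        for token in tokenize(review)
        if token not in STOP_WORDS
    )
    return counts.most_common(n)

# Hypothetical customer reviews.
reviews = [
    "The delivery was late and the driver was rude.",
    "Late delivery, but the food was great!",
    "Great app. Delivery is fast.",
]
terms = top_terms(reviews)
```

Even this crude count surfaces "delivery" as the topic customers mention most.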

Machine Learning Tools and Frameworks

Python Libraries (e.g., Scikit-learn, TensorFlow, PyTorch)

Scikit-learn for general machine learning applications, TensorFlow and PyTorch for deep learning, XGBoost and LightGBM for gradient boosting, and NLTK and spaCy for natural language processing are just a few of the machine learning libraries available in Python. These libraries offer strong frameworks and tools for rapidly creating, testing, and deploying machine learning models.

R Libraries for Data Modelling and Machine Learning

R, a popular programming language for data science, provides a variety of libraries for data modelling and machine learning. Some key libraries include caret for general machine learning, randomForest and xgboost for ensemble methods, glmnet for regularised linear models, and nnet for neural networks. These libraries offer a wide range of functionality to support data analysis, model training, and predictive modelling tasks in R.

Big Data Technologies (e.g., Hadoop, Spark) for Large-Scale Machine Learning

Hadoop and Spark are among the main big data technologies for large-scale data processing. They provide a platform for large-scale machine learning tasks such as batch processing and distributed model training, allowing scalable and efficient handling of enormous data sets. They also offer parallel processing, fault tolerance and distributed computing.

AutoML (Automated Machine Learning) Tools

AutoML automates various steps of the machine learning workflow, such as feature engineering and data preprocessing. These tools simplify machine learning and make it accessible to users with limited expertise. They also accelerate model development while aiming for competitive performance.

Case Studies and Real-World Applications

Successful Data Modelling and Machine Learning Projects

Netflix: Netflix employs a sophisticated data modelling technique that helps to power the recommendation systems. It shows personalised content to users by analysing their behaviours regarding viewing history, preferences and other aspects. This not only improves user engagement but also customer retention.

PayPal: PayPal uses successful data modelling techniques to detect fraudulent transactions. They analyse the transaction patterns through user behaviour and historical data to identify suspicious activities. This protects both the customer and the company.

Impact of Data Engineering and Machine Learning on Business Decisions

Amazon: By combining data engineering with machine learning, businesses such as Amazon can draw on customer data to understand shopping behaviour and needs. This enables personalised recommendations that lead to higher customer satisfaction and loyalty.

Uber: Uber employs NLP techniques to monitor and analyse customer feedback. It pays close attention to the reviews customers provide, which helps it understand brand perception and address customer concerns.

Conclusion

Data modelling, data engineering and machine learning go hand in hand when it comes to handling data. Without proper data science training, data interpretation becomes cumbersome and can also prove futile.

If you are looking for a data science course in India check out Imarticus Learning's Postgraduate Programme in Data Science and Analytics. This programme is crucial if you are looking for a data science online course which would help you get lucrative interview opportunities once you finish the course. You will be guaranteed a 52% salary hike and learn about data science and analytics with 25+ projects and 10+ tools.

To know more about courses such as the business analytics course or any other data science course, check out the website right away! You can learn in detail about how to have a career in Data Science along with various Data Analytics courses.