In the era of algorithms, data science skills and tools are the pillars of every contemporary business decision. From suggesting products on e-commerce websites to predicting financial trends, data science has emerged as the driving force behind smart decisions.

For anyone looking to join this fast-paced industry, it’s not enough to know the buzzwords. You will need to build a strong set of skills and learn a toolset that will enable you not only to land the job but to excel at it.

This blog post deconstructs the critical data science skills, must-learn data science technologies and the leading data analyst tools you need to become proficient with to succeed in the modern workforce.

Why Data Science Became Mission-Critical

Each click, swipe, and tap creates data. What’s done with it all?

Companies that harness data are achieving faster growth, improved customer retention, and a better product-market fit. According to McKinsey & Company, data-driven businesses are 23 times more likely to acquire customers and 19 times more likely to be profitable.

This is not only a global trend; India is racing ahead too. According to NASSCOM, India will require more than 1.5 million data professionals by 2026.

Key Data Science Skills You Need to Master

A data analyst’s skillset is equal parts logic, creativity, and business instinct. Here’s what you need to be industry-ready.

1. Statistical Thinking and Analytical Mindset

Statistics is at the core of data analysis.

Ideas such as distributions, sampling, p-values, and hypothesis testing enable analysts to meaningfully interpret patterns.

It is important to understand the difference between correlation and causation, and to be comfortable with regression models and inferential statistics.

✅ Pro Tip: Establish a solid foundation with Python libraries such as statsmodels and SciPy.
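To make hypothesis testing concrete, here is a minimal sketch using SciPy's `ttest_ind`. The page-load times and the two "versions" are invented for illustration, not real measurements.

```python
from scipy import stats

# Hypothetical page-load times (seconds) for two site versions; invented data.
version_a = [2.1, 2.4, 2.2, 2.5, 2.3, 2.6, 2.4, 2.2]
version_b = [1.8, 1.9, 2.0, 1.7, 1.9, 2.1, 1.8, 2.0]

# Welch's t-test: does not assume the two groups share the same variance.
result = stats.ttest_ind(version_a, version_b, equal_var=False)

# A small p-value suggests the difference in means is unlikely to be chance alone.
is_significant = result.pvalue < 0.05
print(f"t = {result.statistic:.2f}, p = {result.pvalue:.4f}, significant: {is_significant}")
```

The 0.05 threshold is a common convention, not a rule. A significant result here would only say the two groups differ, not why they differ.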

2. Programming Languages for Data Science

There’s no way around coding. It’s your interface to data.

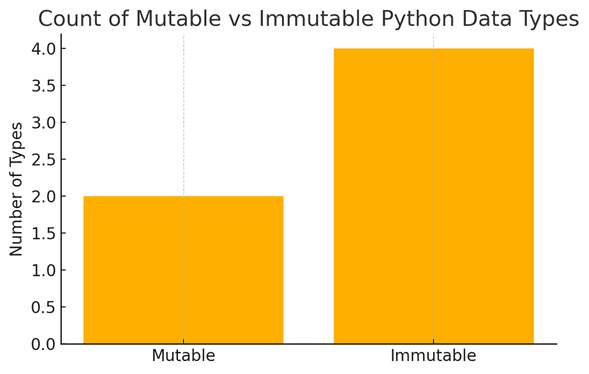

Python: The most general-purpose option, used for data cleaning, analysis, machine learning, and deep learning.

R: Best for academic research, advanced statistical modelling, and visualisations.

SQL: Still the most requested language for querying databases.

Also worth knowing: Julia (for high-performance computing), Scala (for Big Data), and Excel (for smaller datasets).

85% of data professionals use Python and 74% use SQL according to the Kaggle State of Data Science Report.
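For a feel of how Python and SQL work together, the sketch below runs a typical aggregation query through Python's built-in `sqlite3` module. The `orders` table, its columns, and its rows are hypothetical and exist only for this example.

```python
import sqlite3

# In-memory database with a hypothetical `orders` table (invented rows).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("asha", 120.0), ("ravi", 80.0), ("asha", 60.0), ("meera", 200.0)],
)

# Total spend per customer, highest first.
rows = conn.execute(
    "SELECT customer, SUM(amount) AS total "
    "FROM orders GROUP BY customer ORDER BY total DESC"
).fetchall()
conn.close()

for customer, total in rows:
    print(customer, total)
```

The same `GROUP BY` pattern carries over to production databases, although connection details and SQL dialects will differ.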

3. Data Cleaning and Wrangling

Raw data is not tidy. Mistakes, duplicates, missing values, typos—yep, that’s the real world.

Your data wrangling and pre-processing skills make you stand out. This includes:

- Dealing with null values

- Standardising formats

- Removing outliers

- Parsing strings and dates

Tools: Pandas, NumPy, OpenRefine
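The steps listed above can be sketched in a few lines of Pandas. The DataFrame below is invented for illustration, and the median fill and 1.5 × IQR outlier rule are just one reasonable choice among many; the right approach depends on the dataset.

```python
import pandas as pd

# Hypothetical raw records with typical problems: inconsistent casing, stray
# whitespace, a missing value, a duplicate row, and an implausible outlier.
raw = pd.DataFrame({
    "city": [" Mumbai", "mumbai", "Delhi ", "Delhi ", "Pune", "Pune"],
    "signup": ["2024-01-05", "2024-01-09", "2024-02-11",
               "2024-02-11", "2024-03-02", "2024-03-20"],
    "spend": [250.0, None, 310.0, 310.0, 275.0, 99999.0],
})

clean = raw.copy()

# Standardise formats: trim whitespace and use consistent capitalisation.
clean["city"] = clean["city"].str.strip().str.title()

# Parse date strings into proper datetime values.
clean["signup"] = pd.to_datetime(clean["signup"], format="%Y-%m-%d")

# Drop exact duplicate rows.
clean = clean.drop_duplicates()

# Deal with null values: fill missing spend with the (outlier-robust) median.
clean["spend"] = clean["spend"].fillna(clean["spend"].median())

# Remove outliers: keep rows within 1.5 * IQR of the quartiles.
q1, q3 = clean["spend"].quantile([0.25, 0.75])
iqr = q3 - q1
clean = clean[clean["spend"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)]
clean = clean.reset_index(drop=True)

print(clean)
```

Working on a copy keeps the raw data untouched, which makes it easier to audit what each cleaning step changed.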

4. Data Visualisation Techniques

Raw data doesn’t talk—but pictures do.

Data storytelling is an essential soft skill for data analysts. You need to know:

- Which chart type is best for which data type

- How to prevent distortion or misrepresentation

- How to effectively use colour, scale, and layout

Tools: Tableau, Power BI, Seaborn, Plotly, Matplotlib

Effective visualisation is what tends to win data buy-in from decision-makers.

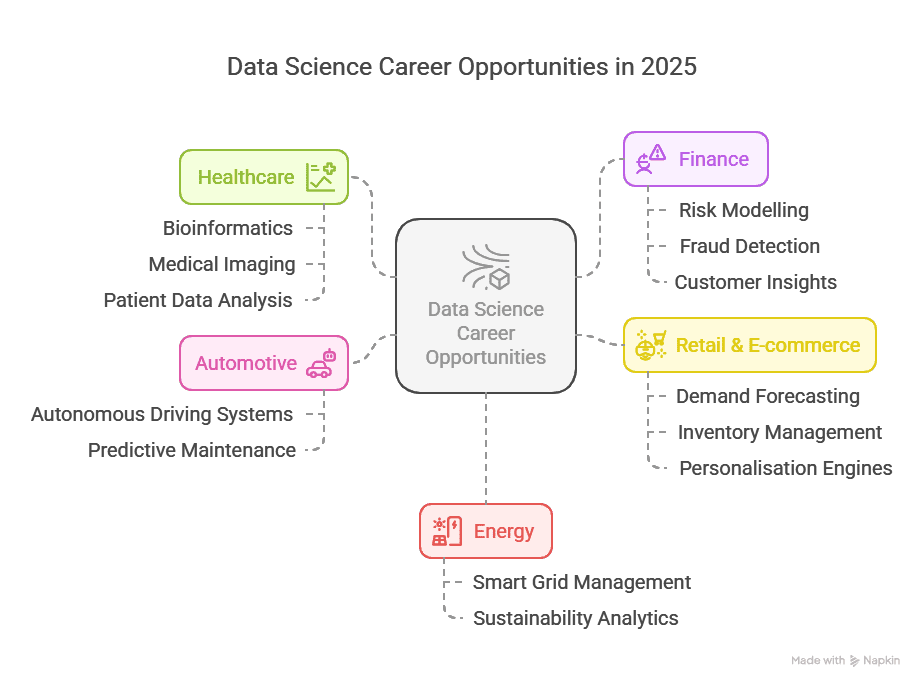

5. Machine Learning and AI Tools

For anyone moving from descriptive analytics into predictive and prescriptive models, machine learning is a must.

Key concepts include:

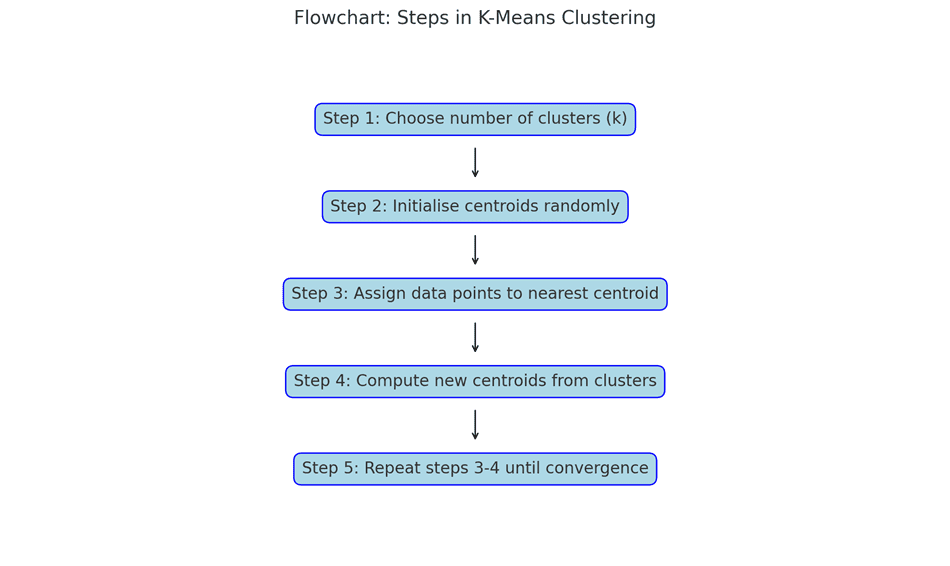

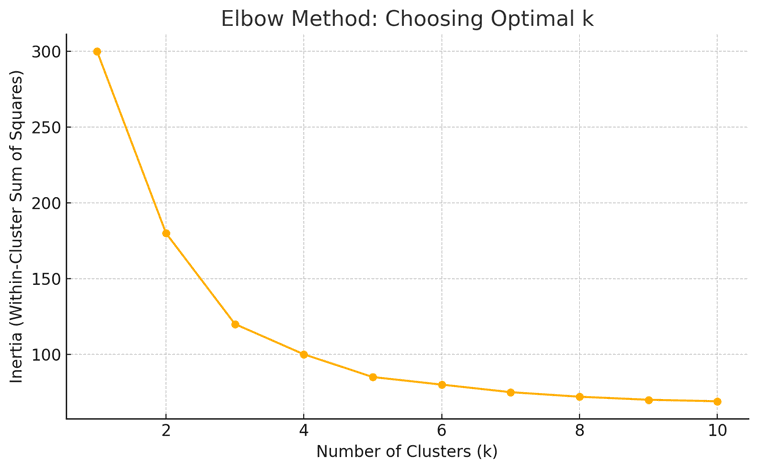

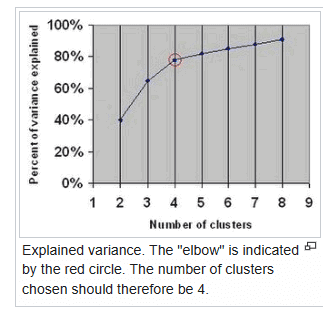

- Supervised vs. unsupervised learning

- Decision trees, random forests, logistic regression

- Model accuracy, precision, recall, F1-score

- Cross-validation, hyperparameter tuning

Well-known tools: Scikit-learn, TensorFlow, Keras, XGBoost
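To show what the evaluation metrics above actually measure, here is a small sketch that computes them by hand in plain Python. The labels and predictions are invented; in practice, Scikit-learn's `sklearn.metrics` module provides equivalent functions.

```python
# Hypothetical true labels and model predictions (1 = positive class); invented data.
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1, 1, 0]

pairs = list(zip(y_true, y_pred))

# Confusion-matrix counts.
tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives

accuracy = (tp + tn) / len(pairs)    # share of all predictions that are correct
precision = tp / (tp + fp)           # of predicted positives, how many are real
recall = tp / (tp + fn)              # of real positives, how many were found
f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of the two

print(f"accuracy={accuracy:.2f} precision={precision:.2f} "
      f"recall={recall:.2f} f1={f1:.2f}")
```

Precision and recall often pull in opposite directions, which is why the F1-score is useful when false positives and false negatives both matter.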

Bonus: Knowing how Large Language Models (LLMs) such as ChatGPT work is becoming increasingly valuable.

6. Data Ethics and Privacy Consciousness

With great power comes great responsibility.

As a data analyst, you are required to:

- Be aware of GDPR, data masking, and anonymisation

- Understand model bias and how it affects fairness

- Ensure transparency and auditability in data pipelines

Learn more from the OECD’s AI Principles and India’s Digital Personal Data Protection Act.
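As a rough illustration of data masking, the sketch below uses only Python's standard library. The helper names (`pseudonymise`, `mask_email`), the secret key, and the sample record are all hypothetical. Note that keyed hashing is pseudonymisation rather than full anonymisation, and this is not a compliance recipe.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice it would come from a secrets manager,
# never from source code.
SECRET_KEY = b"replace-with-a-real-secret"


def pseudonymise(value: str) -> str:
    """Replace an identifier with a keyed hash so records can still be joined."""
    digest = hmac.new(SECRET_KEY, value.lower().encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[0]}{'*' * (len(local) - 1)}@{domain}"


# Invented record for illustration.
record = {"email": "priya.sharma@example.com", "spend": 420}

safe_record = {
    "user_key": pseudonymise(record["email"]),
    "email_masked": mask_email(record["email"]),
    "spend": record["spend"],
}
print(safe_record)
```

The keyed hash gives the same output for the same input, so analysts can still count or join on users without seeing the raw email address.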

7. Big Data and Cloud Tools

For very large datasets, local tools won’t do.

- Learn how to use distributed computing tools such as Apache Spark and Hadoop

- Understand cloud environments such as AWS, Google Cloud Platform, and Azure

- Know how to deploy models into production environments using container tools such as Docker

These are essentials for large businesses and tech-first companies.

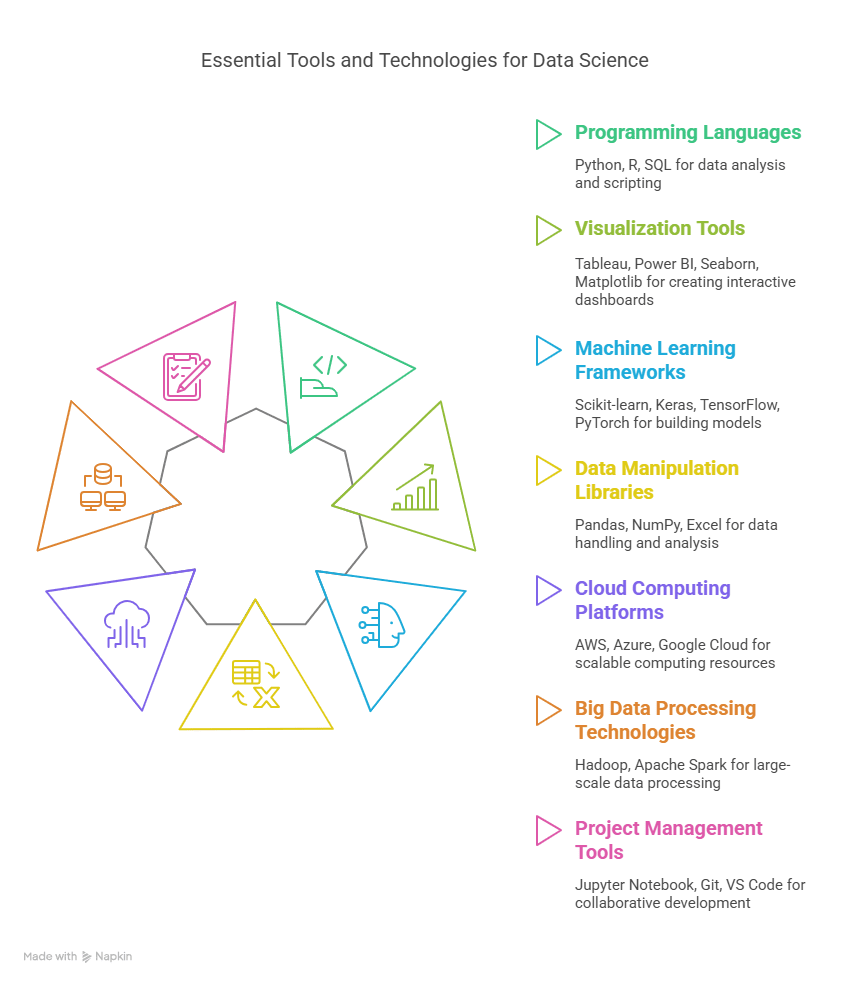

Top Tools Every Data Analyst Should Know

| Category | Tools |
| --- | --- |
| Programming Languages | Python, R, SQL |
| Visualisation | Tableau, Power BI, Seaborn, Matplotlib |
| Machine Learning | Scikit-learn, Keras, TensorFlow, PyTorch |
| Data Manipulation | Pandas, NumPy, Excel |
| Cloud Computing | AWS, Azure, Google Cloud |
| Big Data Processing | Hadoop, Apache Spark |
| Development and Version Control | Jupyter Notebook, Git, VS Code |

Continuous practice on environments such as Kaggle and Google Colab will solidify tool proficiency.

Real-World Learning > Theoretical Learning

This is where most students struggle: they become familiar with the tools but lack the practical context to apply them.

Imarticus Learning’s Postgraduate Program in Data Science and Analytics provides:

- 25+ actual industry projects

- 100+ hours of hands-on coding

- Tools such as Tableau, Python, SQL, Power BI, and Scikit-learn

- Specialisations in AI, Data Visualisation, and Big Data

- Career guidance with 2000+ hiring partners and job-guaranteed results

It’s a 360° makeover, not just a certification.

FAQs

1. What are the essential data science skills?

Key skills are statistics, data cleaning, Python/R, machine learning, and data visualisation.

2. Which programming language will be most beneficial for data science?

Python is the most useful, followed by R and SQL.

3. Is machine learning necessary to be a data analyst?

It isn’t required, but it helps for senior positions and for progressing into data science.

4. Which software is used for data visualisation?

Industry favourites include Tableau, Power BI, Seaborn, and Matplotlib.

5. Is a technology background necessary to work in data analytics?

No. Many successful analysts come from a commerce, science, or humanities background.

6. How long does it typically take to become job-ready?

With structured learning, 6 to 9 months is possible.

7. Do beginners need cloud computing?

Not at first, but cloud computing skills provide you with a significant advantage in enterprise roles.

8. Why is data ethics important to analysts?

It helps maintain transparency, fairness, and privacy in analysis, which is an increasing industry expectation.

9. What’s the most underappreciated skill in data science?

Communication. Your work only has value if you can explain it clearly to the people who need to act on it.

10. Is a certification sufficient to be hired?

Certifications are helpful, but project experience, mastery of tools, and interview preparation are more important.

Conclusion: Your Future Depends on Skill, Not Buzzwords

You can’t “wing” data science. Businesses are looking for analysts who can bring clarity to complexity, and that takes thinking, not just tools.

The field is evolving rapidly. Software is becoming more intelligent, datasets are becoming larger, and hiring managers want job-ready professionals, not just course certificates.

Key Takeaways

- Build a core toolkit: Python, SQL, Tableau, and Scikit-learn are the foundation of any analyst’s arsenal

- Get hands-on: Projects, case studies, and real datasets are more important than theory

- Stay updated: AI integration, ethical data usage, and cloud deployment are quickly becoming table stakes

Your Next Move: Become a Confident Data Analyst

Don’t spend time figuring it out on your own. Learn from the experts and work on real-world challenges that reflect industry requirements.

Visit the Postgraduate Program in Data Science & Analytics by Imarticus Learning

Let your data journey begin—with structure, purpose, and confidence.