Are you looking to learn a new skill that can give you an edge over your competition? If so, consider learning computer vision with Python. Python has become increasingly popular in recent years and, thanks to its mature image-processing and machine learning libraries, is well suited to tackling complex computer vision tasks.

This blog post explains what computer vision is and how to start learning it with Python. We will also provide a few resources to get you started!

According to the World Economic Forum, nearly half of all jobs could be replaced by automation within the next 20 years. To stay relevant in this rapidly changing world, we must learn new skills that help us adapt and succeed.

One such skill is computer vision, which lets you teach computers to see as humans do! It’s an excellent way to stand out from the crowd, and you can apply it in industries such as security, manufacturing, healthcare, and more.

What is computer vision?

Computer vision is a field of artificial intelligence (AI) that trains machines to understand the content of digital images and videos. It relies on algorithms, machine learning techniques, and deep learning networks to perform tasks such as identifying and locating objects in an image or video frame.
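To a program, a digital image is simply an array of numbers. The sketch below assumes NumPy and uses a hypothetical helper, `locate_bright_object`, to show this: it treats a grayscale image as a 2D array of pixel intensities (0 = black, 255 = white) and applies a deliberately naive rule, thresholding, to find where a bright object sits. Real computer vision systems replace this hand-written rule with learned models, but they start from the same kind of array.

```python
import numpy as np


def locate_bright_object(image, threshold=128):
    """Hypothetical helper: return the bounding box (row_min, col_min, row_max, col_max)
    of all pixels brighter than `threshold`, or None if no pixel qualifies."""
    mask = image > threshold      # boolean array: True where the pixel counts as "bright"
    coords = np.argwhere(mask)    # (row, col) index of every bright pixel
    if coords.size == 0:
        return None
    (r0, c0), (r1, c1) = coords.min(axis=0), coords.max(axis=0)
    return int(r0), int(c0), int(r1), int(c1)


# A 10x10 grayscale "image": dark background (0) with a bright 3x4 patch (255).
image = np.zeros((10, 10), dtype=np.uint8)
image[2:5, 6:10] = 255

print(image.shape, image.dtype)     # (10, 10) uint8
print(locate_bright_object(image))  # (2, 6, 4, 9)
```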

Python makes it possible to build working computer vision programs quickly, without deep knowledge of the underlying algorithms or models, because libraries such as the ones below package those algorithms behind simple function calls.

Tips to get started with computer vision in Python

There are many different ways to get started with computer vision in Python.

OpenCV library:

The OpenCV library is a popular choice for working with computer vision in Python. It provides a wide range of functions that allow you to efficiently perform tasks such as object detection and feature extraction from images or video streams.

Scikit-learn library:

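Scikit-learn is a popular general-purpose machine learning library. It does not process images directly, but its classification, regression, and clustering algorithms can be applied to image data once each image is turned into a vector of numbers (for example, its pixel values), which makes it useful for tasks such as image classification.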
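The sketch below shows the typical scikit-learn workflow for image classification: flatten each image into a feature vector, split the data, fit a classifier, and score it. It uses the small handwritten-digits dataset that ships with scikit-learn (`load_digits`), so nothing needs to be downloaded; the choice of a support vector classifier with `gamma=0.001` is illustrative, not tuned.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# 1,797 grayscale 8x8 images of handwritten digits, bundled with scikit-learn.
digits = load_digits()
X = digits.images.reshape(len(digits.images), -1)  # flatten each 8x8 image into 64 features
y = digits.target                                  # digit labels 0-9

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

clf = SVC(gamma=0.001)  # RBF-kernel support vector classifier
clf.fit(X_train, y_train)

accuracy = clf.score(X_test, y_test)  # fraction of test images classified correctly
print(f"Test accuracy: {accuracy:.3f}")
```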

Keras library:

The Keras library is a popular choice for deep learning in Python. It provides a high-level neural network API that makes it easy to build and train deep learning models, such as the convolutional neural networks (CNNs) commonly used for image tasks.
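Here is a minimal sketch of a Keras convolutional neural network for small grayscale images, such as 28x28 handwritten digits. It assumes TensorFlow 2.x is installed (Keras is accessed as `tensorflow.keras`); the layer sizes and the `build_small_cnn` name are illustrative choices, not a recommended architecture.

```python
import numpy as np
from tensorflow import keras


def build_small_cnn(input_shape=(28, 28, 1), num_classes=10):
    """Hypothetical helper: a minimal convolutional network for small grayscale images."""
    model = keras.Sequential([
        keras.Input(shape=input_shape),
        keras.layers.Conv2D(16, kernel_size=3, activation="relu"),  # learns local patterns such as edges
        keras.layers.MaxPooling2D(pool_size=2),                      # halves the spatial size
        keras.layers.Conv2D(32, kernel_size=3, activation="relu"),
        keras.layers.MaxPooling2D(pool_size=2),
        keras.layers.Flatten(),
        keras.layers.Dense(num_classes, activation="softmax"),       # one probability per class
    ])
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",  # expects integer class labels
                  metrics=["accuracy"])
    return model


model = build_small_cnn()
model.summary()

# Shape check on a dummy batch of two blank images (untrained, so the probabilities are meaningless).
probs = model.predict(np.zeros((2, 28, 28, 1), dtype="float32"), verbose=0)
print(probs.shape)  # (2, 10)
```

To train it, you would call `model.fit(x_train, y_train, epochs=...)` on an image array of shape `(n, 28, 28, 1)` with integer labels.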

TensorFlow library:

TensorFlow is an open-source machine learning framework developed by Google. Its Python API provides tools for building and training neural networks, and it includes Keras as its high-level interface (tf.keras).
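Keras's `fit` hides the training loop, but TensorFlow also lets you write it yourself. The sketch below, assuming TensorFlow 2.x, shows one optimization step with `tf.GradientTape`: a forward pass, the loss, the gradients of the loss with respect to the weights, and an optimizer update. The toy data, the tiny linear model, and the `train_step` name are hypothetical placeholders; in a real project you would substitute your own images and network.

```python
import tensorflow as tf


def train_step(model, optimizer, images, labels):
    """One manual optimization step: forward pass, loss, gradients, weight update."""
    with tf.GradientTape() as tape:
        logits = model(images, training=True)
        loss = tf.reduce_mean(
            tf.keras.losses.sparse_categorical_crossentropy(labels, logits, from_logits=True)
        )
    grads = tape.gradient(loss, model.trainable_variables)  # d(loss) / d(each weight)
    optimizer.apply_gradients(zip(grads, model.trainable_variables))
    return float(loss)


tf.random.set_seed(0)
# Hypothetical toy data: 8 random 8x8 grayscale "images" with random labels 0-9.
images = tf.random.uniform((8, 8, 8, 1))
labels = tf.random.uniform((8,), minval=0, maxval=10, dtype=tf.int32)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(8, 8, 1)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(10),  # raw logits; the softmax is folded into the loss
])
optimizer = tf.keras.optimizers.Adam(learning_rate=0.01)

losses = [train_step(model, optimizer, images, labels) for _ in range(50)]
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```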

Matplotlib library:

Matplotlib is not a computer vision library itself, but it is a popular companion to one. It provides a high-level API for creating charts and graphs and for displaying images, which makes it useful for inspecting input images, intermediate results, and model outputs.
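For example, a common way to inspect an image during a computer vision project is to plot it next to its histogram of pixel intensities. The sketch below assumes Matplotlib and NumPy; `save_image_with_histogram` is a hypothetical helper, and the script uses the non-interactive `Agg` backend and writes a PNG to the system's temporary directory so it runs without a display.

```python
import os
import tempfile

import matplotlib
matplotlib.use("Agg")  # non-interactive backend: render to files, no display needed
import matplotlib.pyplot as plt
import numpy as np


def save_image_with_histogram(image, path):
    """Save a figure showing a grayscale image next to its pixel-intensity histogram."""
    fig, (ax_img, ax_hist) = plt.subplots(1, 2, figsize=(8, 4))
    ax_img.imshow(image, cmap="gray", vmin=0, vmax=255)
    ax_img.set_title("Image")
    ax_img.axis("off")
    ax_hist.hist(image.ravel(), bins=32, range=(0, 255))
    ax_hist.set_xlabel("Pixel intensity")
    ax_hist.set_ylabel("Number of pixels")
    fig.tight_layout()
    fig.savefig(path)
    plt.close(fig)  # release the figure's memory


# Synthetic horizontal gradient: intensity rises from 0 (left) to 255 (right).
image = np.tile(np.linspace(0, 255, 128).astype(np.uint8), (64, 1))
out_path = os.path.join(tempfile.gettempdir(), "image_and_histogram.png")
save_image_with_histogram(image, out_path)
print(f"Saved {out_path}")
```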

Discover the AIML Course with Imarticus Learning

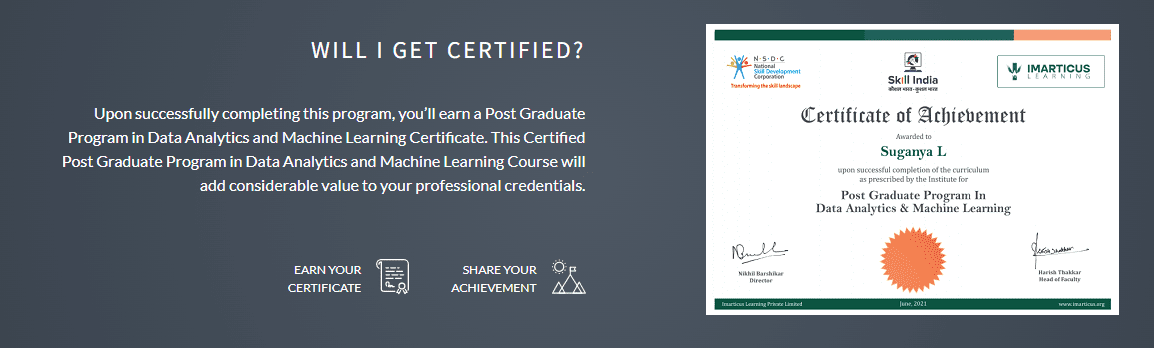

The Artificial Intelligence and Machine Learning certification is developed in collaboration with industry professionals to deliver the best possible learning experience for aspiring AIML students.

This intensive Python certification prepares students for roles such as Data Scientist, Data Analyst, Machine Learning Engineer, and AI Engineer.

Course Benefits For Learners:

- This Supervised Learning course will help students strengthen their foundational Artificial Intelligence skills.

- Students can take advantage of our Expert Mentorship program to learn AIML in a practical setting.

- Impress employers and showcase their AI skills with a Supervised Learning certification backed by India’s most famous academic collaborations.

- This course will help students gain access to attractive professional prospects in Artificial Intelligence and Machine Learning.

While there is no one-size-fits-all solution, several factors will determine your performance as a data scientist, including:
