If you’ve ever worked with numbers, even briefly, you’ve probably used Excel for data analysis. And if you’ve ever thought, “I should really be better at this,” you’re not alone.

Excel is one of those skills that quietly sits behind countless business decisions. Excel might not have the shine of the newest analytics tools, but don’t be fooled – it’s one of the most powerful, practical, and relevant tools you can learn.

Before data reaches those sleek dashboards, machine learning models, or modern BI platforms, it almost always passes through one place first – Excel.

As the demand for data skills continues to grow, many learners are turning to structured programs like the Data Science & Analytics Course, where Excel is taught from the ground up – not just as a spreadsheet tool, but as a powerful platform for real-world data analysis.

In this guide, I’ll walk you through everything you should know about Excel for Data Analysis, from organising data in Excel to advanced formulas, real-world applications, time-saving tricks, and even how professional training programs can boost your analytics career.

Quick fact:

According to multiple industry surveys, Excel remains one of the top three most-used tools for data analysis and reporting in business environments, especially in finance, operations, and marketing roles.

Why Excel for Data Analysis Still Matters

You might be wondering: in a world of Python, SQL, and business intelligence tools, is Excel still relevant?

The short answer: absolutely.

Excel is universal. Almost every company, regardless of size or industry, uses Excel in some form. Even organisations with data scientists and dedicated analytics platforms rarely send datasets straight into those systems.

In most real-world workflows, data is first cleaned, checked, explored, and validated in Excel. That’s where initial insights are formed and early decisions are made.

Here’s why Excel for data analysis continues to dominate:

- It’s widely available and easy to learn, even for non-technical users.

- It supports quick exploratory analysis without setup or coding.

- It integrates with databases, dashboards, and reporting tools.

- It fits into real business workflows – from finance and marketing to operations.

- It handles both simple and advanced analysis in the same environment, without needing programming.

For beginners, Excel builds analytical thinking step by step – from basic summaries to complex models – and lets you communicate insights in an intuitive format. You don’t start by making complex dashboards. You start by understanding numbers. Then you begin to spot patterns. And eventually, you learn how to turn those patterns into better decisions. That’s how Excel grows with you.

For professionals, advanced Excel techniques do more than just look impressive – they save time, improve accuracy, and cut down the kind of manual work that slows you down every day.

How to Organise Data in Excel for Analysis

Before formulas or dashboards, data organisation is critical. Poorly structured data leads to incorrect analysis, no matter how advanced your formulas are.

Best Practices to Organise Data

If you’re learning how to organise data in Excel for analysis, follow these rules:

- One column = one variable – Each column should represent exactly one variable. Example: Date, Product Name, Revenue, and Region should each have their own column.

- No merged cells – Merged cells break formulas, pivot tables, and automation.

- Consistent data types – Dates as dates, numbers as numbers, text as text. Mixing formats may look fine visually, but it causes problems when you apply Excel functions for data analysis.

- Headers in the first row – Clear column headers are essential for analysis.

- No blank rows or columns – Especially in the middle of your dataset.

This structured approach ensures Excel functions for data analysis work correctly and efficiently.
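The rules above can also be checked mechanically. The following is a minimal Python sketch, not an Excel feature: it assumes the sheet has already been read into a list of rows with the header first, and the function name `check_table` and the sample values are made up for illustration. It flags missing headers, fully blank rows, and columns that mix data types.

```python
from datetime import date


def check_table(rows):
    """Return a list of structural problems in a table (header row first)."""
    problems = []
    header, *body = rows

    # Rule: clear headers in the first row.
    for i, name in enumerate(header):
        if name is None or str(name).strip() == "":
            problems.append(f"column {i + 1}: missing header")

    # Rule: no blank rows in the middle of the dataset.
    for r, row in enumerate(body, start=2):  # row 1 is the header
        if all(cell is None or cell == "" for cell in row):
            problems.append(f"row {r}: blank row")

    # Rule: consistent data types per column (blank cells are ignored).
    for i, name in enumerate(header):
        types = {
            type(row[i]).__name__
            for row in body
            if i < len(row) and row[i] is not None and row[i] != ""
        }
        if len(types) > 1:
            problems.append(f"column {name!r}: mixed types {sorted(types)}")

    return problems


# Hypothetical sample: one blank row, and Revenue stored as text in one row.
sample = [
    ["Date", "Product Name", "Revenue", "Region"],
    [date(2024, 1, 5), "Widget", 1200.0, "North"],
    [None, None, None, None],
    [date(2024, 1, 6), "Gadget", "950", "South"],
]

for problem in check_table(sample):
    print(problem)
```

Running a check like this before building formulas or pivot tables surfaces the same issues that would otherwise show up later as wrong totals or broken lookups.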

Who Should Learn Excel for Data Analysis?

Excel is not limited to analysts or data specialists. In reality, it’s useful across almost every role:

| Role | How Excel Is Used in Real Life |
| --- | --- |
| Students and fresh graduates | Learn how to work with data, build basic reports, analyse assignments, and develop problem-solving skills that employers expect from day one. Excel helps bridge the gap between academic knowledge and real-world work. |
| Business analysts | Clean and organise raw data, perform exploratory analysis, create pivot tables, and turn numbers into insights that support business decisions and strategy discussions. |
| Finance and accounting professionals | Manage budgets, track expenses, build financial models, reconcile accounts, and automate recurring reports to improve accuracy and save time. |
| Marketing analysts | Analyse campaign performance, track conversions and ROI, segment customers, and spot trends in customer behaviour using dashboards and data summaries. |
| Operations managers | Monitor KPIs, track inventory and supply chains, analyse process efficiency, and identify bottlenecks to improve day-to-day operations. |

If your role involves numbers, reports, or decisions, Excel skills are essential.

Industry insight:

Job listings for roles like Business Analyst, Financial Analyst, and Operations Analyst frequently list Excel as a mandatory or preferred skill, even when advanced tools like SQL or Python are also mentioned.

Advanced Excel Formulas for Data Analysis

Once you’re comfortable with the basics, it’s time to explore advanced Excel formulas for data analysis.

Examples of Advanced Formulas

- Nested IF statements – for multi-condition logic

- Array formulas – to calculate across dynamic ranges

- Dynamic ranges using OFFSET – for flexible data sets

- SUMPRODUCT – for weighted calculations

- Logical combinations with AND/OR – for decision logic

- Advanced lookups with INDEX + MATCH – more flexible than VLOOKUP, because the lookup column can sit anywhere and the formula survives inserted columns

These formulas allow you to handle scenarios like:

- Multi-condition analysis

- Weighted averages

- Dynamic and scalable reporting

If you’re serious about Excel for data analysis, these formulas are non-negotiable.
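To make two of these concrete: a weighted average in Excel is typically written as `=SUMPRODUCT(values, weights) / SUM(weights)`, and multi-condition logic as a nested IF such as `=IF(A2>=90, "High", IF(A2>=70, "Medium", "Low"))`. The Python sketch below mirrors that logic so each step is visible. The cell reference, the thresholds 90 and 70, and the sample prices are illustrative assumptions, not values from any real workbook.

```python
def weighted_average(values, weights):
    """Mirror =SUMPRODUCT(values, weights) / SUM(weights)."""
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    total_weight = sum(weights)
    if total_weight == 0:
        raise ValueError("weights must not sum to zero")
    # SUMPRODUCT multiplies the ranges pairwise and adds up the products.
    return sum(v * w for v, w in zip(values, weights)) / total_weight


def rating(score):
    """Mirror a nested IF with two illustrative thresholds (90 and 70)."""
    if score >= 90:
        return "High"
    if score >= 70:
        return "Medium"
    return "Low"


# Hypothetical data: unit prices weighted by units sold.
prices = [10.0, 20.0, 30.0]
units = [1, 2, 2]

avg_price = weighted_average(prices, units)
print(avg_price)   # (10*1 + 20*2 + 30*2) / 5
print(rating(85))
```

The plain average of these prices is 20, while the weighted average is 22, because more units sold at the higher prices. That gap is exactly what SUMPRODUCT captures.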

Practical reality:

In many organisations, complex business logic is still handled directly in Excel using advanced formulas – long before automation or coding solutions are introduced.

Excel Functions Used for Data Analysis in Real Projects

In real projects, Excel functions are rarely used in isolation. Analysts combine multiple functions to solve practical problems efficiently.

For example, IF combined with COUNTIF helps validate data and flag issues early. INDEX, MATCH, and IFERROR together create robust lookups that return a clean fallback instead of an error when a value is missing. SUMIFS aggregates data based on multiple conditions – something that comes up constantly in business analysis.

Common Function Combinations:

- IF + COUNTIF for data validation and quality checks.

- INDEX + MATCH + IFERROR for robust, error-resistant lookups.

- SUMIFS for aggregating sales or other metrics across multiple criteria.

These combinations of Excel functions for data analysis help transform raw data into decision-ready insights that business leaders can trust.
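In a worksheet, these combinations usually look like `=IF(COUNTIF(A:A, A2)>1, "Duplicate", "OK")`, `=IFERROR(INDEX(B:B, MATCH(E2, A:A, 0)), "Not found")`, and `=SUMIFS(D:D, B:B, "North", C:C, "Widget")`. The column letters are placeholders. The Python sketch below reproduces the same three behaviours on a small made-up sales list; the helper names `sum_ifs`, `flag_duplicates`, and `lookup` are hypothetical.

```python
from collections import Counter

# Hypothetical sales rows; order A1 appears twice on purpose.
sales = [
    {"order_id": "A1", "region": "North", "product": "Widget", "amount": 100},
    {"order_id": "A2", "region": "North", "product": "Gadget", "amount": 250},
    {"order_id": "A3", "region": "South", "product": "Widget", "amount": 300},
    {"order_id": "A1", "region": "North", "product": "Widget", "amount": 100},
]


def sum_ifs(rows, sum_field, **criteria):
    """Sum sum_field over rows that match every criterion (like SUMIFS)."""
    return sum(
        r[sum_field]
        for r in rows
        if all(r[field] == wanted for field, wanted in criteria.items())
    )


def flag_duplicates(rows, key):
    """Label each row like IF(COUNTIF(range, cell) > 1, "Duplicate", "OK")."""
    counts = Counter(r[key] for r in rows)
    return ["Duplicate" if counts[r[key]] > 1 else "OK" for r in rows]


def lookup(rows, key_field, key, return_field, default="Not found"):
    """First exact match, with a fallback like IFERROR(INDEX(MATCH()), default)."""
    for r in rows:
        if r[key_field] == key:
            return r[return_field]
    return default


north_widgets = sum_ifs(sales, "amount", region="North", product="Widget")
flags = flag_duplicates(sales, "order_id")

print(north_widgets)
print(flags)
print(lookup(sales, "order_id", "Z9", "amount"))
```

Note that the duplicated order inflates the North/Widget total, which is why the validation step normally runs before the aggregation.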

Excel Tricks for Data Analysis That Save Time

When you work with data regularly, speed matters. The right Excel tricks can significantly improve productivity and accuracy.

Useful Shortcuts for Data Analysis in Excel

Simple shortcuts – like converting data into tables, applying filters instantly, or inserting pivot tables quickly – can save hours over time.

- Ctrl + T → Convert data to a table.

- Alt + = → AutoSum

- Ctrl + Shift + L → Filters

- Alt, N, V (pressed in sequence on Windows) → Insert Pivot Table

- Ctrl + Arrow Keys → Navigate large datasets

Using the right shortcut for data analysis in Excel can save hours every week.

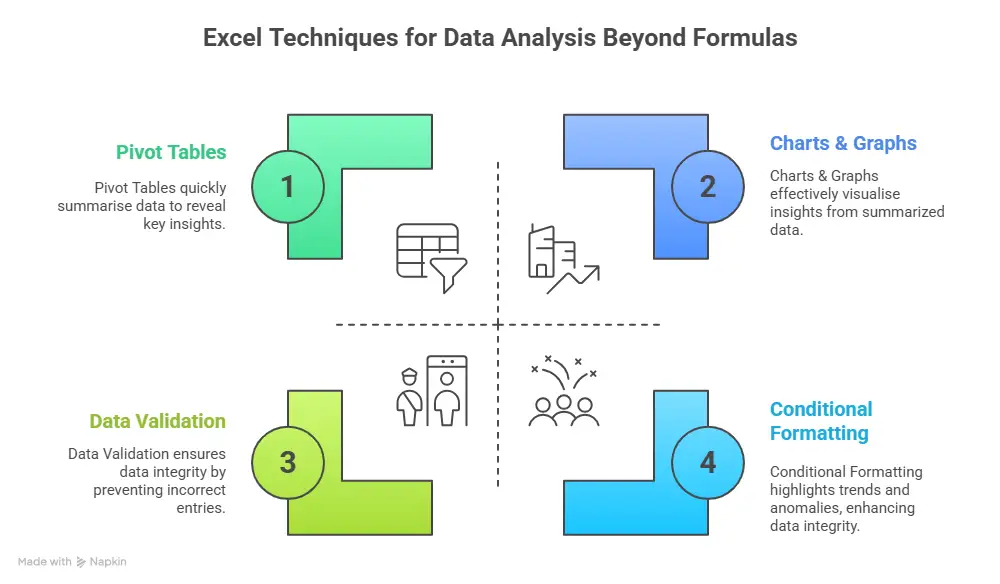

Excel Macros for Data Analysis and Automation

As your work becomes more repetitive, manual effort starts slowing you down. That’s where Excel macros for data analysis become valuable.

Macros allow you to automate repetitive reports, clean and format data automatically, apply formulas and charts consistently, and reduce human errors. They take some learning, but for professionals handling recurring reports or large datasets, macros are a powerful addition to Excel techniques for data analysis.

Did you know that even basic Excel automation can reduce repetitive reporting time by 30-50%, especially in roles involving weekly or monthly reports?
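Excel macros themselves are recorded or written in VBA. The sketch below uses Python purely to illustrate the idea behind an automated report: the same clean-up and summary steps run in the same order every time, so nothing is skipped or done by hand. The column names `Region` and `Amount`, the function names, and the sample rows are assumptions made for this example.

```python
def clean_rows(rows):
    """Apply the same clean-up steps to every row, in a fixed order."""
    cleaned = []
    for row in rows:
        # Trim stray spaces from text cells (similar in spirit to TRIM).
        row = [c.strip() if isinstance(c, str) else c for c in row]
        # Drop rows that are entirely empty.
        if all(c is None or c == "" for c in row):
            continue
        cleaned.append(row)
    return cleaned


def monthly_report(rows):
    """Clean the raw rows, then total the Amount column per Region."""
    header, *body = clean_rows(rows)
    region_col = header.index("Region")
    amount_col = header.index("Amount")
    totals = {}
    for row in body:
        region = row[region_col].title()  # normalise capitalisation
        totals[region] = totals.get(region, 0) + row[amount_col]
    return totals


# Hypothetical raw export with stray spaces, mixed case, and a blank row.
raw = [
    ["Region", "Amount"],
    [" north ", 100],
    ["", None],
    ["North", 50],
    ["south", 70],
]

report = monthly_report(raw)
print(report)
```

A recorded macro plays the same role inside a workbook: once the steps are captured, each weekly or monthly run repeats them identically.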

Learn Excel for Data Analysis: Free vs Structured Learning

Many beginners search for ways to learn Excel for data analysis for free, and while free resources are useful, they often lack structure.

Free Learning Resources

- YouTube tutorials

- Excel practice files

- Blogs and forums

- Sample data for Excel analysis

These help you get started, but they usually don’t offer a clear progression path.

Sample Excel Data for Analysis: The Right Way to Practice

The fastest way to learn Excel for data analysis is by working with real-world datasets. Theory alone doesn’t prepare you for messy, incomplete, or inconsistent data – something every analyst deals with daily.

Using sample Excel data for analysis helps you practise cleaning, organising, interpreting, and visualising information. Sales data, employee records, finance sheets, and marketing performance data are all excellent examples of sample data for Excel analysis.

The more realistic your practice data is, the stronger your Excel problem-solving skills become.

Excel Formulas for Data Analysis: Building Analytical Thinking

Excel formulas for data analysis form the foundation of all analytical work in Excel. Basic formulas like SUM, AVERAGE, COUNT, and IF help you understand what your data is doing and why.

Over time, as you gain confidence, formulas stop feeling technical and start feeling logical. Instead of memorising syntax, you focus on answering questions – totals, trends, comparisons, and exceptions.

This shift is what turns Excel from a calculation tool into a true analysis tool.
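As a small illustration of that shift from syntax to questions, the Python sketch below answers the same questions that `=SUM(range)`, `=COUNT(range)`, `=AVERAGE(range)`, and `=IF(cell>=1000, "On target", "Below target")` answer in a sheet. The revenue figures and the target of 1000 are invented for the example, and `None` stands in for an empty cell.

```python
# Hypothetical revenue column; None stands for an empty cell.
revenue = [1200, 950, None, 1430, 800]

# Excel's SUM, COUNT, and AVERAGE all skip empty cells, so filter them first.
numbers = [v for v in revenue if v is not None]

total = sum(numbers)        # "What is the total?"        like =SUM(range)
count = len(numbers)        # "How many values are there?" like =COUNT(range)
average = total / count     # "What is typical?"           like =AVERAGE(range)

# "Which rows are exceptions?" like =IF(cell>=1000, "On target", "Below target")
TARGET = 1000  # illustrative threshold
labels = ["On target" if v >= TARGET else "Below target" for v in numbers]

print(total, count, average)
print(labels)
```

Each line maps to one question about the data, which is the habit the formulas are meant to build.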

Formulas for Data Analysis in Excel: Real-World Applications

Excel formulas aren’t academic – they’re practical tools used daily in business.

| Use Case | How Excel Is Used in Practice |
| --- | --- |
| Sales performance tracking | Track individual and team sales, compare targets vs actuals, identify top-performing products or regions, and spot trends over time using charts and pivot tables. |
| Budget vs actual analysis | Compare planned budgets with real spending, highlight variances, control costs, and support better financial decision-making through structured reports. |
| Financial forecasting | Analyse historical data to project future revenue, expenses, or demand, helping teams plan resources and set realistic business targets. |
| HR attrition analysis | Monitor employee turnover, identify patterns by department or tenure, and uncover potential retention issues before they impact the business. |
| Marketing campaign evaluation | Measure campaign performance across channels, track conversions and ROI, and understand what’s working (and what isn’t) to optimise future campaigns. |

These real-world applications reinforce why Excel for data analysis remains indispensable.
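Budget vs actual analysis is a good example of how simple the underlying arithmetic is: variance is actual minus budget, and percentage variance is that difference divided by budget. In a sheet this might be `=C2-B2` and `=(C2-B2)/B2`, with the columns chosen arbitrarily here. The Python sketch below applies the same calculation to made-up categories and amounts.

```python
# Hypothetical planned and actual spending per category.
budget = {"Marketing": 5000, "Travel": 2000, "Software": 3000}
actual = {"Marketing": 5600, "Travel": 1500, "Software": 3000}


def variance_report(budget, actual):
    """Return (category, variance, percent variance, status) per budget line."""
    rows = []
    for category, planned in budget.items():
        spent = actual.get(category, 0)
        variance = spent - planned
        # Guard against division by zero when nothing was budgeted.
        pct = round(variance / planned * 100, 1) if planned else None
        if variance > 0:
            status = "Over"
        elif variance < 0:
            status = "Under"
        else:
            status = "On budget"
        rows.append((category, variance, pct, status))
    return rows


report = variance_report(budget, actual)
for row in report:
    print(row)
```

The status column does the job that conditional formatting often does in Excel: it draws attention to the lines that need a conversation.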

Excel for Data Analysis vs Other Tools

Excel is often compared with Python, SQL, and BI tools. Each has its own strengths.

Excel stands out for quick analysis, small to medium datasets, ad-hoc reporting, and business-friendly workflows. Rather than replacing other tools, Excel for data analysis often complements them.

For many professionals, Excel is the bridge between raw data and advanced analytics.

| Criteria | Excel | Python | SQL |
| --- | --- | --- | --- |
| Beginner-friendly & No coding required | ✅ | ❌ | ❌ |
| Quick ad-hoc analysis & Immediate visual feedback | ✅ | ❌ | ❌ |
| Ideal for small to medium datasets | ✅ | ❌ | ❌ |
| Business-friendly interface | ✅ | ❌ | ❌ |
| Fast reporting & presentations | ✅ | ❌ | ❌ |
| Advanced automation & ML | ❌ | ✅ | ❌ |
| Handling very large datasets | ❌ | ✅ | ✅ |

Important Excel Functions for Data Analysis You’ll Use Every Day

Most analysts rely on a handful of functions again and again. These include:

- XLOOKUP – for flexible lookups

- IFERROR – to handle exceptions

- Text functions (LEFT, RIGHT, MID) – for text clean-up

- Logical operators – for decision logic

These Excel functions for data analysis make your workflows cleaner, your logic stronger, and your outputs more reliable. They matter not because they’re complex, but because they solve real business problems efficiently. Once mastered, they dramatically reduce manual effort and mistakes.
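For readers who think more easily in code, here is a rough Python sketch of what the text functions and XLOOKUP do. `LEFT(text, n)`, `RIGHT(text, n)`, and `MID(text, start, n)` slice a string (MID counts from 1), and `XLOOKUP(value, lookup_array, return_array, if_not_found)` returns the first exact match or a fallback. The SKU format and the country table are invented for the example.

```python
def left(text, n):
    """First n characters, like LEFT(text, n)."""
    return text[:n]


def right(text, n):
    """Last n characters, like RIGHT(text, n)."""
    return text[-n:] if n > 0 else ""


def mid(text, start, n):
    """n characters from a 1-based start position, like MID(text, start, n)."""
    return text[start - 1:start - 1 + n]


def xlookup(value, lookup_values, return_values, if_not_found="Not found"):
    """First exact match, like XLOOKUP with its optional if_not_found argument."""
    for key, result in zip(lookup_values, return_values):
        if key == value:
            return result
    return if_not_found


# Hypothetical SKU layout: country code, year, sequence number.
sku = "IN-2024-00042"
country_code = left(sku, 2)
year = mid(sku, 4, 4)
sequence = right(sku, 5)

codes = ["IN", "US"]
names = ["India", "United States"]

print(country_code, year, sequence)
print(xlookup(country_code, codes, names))
print(xlookup("FR", codes, names))
```

Splitting a code into parts and then looking one part up in a reference table is a very common clean-up pattern, and the fallback value keeps the output readable when a code is missing.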

Real-world workflow:

In practice, Excel is rarely replaced outright. Instead, it acts as the bridge between raw data sources like SQL databases and advanced analytics or BI dashboards.

Excel for Data Analysis in Real Careers

Excel skills are expected across roles, even when they’re not explicitly mentioned in job descriptions. Finance teams use Excel for budget forecasting and analysis. Marketing teams track campaign performance and ROI. Operations teams rely on Excel to measure efficiency and cost.

Because Excel for data analysis is used everywhere, it offers strong career flexibility. The same skill set applies across industries, functions, and experience levels. This is what makes Excel such a reliable long-term skill.

| Excel Career Roles | Real-World Use of Excel |
| --- | --- |
| Financial Analyst | Budgeting, forecasting, and financial reporting |
| Business Analyst | Data analysis, pivot tables, and dashboards |
| Marketing Analyst | Campaign tracking, ROI analysis |
| Operations Manager | KPI tracking, cost and efficiency analysis |
| HR Analyst | Attrition and workforce analysis |
| Sales Analyst | Sales tracking and revenue forecasting |

(Source: Naukri, Indeed, LinkedIn)

Career trend:

Many professionals working in analytics roles today began with Excel and later expanded into tools like SQL, Python, and Power BI – making Excel a common entry point into data careers.

Why Learn Excel for Data Analysis with Imarticus Learning

If you’re serious about moving beyond basic spreadsheets and using data the way businesses actually do, structured training can make a real difference. Learning on your own works up to a point – but guidance, practice, and real-world context are what help skills stick.

Programs like the Postgraduate Program in Data Science & Analytics by Imarticus Learning are designed with this in mind. Instead of teaching tools in isolation, the curriculum shows you how Excel fits into the larger analytics workflow. You learn how to move from spreadsheets to databases, code, and dashboards – just like in real roles.

Here’s what you’ll work with:

- Excel for data analysis and reporting.

- SQL for querying and managing data.

- Python for analytics and automation.

- Statistical modelling and machine learning.

- Power BI and Tableau for visualisation.

This project-driven program lets you work with real datasets using Excel, SQL, Python, Tableau, and Power BI – so the skills you build are practical and job-ready. You also get interview preparation and career support to help you apply what you’ve learned with confidence.

Take the next confident step with the Postgraduate Program in Data Science and Analytics, where Excel for data analysis becomes your starting point and a powerful foundation for growing into advanced analytics roles.

FAQs About Excel for Data Analysis

If you’re learning Excel for data analysis, you might still have doubts about formulas, shortcuts, courses, or how Excel fits into real analytics careers. These frequently asked questions address the most common ones, so you can spend less time searching and more time using Excel with confidence.

What are the most important Excel functions for data analysis?

You don’t need to learn every Excel function to be effective. Most people rely on a core set – functions like SUM, AVERAGE, IF, COUNTIF, SUMIF, XLOOKUP, INDEX, MATCH, and IFERROR. These cover the majority of real-world data analysis tasks and form the backbone of everyday Excel work.

What are the 4 types of data analysis?

The four most common types of data analysis are:

- Descriptive – what happened

- Diagnostic – why it happened

- Predictive – what might happen next

- Prescriptive – what should be done

Excel for data analysis is especially strong in descriptive and diagnostic analysis, and often supports predictive analysis through trends and forecasting.
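The simplest form of predictive analysis is a straight-line trend, which Excel exposes through functions such as `FORECAST.LINEAR(x, known_y's, known_x's)`. The Python sketch below fits the same kind of least-squares line by hand so the arithmetic is visible. The monthly revenue figures are invented, and the function name `linear_forecast` is hypothetical.

```python
def linear_forecast(x_new, known_ys, known_xs):
    """Predict y at x_new from a least-squares straight line."""
    n = len(known_xs)
    if n != len(known_ys) or n < 2:
        raise ValueError("need at least two matching x and y values")
    mean_x = sum(known_xs) / n
    mean_y = sum(known_ys) / n
    # Spread of x, and how x and y move together.
    sxx = sum((x - mean_x) ** 2 for x in known_xs)
    if sxx == 0:
        raise ValueError("all x values are identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(known_xs, known_ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept + slope * x_new


# Hypothetical revenue for months 1 to 4, growing by 20 each month.
months = [1, 2, 3, 4]
revenue = [100, 120, 140, 160]

forecast = linear_forecast(5, revenue, months)
print(forecast)
```

A straight line only makes sense when the history is roughly linear; seasonal or irregular data calls for other methods.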

Are there Excel for data analysis books or PDFs?

Yes, there are several Excel for data analysis books and PDFs available that explain formulas, functions, and case studies. These are excellent reference materials, especially when paired with hands-on practice.

Is there an Excel for data analysis free course with a certificate?

Some platforms offer free Excel for data analysis courses with certificates, usually as introductory programs. These help you get started, but advanced roles typically require deeper, practical experience beyond certificates. Imarticus Learning teaches Excel for data analysis as part of a broader, industry-aligned analytics curriculum. Structured courses like this can be a better fit because they go beyond formulas: the program emphasises real projects, practical application, and career-ready skills, helping learners move from basic Excel knowledge to becoming confident analytics professionals.

How is Excel used for data analysis?

From calculating totals and comparing performance to creating charts, reports, and pivot tables, Excel helps turn raw data into clear insights. In most workplaces, it’s the first tool people use for analysis before moving to more advanced platforms.

Can Excel handle large datasets for data analysis?

Excel works very well for small to medium-sized datasets and is widely used for exploration, reporting, and validation. For extremely large datasets, tools like SQL or Python may be more efficient, but Excel is often still used to review and present the results.

What Excel skills do employers actually look for?

Employers usually care less about how many formulas you know and a lot more about what you can do with data. Skills like data cleaning, logical formulas, pivot tables, lookups, and clear reporting are highly valued. Being able to explain insights clearly is just as important.

Excel for Data Analysis: Your Next Smart Career Move

Excel may not always be the most talked-about tool, but it’s often the one doing the most work behind the scenes.

Excel for data analysis isn’t just a beginner skill – it’s a career skill that stays relevant as you grow. It helps you move from working with numbers to understanding them, from following reports to driving decisions. Whether you’re analysing sales, tracking performance, or preparing for a larger role in analytics, Excel gives you a solid, reliable foundation.

If you’re at a stage where you don’t just want to learn anymore – but actually want to use what you know in real situations – this is where having structure really matters. With the right guidance, supportive mentors, and hands-on practice, Excel stops feeling like just another tool and starts becoming your gateway into the wider world of data analytics.

That’s exactly where the Postgraduate Program in Data Science and Analytics from Imarticus Learning comes in. Excel is taught as part of a complete, industry-aligned journey, which means you’re not just ticking off concepts or memorising formulas.

Start today, and let Excel become the foundation that supports and grows your analytics career over time.