A data scientist is someone with the skills and qualifications to interpret and analyze the complex data a company deals with. This interpretation and analysis make the data easier for non-specialists to understand. In a company, the primary function of a data scientist is to interpret data and help the company make decisions based on it.

Data scientists are skilled professionals who take a huge mass of complex or messy data and apply their mathematical, statistical and programming skills to organize, interpret and analyze it so that a general audience can understand it.

Data science is a profession in high demand. In an era when technology drives nearly everything, companies need their data simplified so they can understand it and make crucial decisions accordingly.

In this article, we will talk about how data scientists tend to publish their work. Keep reading and find out!

Share through a beautifully written blog!

Data scientists may work in academia or in industry. Inside a company, a data scientist shares work through an internal network limited to employees or to the people the data concerns. A data scientist in a company mainly recommends changes to decision-making based on the data, but a data scientist working in academia has the opposite approach to sharing: the work is published openly.

Academic research and research papers are not limited to a small group of people; they are available to the general public. Data scientists often present their work through blogs. Because data does not interest everyone, they try to make their blogs as engaging and easy to follow as possible.

Social media is a platform for almost everything

Data may also be shared through public repositories and on platforms such as Google, Facebook and Twitter. People training to become data scientists can then study the published work of learned and experienced practitioners.

A research paper shared by a data scientist turns a lot of complex data into an organized, readable form. New data scientists can learn from these papers how experienced practitioners sort and structure data.

Emails have a lot of conveniences!

Last but not least, data scientists also share their work and research papers by email. If a company does not have an internal network, email serves the purpose. A data scientist who works as a freelancer for a company or individual after completing Genpact data science courses in India will also use email to share work with the people concerned.

Data scientists can easily work as freelancers. Many prefer working from home because most of their work is done on computers, and many large companies that employ data scientists allow them to work from home.

Takeaway:

Whether research data or research papers are shared publicly depends on the data scientist and their employer. A company may allow its data to be shared internally but not publicly. If you are thinking of making this well-paid role your profession, a number of Genpact data science courses in India can lead to a professional data science qualification that makes you eligible for jobs at companies dealing with complex data.

Category: Analytics

Become a Data Scientist in 90 Days

Data science is similar to any other field of science. Scientists conduct research and form hypotheses and theories based on the information available. In data science, however, these hypotheses are built from the data made available to the scientists. The primary requirement for becoming a data scientist within 90 days is to understand data and have a knack for analyzing it.

A career in data science is a hot topic in today's market. Organizations around the globe rely on big data, and they need skilled data scientists to make sense of it. Analyzing collected data involves visualizing it, identifying specific patterns and then backing those findings up with reports. What sets data science apart from more traditional business analysis, however, is its use of complex algorithms. Advanced algorithms such as neural networks, regression algorithms and other machine learning algorithms are used to scan the available data and uncover what the numbers and codes mean.

To become a data scientist, an individual must have adequate knowledge of the fundamentals and framework of these algorithms. This is only possible with a strong foundation in mathematics and statistics. So if you aspire to be a data scientist, get the basics right by strengthening your mathematics and statistics skills.

Another key fundamental of data science is understanding its purpose. The core objective of a data scientist is to answer questions by going through and analyzing large sets of recorded data. Consider the example of the popular entertainment network Netflix. In 2006, Netflix launched the Netflix Prize, offering one million dollars to whoever could improve the accuracy of its movie recommendation algorithm by 10%; the prize was awarded in 2009.

Such is the demand for data scientists in the current market. For beginners, however, it is best not to jump straight into complex code and large amounts of data, since analyzing large datasets usually means using multiple algorithms. To become an effective data scientist within 90 days, it is critical to know your personal strengths and weaknesses. Taking small steps helps, as it builds confidence and improves skills gradually. By keeping these factors in mind, an individual can learn data science quickly and become proficient at it.

Another essential step in becoming a data scientist is to go beyond learning Hadoop. Many data science courses not only help you become efficient with Hadoop but also help you gain real knowledge of reading and understanding the various algorithms that are part of the data science game.

To conclude, data science draws on knowledge from many domains: it combines mathematics, statistics and algorithms. The job of a data scientist is not only to formulate a hypothesis but also to test it against the data. All these elements make data science unique and challenging to master. However, with the right guidance from data science courses, an aspiring individual can reach the top of the data science industry.

What Are Some of The Advantages and Disadvantages of Embedded Analytics For A Business?

What is embedded analytics?

Embedded analytics builds analytics capabilities directly into a transaction processing system (TPS) or other business application. This lets users run analyses without depending on a separate, third-party analytics application or system. It helps operations and IT managers understand, manage and improve the performance of the system. Common uses of embedded analytics include data visualization, interactive reports, mobile business intelligence and user engagement.

To properly understand and use embedded analytics, consider one of the many business analyst courses available. There are a lot of courses on the market, so make a smart choice and pick the one that best aligns with your interests. Everything has its pros and cons, and embedded analytics likewise has advantages and disadvantages in a business setting.

Advantages of embedded analytics are:

1) Value and time

Embedded analytics can enhance the business applications your customers use. Whether you are a wholesaler, a retailer or any business with a lot of data, analyzing that much data quickly is very difficult. Yet examining data holds a lot of value: analyzed data makes it easy to compare results and form your strategy for the future, helps you make better decisions and can even support business forecasts. With embedded analytics, you can analyze data faster and make decisions more quickly.

2) Great for engaging customers

By adding meaningful analytics inside a customer portal, you can give customers a more personalized user experience. This helps increase loyalty and retention and can create new revenue opportunities as well. Netflix is one company that has been quite successful in integrating analytics into its service: its own research claims that the resulting reduction in customer churn is worth about 1 billion dollars a year in retained revenue.

3) Better business choices

Embedded analytics gives users access to information that helps them make business choices as the environment changes. Your employees can respond to risks or threats faster than usual, which helps the business make smart decisions. Embedded analytics also lets employees quickly build charts so they can examine business performance whenever they want.

Disadvantages of embedded analytics are:

1) Not easy to use

Not everyone is tech-savvy, and some people may find the process very difficult to follow. Learning it all on your own can be confusing and complex, so we suggest taking a course, such as a business analytics course. Business analytics courses can bring great value to you and your firm.

Conclusion:

Embedded analytics improves the customer experience while increasing end-user adoption and growing revenue. Unlike standalone business intelligence programs, embedded analytics keeps users engaged on the site for longer and analyzes data to support quick decision-making. It is well worth trying, and if you want to learn it, there are many options: books, courses and online platforms can all help. We suggest a course, as it is the most effective way to learn.

The Next Big Thing in Data Analytics

Data analytics is evolving fast, and the growing use of streaming data, machine data and big data adds to the ongoing challenges of analyzing log data, enterprise application data, web information, and historical data stored in documents and reports.

Today, data analysts struggle to keep up with business and client requests. There is a substantial shortage of talent among business data analysts and data scientists, and businesses continue to struggle with data reconciliation, data blending, data access, development of data analytics tools and data mining techniques.

Data analysts and data scientists are frequently unable to find the data and information they need, and are often unaware of the latest data analytics tools, such as self-service data preparation tools, that can improve productivity. Furthermore, the continuous development of social technologies and their social features has raised expectations about timeliness and information availability. Users now have similarly high expectations of business information, regardless of where the data originates or how it is formatted. There is increasing demand for instant access to data and for easy ways to share it with key stakeholders.

Data socialization is the evolution of data mining techniques to make data more accessible across companies, teams and individuals. It is changing how businesses think about business data and how employees interact with it.

Data socialization involves a data management platform that links self-service visual data preparation, automation, cataloging, data discovery and governance features with features common to social media platforms. This lets businesses use social-media-style signals such as user ratings, discussions, recommendations and comments to improve how data is used for decision-making.

What is Data Socialisation?

Data socialization enables business analysts, data scientists and other relevant users throughout an organization to search for, reuse and share managed data, supporting agility and collaboration across the enterprise. It lets employees find and use the data accessible to them within a defined data ecosystem, and helps create a social network of curated and certified raw datasets. These data ecosystems have controls, restrictions and limitations that can be defined for each individual in an organization. Together, these techniques strengthen an environment of data access in which analysts and users learn from one another, become more productive and stay connected as they source, clean and prepare data for analytics.

Some Characteristics of Data Socialisation

Some of the critical characteristics of data socialization include:

- Understanding how relevant data is, based on how it is used by different users within the enterprise.

- Collaboration among the key users of a dataset, capturing knowledge that often remains unshared.

- Letting enterprise users search for cataloged data, prepare data models, and index metadata by user, type, application and other parameters.

- Calculating a data quality score, suggesting relevant data sources, and automatically recommending data preparation actions tailored to each user persona.
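The catalog search, per-user access controls and quality scoring described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration: the `Dataset` fields, the numeric clearance levels and the use of completeness (the share of non-missing values) as the quality score are all assumptions, not the design of any particular data socialization product.

```python
from dataclasses import dataclass, field


@dataclass
class Dataset:
    """Catalog entry; every field here is a hypothetical example of metadata."""
    name: str
    owner: str
    data_type: str       # e.g. "sales", "finance"
    application: str     # application the data comes from
    access_level: int    # minimum clearance needed to see the dataset (assumed scheme)
    rows: list = field(default_factory=list)


def quality_score(ds):
    """Completeness score: share of cells that are not missing (None)."""
    cells = [value for row in ds.rows for value in row.values()]
    if not cells:
        return 0.0
    return sum(value is not None for value in cells) / len(cells)


def search(catalog, user_clearance, **filters):
    """Datasets this user may see whose metadata match every filter,
    best quality first."""
    visible = [ds for ds in catalog if ds.access_level <= user_clearance]
    matches = [ds for ds in visible
               if all(getattr(ds, key) == value for key, value in filters.items())]
    return sorted(matches, key=quality_score, reverse=True)


catalog = [
    Dataset("q1_sales", "ana", "sales", "crm", 1,
            [{"region": "north", "revenue": 120}, {"region": None, "revenue": 95}]),
    Dataset("q2_sales", "raj", "sales", "crm", 1,
            [{"region": "south", "revenue": 130}, {"region": "east", "revenue": 110}]),
    Dataset("payroll", "hr", "finance", "erp", 3,
            [{"employee": "e1", "salary": 5000}]),
]

for ds in search(catalog, user_clearance=1, data_type="sales"):
    print(ds.name, round(quality_score(ds), 2))
```

Here a user with clearance 1 sees both sales datasets, with the fully complete q2_sales ranked ahead of q1_sales (one missing region), while payroll stays hidden until clearance 3. A real platform would add ratings, comments and usage signals on top of this kind of metadata filter.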

As more business applications incorporate social media features to improve collaboration, individuals and companies become better informed, more productive and more agile.

Data socialization delivers benefits across data analytics tools and removes obstacles to accessing and sharing data, allowing data scientists, business users and business information analysts to improve their productivity and decision-making. It also empowers analysts, data scientists and other business users across departments to collaborate using the available data. By giving the right people the correct data to make informed, educated and timely decisions, data socialization is considered the next big thing in data analytics.

Join Big Data Analytics Course from Imarticus Learning to start your career in data analytics.

The future of the Global Fermentation Machine Industry

Overview

Fermentation is widely used in the pharmaceutical and beverage industries and is one of the most common processes in everyday manufacturing. Over the ages, fermentation technology has undergone many changes, each one progressive, with machines able to handle larger loads more efficiently. In the future, don’t be surprised if fermentation machines become smart and intelligent, capable of self-assessment and self-direction!

As for the fermentation machine market, it is a niche, but an evergreen one that is always in demand. Given today's preference for probiotic foods for health reasons, demand for fermented products keeps the industry in vogue!

According to top business analysts, the future of the global fermentation machine industry is bright.

Business Analysis

Several business analysts and business analysis companies have undertaken detailed studies of how the fermentation machine market will develop. Its segmentation, growth, shares and future predictions for the forecast period 2018 to 2025 have been analyzed and reported.

Business analysis companies such as Orion Research and Ernst and Young have released reports forecasting the fermentation market. According to one such report, the global fermentation machinery and technology market was valued at approximately USD 1,573.15 million in 2018 and is expected to generate revenue of around USD 2,244.20 million by the end of 2025, a compound annual growth rate (CAGR) of around 6.10% between 2018 and 2025.
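A CAGR figure like this can be checked with the standard formula CAGR = (end value / start value)^(1 / years) − 1. The Python sketch below is a minimal check that assumes seven yearly compounding steps (2018 to 2025); the function names are illustrative.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value, rate, years):
    """Value after growing start_value at a constant annual rate for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the report (USD millions); assumed seven yearly steps 2018 -> 2025.
start, end, years = 1573.15, 2244.20, 2025 - 2018
rate = cagr(start, end, years)
print(f"implied CAGR: {rate:.2%}")
print(f"2025 value if growth were 6.10% a year: {project(start, 0.061, years):,.2f}")
```

With the quoted endpoints, the implied rate is roughly 5.2% a year over seven years. That is below the quoted 6.10%, so the report presumably uses a different base year, period or definition. It is worth checking the source before relying on either number.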

The scope of the industry

Safety measures and regulations are also very important, as food and pharmaceuticals are especially sensitive products that directly affect people's health. The fermentation machine market is ripe across all regions: North America, Latin America, Europe, Asia Pacific, and the Middle East and Africa.

Key players include both big and small companies, such as Zenith Forgings, Hengel, Mauting s.r.o, JUMANOIX, S.L., and Nikko Co. Ltd.

The industry can be segmented by type of machinery into semi-automatic and fully automatic machines, of which the fully automatic ones, as the name suggests, are more advanced. By application area, fermentation machinery is classified into commercial, industrial and other applications. Industrial machines are generally heavy-duty, while commercial machinery aims to minimize errors. Fermentation machinery is also segmented by region of production: the United States, Europe, Japan and China.

Size of the fermentation market

Business analysis experts say that the fermentation machine market will affect many countries across all regions and continents, as it always has.

Countries such as the United States, China, India etc. have been at the forefront of the market since they are large producers as well as consumers. Organizations such as BRICS, the European Union etc. have taken cognizance of the fact that it is an industry that contributes significantly to the countries’ Gross Domestic Product (GDP) and the world economy.

Forecast 2025: Into the future

Though the fermentation machine industry has long existed and kept evolving, the need for sustainable products that are not heavy on the ecosystem pushes for novel methods and technologies. Moreover, as technologies become self-driven, smartly controlled and cloud-operated, the fermentation industry must check whether these changes are feasible, whether they will make it more efficient and whether they will minimize risk. Trial and error and implementation will take a while, and security measures need to be put in place too.

By 2025, the fermentation machine industry is expected to grow steadily and earn much bigger revenues. Business analysts predict that the market will keep growing, as demand is almost always present.

How is Machine Learning Impacting The Education Industry?

Machines today are used more than ever because they are easier to build and deploy, and because they can learn and create value for organizations. The education space is no different. Artificial intelligence and machine learning are being used to create highly personalized and intuitive learning modules for students. One of the biggest benefits of machine learning is a computer's ability to process large volumes of historic and real-time data and analyze it for predictive outcomes. Artificial intelligence is already used to grade papers (multiple-choice questions) fairly and effectively in many schools across the world. It is also improving the lives of specially-abled students by providing tools and equipment that help them study and succeed.

The education industry is moving beyond classroom and textbook learning to create more immersive programs for students. Digital libraries are growing rapidly thanks to emerging technologies such as big data, cloud computing and AI. Another good example of machine learning in education is categorizing content so that students can easily build on existing knowledge. This gives students the opportunity to learn at their own pace and succeed while doing so, which greatly boosts their morale.

Here are a few ways machine learning has become a game changer in the education space.

Supporting Teachers

Machine learning helps teachers design a curriculum that is highly individualized for their students. Kids today are smart, fast learners thanks to their increased exposure to technology, so subjects also need to be contemporary and relevant. Students' historical data, such as marks and activities, can be analyzed to create personalized lessons that match each child's ability to learn and succeed.

Custom-Made Subjects

One of the biggest advantages of machine learning is a personalized learning experience for individuals. Every child learns at a different rate and is proficient in different subjects. Once data has been gathered on a child's abilities, a machine can analyze it and build a program tailored to the child's needs, shaping both the outcome and the pace of learning. It can also help grade students fairly and according to their ability, with less human bias.

Increase Retention

With machine learning, teachers can identify students who are likely to forget material and support them with specialized chapters and techniques to retain the subject. Learning analytics tools such as Wooclap, Yet Analytics and BrightBytes provide predictive insights across different learning ecosystems. This helps educators adapt and significantly improve their content, mapping it to students' needs.

Conclusion

As we keep discovering more ways to use machines effectively in classrooms, it is likely that machines will continue to grow in use and be integrated successfully into education in the near future.

15 Terms Everyone in the R Programming Industry Should Know

Of late, the R language has gained popularity in technology circles. R is open-source software maintained by the R Core Team, a group of developers from all over the world who work voluntarily.

R is used to carry out many statistical operations and is driven from a command line. It is an implementation of the S programming language, which John Chambers and his colleagues developed at Bell Labs in the US; R itself was created by Ross Ihaka and Robert Gentleman at the University of Auckland. The language offers several benefits that give people from different industries a reason to adopt it, and it is among the most widely used languages for machine learning and data analysis.

People building a career in data analytics can find good R programming opportunities. If you are new to this field and want to learn and master it, have a look at these 15 terms everyone in the R programming industry should know:

1). Mean in R – The mean is the average of a set of numbers and gives their central value. To calculate it, add all the numbers together and divide by how many numbers there are. In R, the mean() function does this.

2). The compiler in R – A compiler transforms computer code written in one programming language (the source language) into another language (the target language). R includes a compiler package that byte-compiles R code, for example with its cmpfun() function.

3). Median in R – The median is the middle value of a sorted list of numbers. If the list has an even number of values, there is no single middle value: find the middle pair of numbers, add them and divide by two. In R, the median() function does this.

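4). Variance in R – Variance is the average of the squared differences from the mean. Note that R's var() function computes the sample variance, dividing the sum of squared differences by n − 1 rather than n.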

5). A polynomial in R – The name explains the meaning: "poly" means many and "nomial" means term, so a polynomial is an expression with many terms, such as 3x² + 2x + 1.

6). Element Recycling – When vectors of different lengths are combined in an operation, R reuses the elements of the shorter vector to complete the operation. For example, c(1, 2, 3, 4) + c(10, 20) gives 11 22 13 24. This is known as element recycling.

7). Factor variable – Factors are categorical variables that can hold string or numeric values. They are used in different kinds of graphics and particularly in statistical modeling, where a factor with k levels is typically allocated k − 1 degrees of freedom.

8). Data frame in R – A data frame is a table whose columns can hold different types of data, such as integers and characters.

9). The matrix in R – A matrix stores data of a single (homogeneous) type, such as all integers or all characters, in rows and columns.

10). Function in R – In R, functions are objects themselves, so they can be passed to and returned from other functions. Objects created inside a function are local to it, and a function can return any type of data.

11). Attribute function in R – The attr() function gets or sets an attribute of an object. It takes two arguments: the object and the attribute's name.

12). The length function in R – The length() function gets or sets the length of a vector or other object.

13). Data Structure in R – A data structure is a particular format for storing and organizing data. General examples include files, arrays, records, tables and trees; R's main built-in structures are vectors, lists, matrices, arrays, factors and data frames.

14). File in R – A file with the .R extension contains a script written in the R language, which is designed for graphical and statistical work.

15). Arbitrary function in R – An arbitrary function is any function in a program rather than a specific one; the term is usually used to refer generically to whichever function is under discussion.
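To make a few of these terms concrete, here is a minimal sketch in Python of the mean, median, variance and element recycling described above. These are hand-written analogues that mirror R's behaviour (including var()'s n − 1 divisor and R's recycling warning text). They are not R code, and the function names are illustrative.

```python
import warnings


def mean(xs):
    """Arithmetic mean: sum of the values divided by their count (R: mean(x))."""
    return sum(xs) / len(xs)


def median(xs):
    """Middle of the sorted values; for an even count, the average of the two
    middle values (R: median(x))."""
    s = sorted(xs)
    n = len(s)
    mid = n // 2
    return s[mid] if n % 2 == 1 else (s[mid - 1] + s[mid]) / 2


def variance(xs, sample=True):
    """Average squared deviation from the mean. sample=True divides by n - 1,
    as R's var(x) does; sample=False divides by n."""
    m = mean(xs)
    total = sum((x - m) ** 2 for x in xs)
    return total / (len(xs) - 1 if sample else len(xs))


def recycled_add(a, b):
    """Element-wise a + b with R-style recycling of the shorter vector."""
    if not a or not b:
        return []  # R returns a zero-length result here
    n = max(len(a), len(b))
    if n % len(a) or n % len(b):
        # R still returns a result in this case but warns, mirrored here.
        warnings.warn("longer object length is not a multiple of shorter object length")
    return [a[i % len(a)] + b[i % len(b)] for i in range(n)]


data = [2, 4, 4, 4, 5, 5, 7, 9]
print(mean(data), median(data), variance(data), variance(data, sample=False))
print(recycled_add([1, 2, 3, 4], [10, 20]))
```

The recycled addition returns [11, 22, 13, 24], matching R's c(1, 2, 3, 4) + c(10, 20). In Python itself, the standard statistics module offers variance() (sample) and pvariance() (population) for the same distinction.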

Conclusion

There is much more to learn about the R language before you pursue R programming opportunities. The list above is only a modest starting point.

Machine Learning Tech Can Enhance Wildfire Modelling

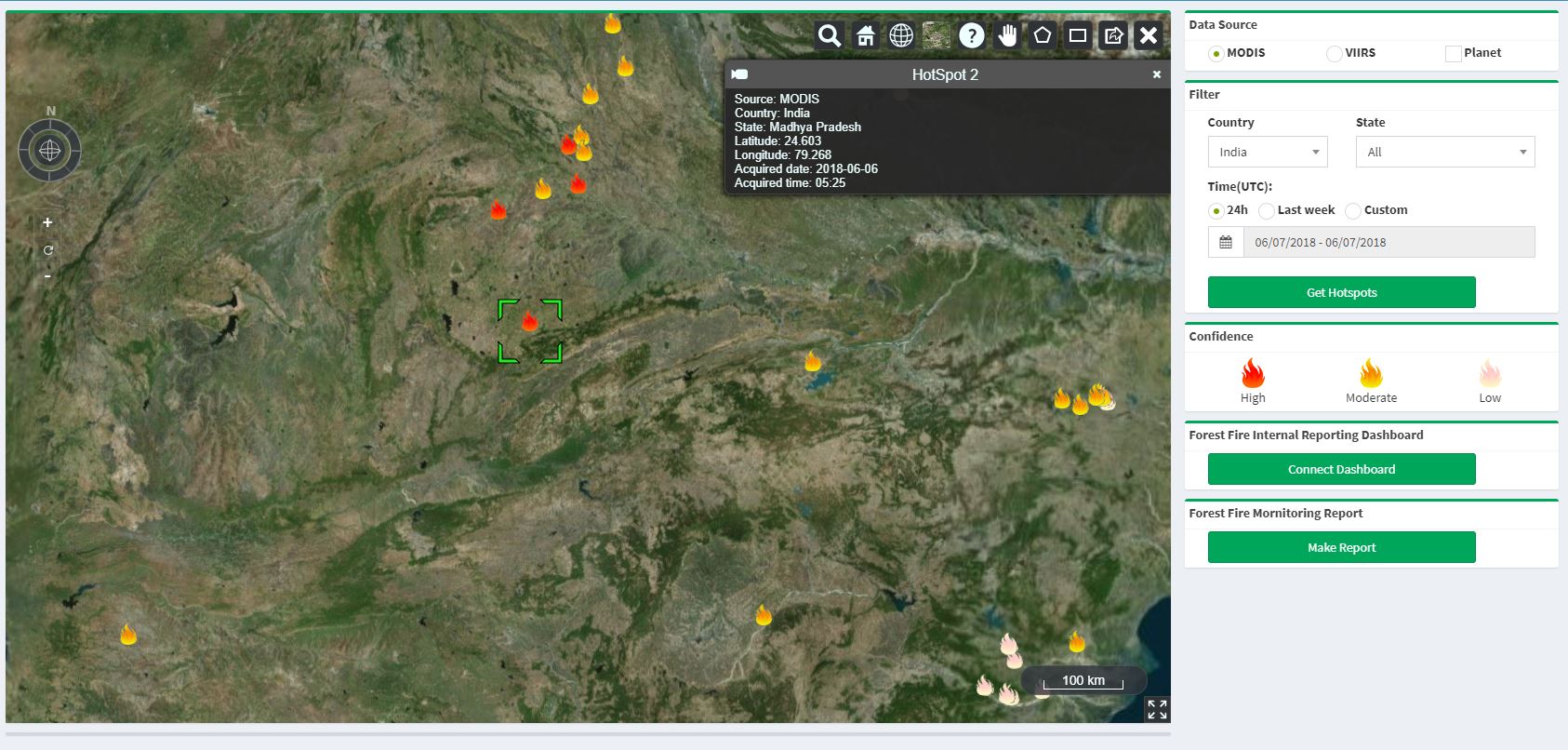

Firefighting is expensive, and machine learning tools are helping analyze forest fires to predict and prevent future disasters. Here is what you need to know about the role of machine learning.

Every year, destructive wildfires burn forests across the globe. With climate change and global warming, scientists and world leaders are increasingly concerned about how to combat natural calamities. In the U.S. alone, millions of dollars are poured into disaster management and rehabilitation.

Significant research is being conducted on wildfire disaster management, and one of the biggest technology investments is in artificial intelligence and machine learning. Catastrophe risk modelers such as EQECAT, RMS and AIR are now developing full-fledged models of the places most vulnerable to wildfire and the factors that influence fire activity. Factors such as climate change, weather conditions and region can create a conducive environment for a forest fire to break out.

These factors can be assessed by artificial intelligence tools. Machine learning lets computers pick up patterns from data quickly, and it can analyze a richer dataset than traditional forecasting systems, helping researchers make informed decisions faster. Once a high-risk scenario is detected, drones can be dispatched to any ensuing fires. This leads to effective use of resources such as firefighters, water and medication, helping governments protect their citizens.

Due to this rapid growth in ability, machine learning can help in urban planning and revolutionize disaster management and resource planning.

Here are the top ways machine learning is helping governments and organizations combat wildfires.

Aiding Rescue

Rescue and rehabilitation are among the most important parts of responding to any natural disaster, and time is of the essence. Using artificial intelligence tools that skim social media data to find survivors is a key development. Machine learning can also process historical data to improve disaster response management, making the best possible use of limited resources.

Predictability of Wildfires

Machines can analyze vast amounts of historical and real-time data to identify the places where wildfires are likely to hit. They can also determine the factors that influence the magnitude of a fire. These predictions can help researchers prepare ahead of time and mitigate the damage.

Insurance Risk Assessment

There is massive potential for machine learning to grow in the insurance industry for risk assessment and allocation. Real-time data processed by machines can be used together with prediction tools to understand risks and allocate resources better, cutting losses. Machine learning can also help insurers align their interests in disaster resilience, safety and urban development in partnership with governments.

Conclusion

Detecting forest fires early with machine learning can help firefighters deal with blazes and can support recovery and prevention.

Linear Regression and Its Applications in Machine Learning!

In supervised machine learning, computers learn from labelled examples, which lets them use their time and effort effectively and efficiently. One of the top ways to do this is linear regression, and here’s how.

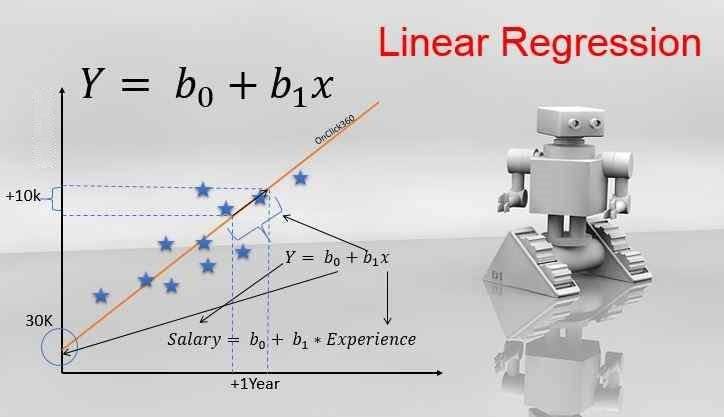

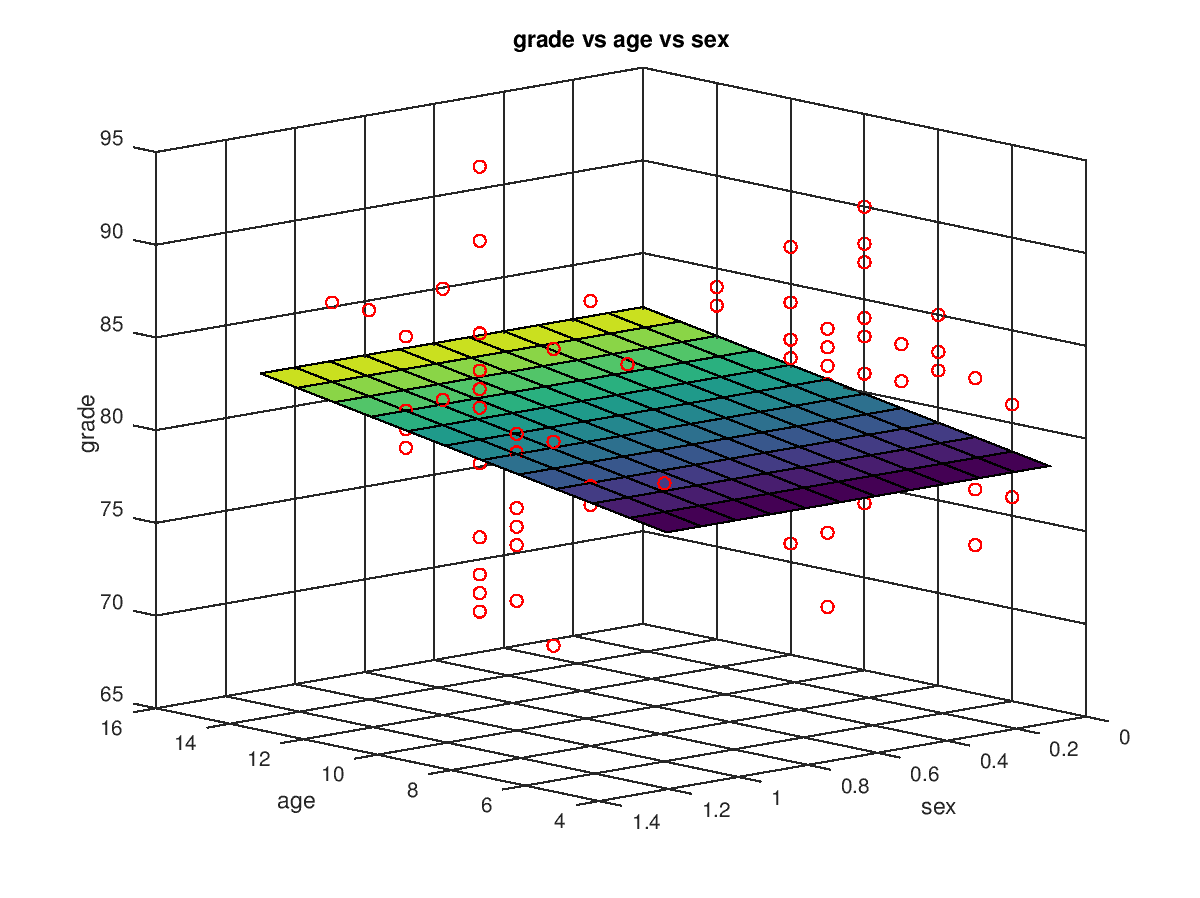

Even the most diligent managers make mistakes. Today, however, automation powers most industries, reducing cost, increasing efficiency and reducing human error, driven largely by the rising use of machine learning and artificial intelligence. So, what gives machines the ability to learn from large volumes of data? Learning methods such as linear regression, which you can master with the help of a dedicated data science course.

So, what is linear regression? Simply put, many machines learn under supervision: they are trained on examples where the correct answer is already known. Linear regression is a supervised machine learning algorithm of this kind. Once trained, a machine can apply what it has learned to new problems consistently, which reduces human error.

Linear regression is used to model the relationship between variables and to make forecasts. It predicts a dependent variable by analyzing how one or more independent variables affect it. Those proficient in Python can import a linear regression model from the scikit-learn library or write their own algorithm before applying it. This makes linear regression highly customisable and easy to learn. Organizations worldwide are investing heavily in linear regression training to prepare their workforce for the future.
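The relationship between one independent variable and a dependent variable can be fitted with ordinary least squares: the slope is the covariance of x and y divided by the variance of x, and the intercept makes the line pass through the means. The sketch below writes this out by hand in Python so the formula is visible. The advertising and sales numbers are made up for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x (one independent variable)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs")
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar  # line passes through (x_bar, y_bar)
    return intercept, slope


def predict(intercept, slope, x):
    """Forecast the dependent variable for a new value of the independent one."""
    return intercept + slope * x


# Hypothetical data: monthly advertising spend (independent) vs. units sold (dependent).
ad_spend = [1, 2, 3, 4, 5]
units_sold = [12, 15, 20, 22, 26]
b0, b1 = fit_line(ad_spend, units_sold)
print(f"fitted: units_sold = {b0:.2f} + {b1:.2f} * ad_spend")
print("forecast at ad_spend = 6:", predict(b0, b1, 6))
```

On this toy data the fitted line is units_sold = 8.5 + 3.5 × ad_spend, so the forecast at an ad spend of 6 is 29.5. In practice, scikit-learn's `sklearn.linear_model.LinearRegression` fits the same model (`fit`, then `intercept_` and `coef_`); the hand-written version simply shows what it computes.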

The top benefits of linear regression in machine learning are as follows.

Forecasting

A top advantage of using a linear regression model in machine learning is the ability to forecast trends and make feasible predictions. Data scientists can use these predictions to make further deductions. When the underlying relationship is roughly linear, the method is quick, efficient and accurate, largely because machines process large volumes of data with minimal human intervention. Once the model is established, the learning process becomes simpler.

Beneficial to small businesses

By changing one or two input variables, such as price or advertising spend, a business can estimate the impact on sales. Because linear regression is cost-effective to deploy, it is especially useful for small businesses, which can use it to make short- and long-term sales forecasts. This helps them plan their resources well, map out a growth trajectory, understand the market and its preferences, and learn about supply and demand.
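A minimal what-if sketch, assuming sales depend linearly on two hypothetical variables, advertising spend and unit price. It fits a multiple linear regression by solving the least-squares normal equations (XᵀX)β = Xᵀy with plain Gaussian elimination, then compares predicted sales before and after raising the advertising budget. The data is invented and noise-free so that the recovered coefficients (50, 3 and -2) are easy to check; real sales data would be noisy, and a library such as NumPy or scikit-learn would normally do the fitting.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        acc = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - acc) / m[i][i]
    return x


def fit_multiple_regression(rows, y):
    """Return [intercept, coef_1, coef_2, ...] via the normal equations."""
    X = [[1.0] + list(r) for r in rows]  # leading 1 models the intercept
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)


def predict(coefs, features):
    """Apply the fitted linear model to one row of features."""
    return coefs[0] + sum(c * f for c, f in zip(coefs[1:], features))


# Hypothetical history: (advertising spend, unit price) -> units sold
history = [(10, 5), (20, 5), (10, 8), (30, 6), (25, 9)]
units_sold = [70, 100, 64, 128, 107]

coefs = fit_multiple_regression(history, units_sold)

# What-if: raise advertising from 20 to 25 while keeping the price at 6
baseline = predict(coefs, (20, 6))
scenario = predict(coefs, (25, 6))
print(round(scenario - baseline, 2))  # 15.0
```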

Preparing Strategies

Since machine learning enables prediction, one of the biggest advantages of a linear regression model is the ability to prepare a strategy for a given situation well in advance and analyse various outcomes. Meaningful information can be derived from the forecasting regression model, helping companies plan strategically and make executive decisions.

Conclusion

Linear regression is one of the most common machine learning techniques in the world, and it helps businesses prepare for a volatile and dynamic environment. At Imarticus Learning, we have a dedicated data science course for aspiring data scientists and data analysts like you.

Frequently Asked Questions

Why should I go for a data science course?

The field of data science has the potential to enhance our lifestyle and professional endeavours, empowering individuals to make more informed decisions, tackle complex problems, uncover innovative breakthroughs, and confront some of society’s most critical challenges. A career in data science positions you as an active contributor to this transformative journey, where your skills can play a pivotal role in shaping a better future.

What is a data science course in general?

Data science encompasses studying and analysing extensive datasets through contemporary tools and methodologies, aiming to unveil concealed patterns, extract meaningful insights, and facilitate informed business decision-making. Intricate machine learning algorithms are leveraged to construct predictive models within this domain, showcasing the dynamic intersection of data exploration and advanced computational techniques.

What is the salary of a data scientist?

In India, the salary for Data Scientists spans from ₹3.9 Lakhs to ₹27.9 Lakhs, with an average annual income of ₹14.3 Lakhs. These salary estimates are derived from the latest data, considering inputs from 38.9k individuals working in Data Science.

The Ultimate Glossary of Terms About Machine Learning

Machine learning is an application of artificial intelligence that gives computer systems the ability to learn on their own and improve with experience without being explicitly programmed. It focuses on developing computer programs that can access data and use it to learn for themselves.

Some of the most commonly used terms in machine learning are as follows:

- Adam Optimisation

It is an optimisation algorithm used to train deep learning models and is an extension of stochastic gradient descent (SGD). It keeps running averages of both the gradients and their second moments (the squared gradients), and uses them to compute an adaptive learning rate for every parameter.
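A minimal scalar sketch of the Adam update rule, minimising the toy function f(x) = (x - 3)², whose gradient is 2(x - 3). The defaults β₁ = 0.9, β₂ = 0.999 and ε = 10⁻⁸ are the values commonly used for Adam; the learning rate of 0.1 and the number of steps are illustrative choices for this toy problem. Real implementations apply the same update element-wise to every parameter of a model.

```python
import math


def adam_minimize(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Minimise a one-parameter function with Adam, given its gradient function."""
    x = x0
    m = 0.0  # running average of gradients (first moment)
    v = 0.0  # running average of squared gradients (second moment)
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        # Bias correction: m and v start at zero and are biased towards it early on
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        # The step is scaled by 1 / sqrt(v_hat), giving each parameter its own effective learning rate
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


# Toy objective f(x) = (x - 3) ** 2 with gradient 2 * (x - 3); its minimum is at x = 3
x_min = adam_minimize(lambda x: 2 * (x - 3), x0=0.0)
print(x_min)
```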

- Boosting

It is a sequential ensemble technique in which each subsequent model tries to correct the errors made by the earlier models, so each model depends on the one before it.
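The sequential error-correcting process described above is the idea behind boosting. A minimal sketch of gradient boosting for regression with squared error, assuming one-split decision trees ("stumps") as the weak learners: each round fits a stump to the residuals left by the models so far, and adds its prediction scaled by a shrinkage factor (`learning_rate`). The function names, data and hyperparameters are illustrative; libraries such as scikit-learn provide tuned implementations.

```python
def fit_stump(xs, residuals):
    """Find the single threshold split that best fits the residuals (least squares)."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # splitting at the max would leave the right side empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, t, lv, rv = best
    return lambda x: lv if x <= t else rv


def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.3):
    """Build an ensemble of stumps, each trained on the errors of the ones before it."""
    base = sum(ys) / len(ys)  # start from the mean prediction
    stumps = []

    def predict(x):
        return base + learning_rate * sum(stump(x) for stump in stumps)

    for _ in range(n_rounds):
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
    return predict


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1, 1, 2, 2, 5, 5, 6, 6]
model = gradient_boost(xs, ys)

mean_y = sum(ys) / len(ys)
baseline_mse = sum((y - mean_y) ** 2 for y in ys) / len(ys)
boosted_mse = sum((y - model(x)) ** 2 for x, y in zip(xs, ys)) / len(ys)
print(baseline_mse, round(boosted_mse, 4))
```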

- Clustering

It is a form of unsupervised learning used to discover inherent groupings within a set of data, for instance grouping consumers by their buying behavior, which can then be used to segment customers. It provides useful information that companies can exploit to generate more revenue and profit.
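k-means is one widely used clustering algorithm (the entry above does not name a specific one). A minimal sketch, assuming two hypothetical customer features (annual spend and number of store visits) and k = 2 clusters: each point is assigned to its nearest centroid, and each centroid then moves to the mean of its points, until nothing changes. The naive initialisation from the first k points is a simplification; in practice k-means++ initialisation and feature scaling are usual, since here annual spend dominates the distance.

```python
import math


def k_means(points, k, max_iterations=100):
    """Plain k-means: assign points to the nearest centroid, then move centroids to cluster means."""
    centroids = [tuple(p) for p in points[:k]]  # naive initialisation from the first k points

    def nearest(p):
        return min(range(k), key=lambda i: math.dist(p, centroids[i]))

    for _ in range(max_iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p)].append(p)
        new_centroids = [
            tuple(sum(coord) / len(members) for coord in zip(*members)) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # assignments have stabilised
        centroids = new_centroids
    return centroids, [nearest(p) for p in points]


# Hypothetical customers: (annual spend, store visits per year)
customers = [(200, 2), (220, 3), (1500, 20), (1600, 22), (250, 1), (1550, 18)]
centroids, labels = k_means(customers, k=2)
print(labels)  # [0, 0, 1, 1, 0, 1]
```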

- Dashboard

It is an informative tool that aids the visual tracking and analysis of data by displaying key indicators, metrics and data points on a single screen in an organized manner. Dashboards are often customizable and can be altered based on the preference of the user or the requirements of a project.

- Deep Learning

It is a form of machine learning that uses artificial neural networks, loosely inspired by the human brain, to model arbitrary functions. It typically requires a large volume of data, and the flexibility of these models allows a single network to produce several outputs at the same time.
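Neural networks are built from layers of simple units whose combined output can represent functions that a single linear model cannot. A minimal sketch of a tiny network with hand-picked weights (in practice the weights are learned from data): a hidden layer of two ReLU units computes XOR, a function that no single straight-line boundary can separate.

```python
def relu(z):
    """Rectified linear unit: pass positive values through, clip negatives to zero."""
    return max(0.0, z)


def xor_network(x1, x2):
    """Two hidden ReLU units followed by a linear output unit (weights chosen by hand)."""
    h1 = relu(1.0 * x1 + 1.0 * x2)        # fires for (1, 0), (0, 1) and (1, 1)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)  # fires only for (1, 1)
    return 1.0 * h1 - 2.0 * h2            # cancels the (1, 1) case


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, xor_network(a, b))
```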

- Early Stopping

It is a technique for avoiding overfitting when training an ML model with an iterative method. Training is halted once the model's performance on a validation set stops improving.
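A minimal sketch of the stopping rule, assuming a "patience" setting: training stops after `patience` consecutive epochs with no improvement in validation loss, and the best epoch is remembered so its weights can be restored. In a real training loop each loss would come from evaluating the model after an epoch; here a hypothetical list of losses stands in for that.

```python
def early_stopping(val_losses, patience=2, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of per-epoch validation losses."""
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # Improvement: remember this epoch (a real loop would checkpoint the model here)
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch  # stop training early
    return best_epoch, len(val_losses) - 1  # ran out of epochs without triggering


# Hypothetical validation losses, one per epoch: improvement stalls after epoch 2
losses = [1.0, 0.8, 0.7, 0.72, 0.75, 0.71, 0.9]
print(early_stopping(losses, patience=2))  # (2, 4)
```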

- Goodness of Fit

It describes how well a model fits a set of observations. Measures of goodness of fit summarise the discrepancies between the observed values and the values expected under the model.

In machine learning, a model is a good fit when its errors are low on the training data as well as on the test data. Over time, the learning algorithm uses these errors to correct the model.
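R² (the coefficient of determination) is one common goodness-of-fit measure for regression: it compares the squared discrepancies between observed and predicted values with the total variation in the observations, so 1 means a perfect fit. A minimal sketch with made-up numbers; other measures, such as the chi-squared statistic, are used in other settings.

```python
def r_squared(observed, predicted):
    """Coefficient of determination: 1 - (residual sum of squares / total sum of squares)."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1 - ss_res / ss_tot


observed = [3, 5, 7, 9]
predicted = [2.8, 5.1, 7.2, 8.9]
print(round(r_squared(observed, predicted), 3))  # 0.995
```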

- Iteration

It is the number of times an algorithm's parameters are updated while training a model on a dataset.
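In mini-batch training, a common setup is one parameter update per batch, so the number of iterations follows from the dataset size, the batch size and the number of epochs (full passes over the data). A minimal sketch under that assumption; it also assumes the final, smaller batch of each epoch is kept rather than dropped.

```python
import math


def count_iterations(n_samples, batch_size, epochs):
    """Total parameter updates when each mini-batch triggers exactly one update."""
    batches_per_epoch = math.ceil(n_samples / batch_size)  # the last batch may be smaller
    return batches_per_epoch * epochs


print(count_iterations(1000, 100, 5))  # 50
```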

- Market Basket Analysis

It is a popular technique used by marketers to identify the combinations of products and services that consumers frequently purchase together. It is also known as product association analysis.
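A minimal sketch of the counting behind product association analysis: for every pair of items bought together, it computes support (the share of all baskets containing the pair) and confidence (how often buyers of one item also bought the other). The baskets are made up; full algorithms such as Apriori extend the same idea to larger item sets.

```python
from collections import Counter
from itertools import combinations


def pair_statistics(transactions):
    """Count single items and item pairs across baskets."""
    item_counts = Counter()
    pair_counts = Counter()
    for basket in transactions:
        items = sorted(set(basket))  # dedupe and sort so each pair has one canonical order
        item_counts.update(items)
        pair_counts.update(combinations(items, 2))
    return item_counts, pair_counts


def support(pair_counts, a, b, n_transactions):
    """Share of all baskets that contain both a and b."""
    return pair_counts[tuple(sorted((a, b)))] / n_transactions


def confidence(item_counts, pair_counts, antecedent, consequent):
    """Share of baskets containing `antecedent` that also contain `consequent`."""
    return pair_counts[tuple(sorted((antecedent, consequent)))] / item_counts[antecedent]


baskets = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"bread", "jam"},
    {"milk", "butter"},
]
items, pairs = pair_statistics(baskets)
print(support(pairs, "bread", "butter", len(baskets)))        # 0.5
print(round(confidence(items, pairs, "bread", "butter"), 3))  # 0.667
```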

- MIS

Also known as a Management Information System, it is a computerized system comprising software and hardware that serves as the heart of a corporation's operations. It compiles data from various online and integrated systems, analyses the gathered data, and generates reports that enable management to make informed business decisions.

- One Shot Learning

This form of machine learning trains a model to recognise a class from a single example (or very few examples). It is used in tasks such as product classification.
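A minimal sketch of the idea, assuming each item has already been turned into a numeric feature vector (real one-shot systems usually learn such embeddings with a neural network, for example a Siamese network). With one stored example per class, a new item receives the label of the nearest stored example. The class names and vectors are hypothetical.

```python
import math


def one_shot_classify(query, support_set):
    """Label `query` with the class whose single stored example is closest (Euclidean distance)."""
    return min(support_set, key=lambda label: math.dist(query, support_set[label]))


# One hypothetical feature vector per product class
support_set = {
    "mug": (0.9, 0.1, 0.2),
    "shoe": (0.1, 0.8, 0.3),
    "lamp": (0.2, 0.2, 0.9),
}
print(one_shot_classify((0.85, 0.15, 0.25), support_set))  # mug
```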

- Pattern Recognition

It is a form of machine learning that focuses on recognizing regularities and patterns in data. Everyday applications of pattern recognition include face detection, facial recognition, optical character recognition, object detection and object classification.

- Range

It is the difference between the lowest and the highest value in a data set.