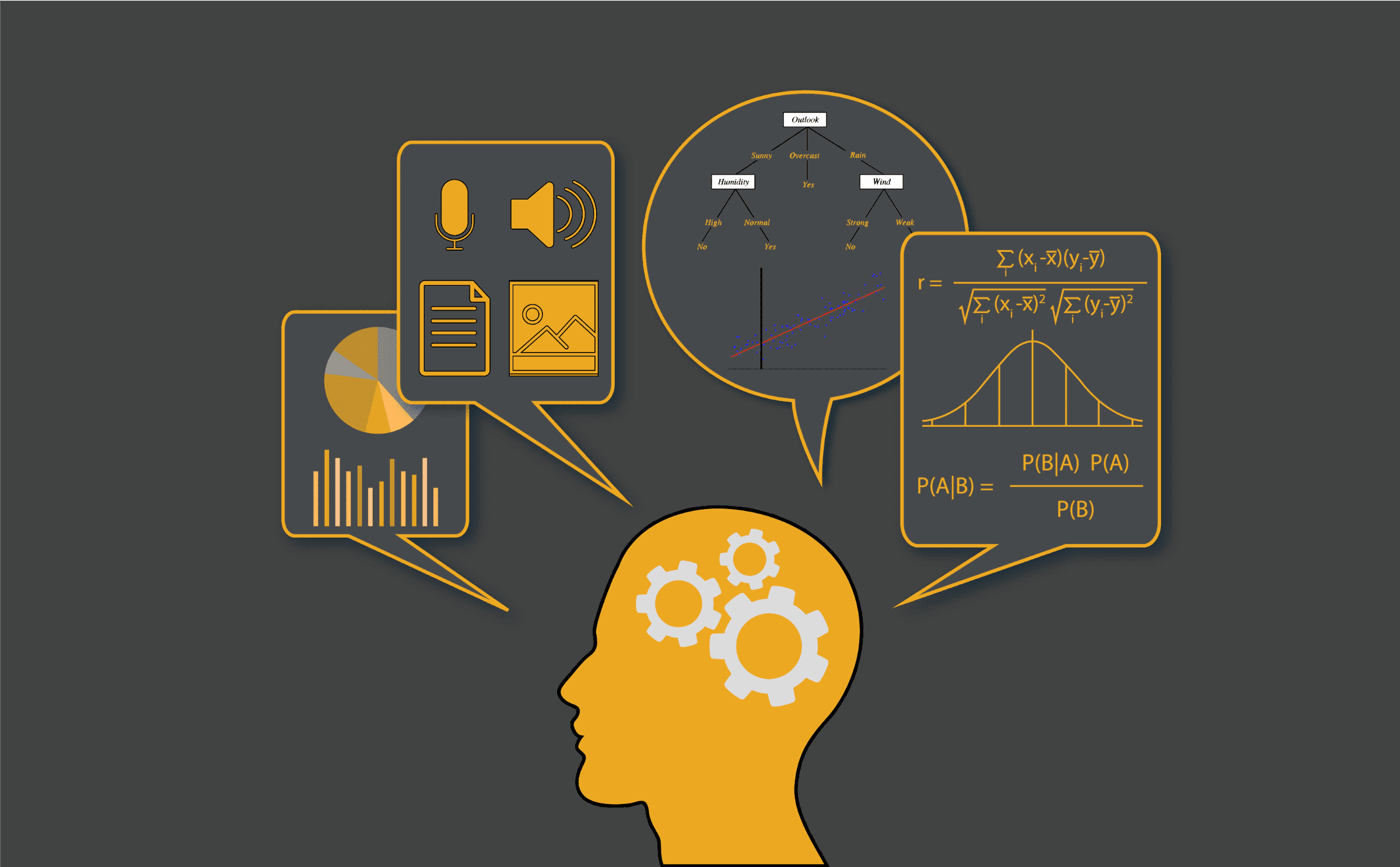

Over the last decade, Machine Learning, deep learning and neural networks have moved to the center of AI, giving machines the computational capacity to perform tasks that mimic human intelligence.

The future scope of Machine Learning appears bright: ML-enabled AI has become an integral part of evolving technologies across industries, from production, robotics and laser applications to self-driving cars and the smart mobile devices that are now part of our lives. It therefore makes sense to learn Machine Learning and build a well-paying career in the field. Research since the early 1950s has made these developments possible, and continued investment in AI research has made it one of the most promising technologies of the future.

Why study AI:

AI has become a reality in our lives in many different ways. Smartphones and voice assistants such as Siri, Google Assistant and Alexa, video games, Google searches, self-driving cars, smart traffic lights, automatic parking, robotic production arms, medical imaging such as CT (CAT) and MRI scans, Gmail and many more are all AI-enabled, data-driven applications that one sees across industries and that make our lives more comfortable. Related fields such as self-learning systems, ML algorithm design, cloud data storage, neural networks and predictive analytics all feed into one another. Let us look at how one can acquire AI skills.

Getting started with learning AI and ML:

For anyone starting out, the web offers DIY tutorials, resources for beginners and free courses. However, such "learn machine learning" modules only go so far, because most of these skills take hours of practice to become fluent in. So the best route appears to be a paid classroom Machine Learning Course.

Here is a simple roadmap for studying ML and AI.

1. Select a research topic that interests you:

Skim the online tutorials on the topic and apply what you learn to small problems as you practice. If you get stuck, post your questions in the Kaggle community forums and keep learning from the community. Above all, stay motivated, focused and dedicated while learning.

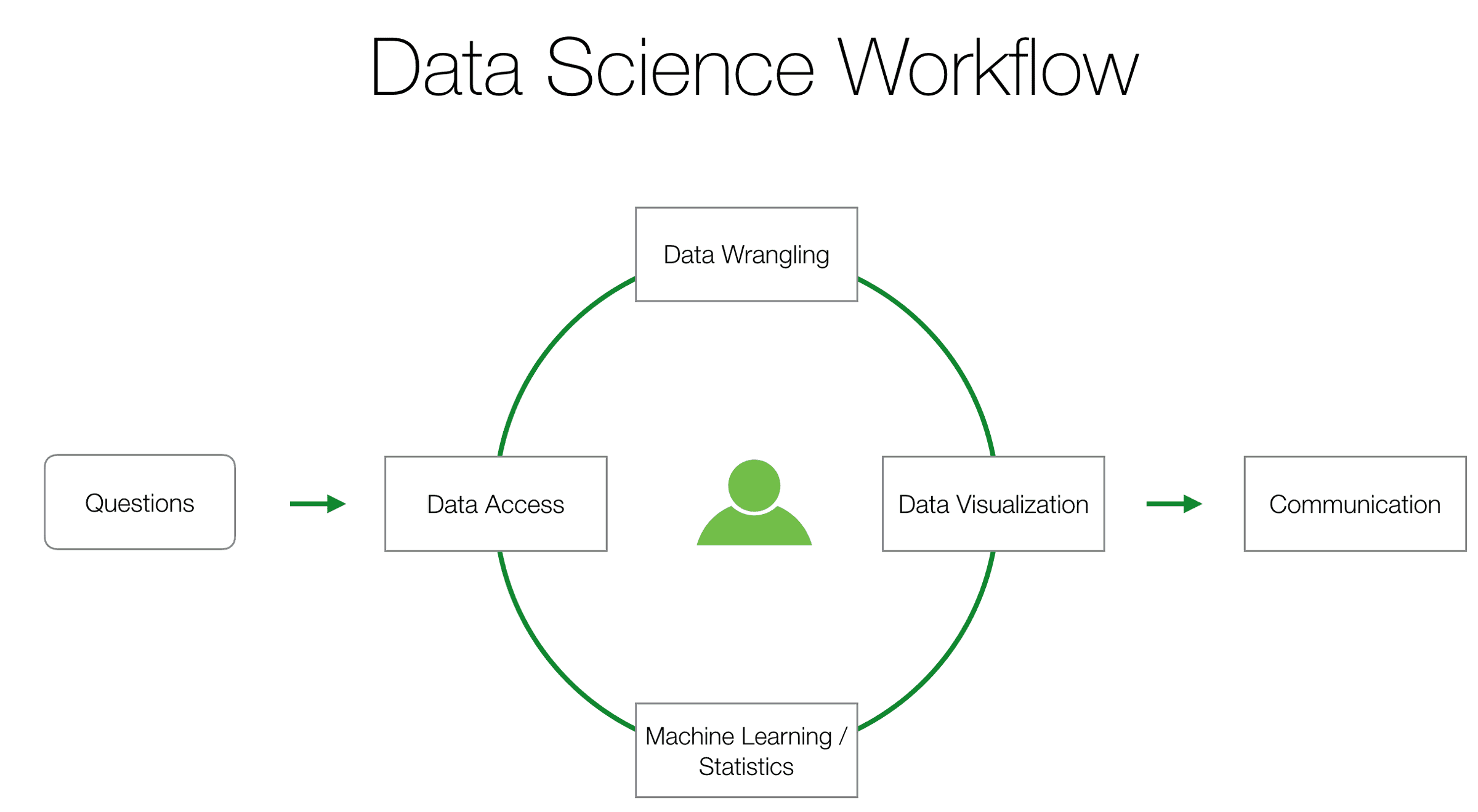

2. Look for similar algorithm solutions:

The aim is to reach a working solution quickly, and starting from an algorithm that has already solved a similar problem helps. You will then need to prepare the data so that the selected ML algorithm can be trained on it, train the model, check the outcomes, and tune, retest and retrain it as required, evaluating its performance each time. Finally, test the solution thoroughly to confirm that its results are accurate and reliable.
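As a rough illustration of the train, evaluate and retest loop described above, here is a minimal pure-Python sketch. It assumes a hypothetical toy dataset of two 2-D clusters and uses a simple nearest-centroid classifier as a stand-in for whichever algorithm you select; the helper names (`split_rows`, `fit_centroids`, `predict`, `accuracy`) are illustrative and not taken from any library.

```python
import random
from statistics import mean

def split_rows(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the labelled rows and split it into training and test sets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]

def fit_centroids(train_rows):
    """Train a nearest-centroid model: one mean feature vector per label."""
    by_label = {}
    for features, label in train_rows:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*vectors))
        for label, vectors in by_label.items()
    }

def predict(model, features):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    return min(
        model,
        key=lambda label: sum((x - c) ** 2 for x, c in zip(features, model[label])),
    )

def accuracy(model, rows):
    """Fraction of rows whose predicted label matches the true label."""
    correct = sum(predict(model, features) == label for features, label in rows)
    return correct / len(rows)

# Hypothetical toy data: two well-separated 2-D clusters labelled "a" and "b".
rng = random.Random(42)
data = [((rng.gauss(0, 1), rng.gauss(0, 1)), "a") for _ in range(50)]
data += [((rng.gauss(4, 1), rng.gauss(4, 1)), "b") for _ in range(50)]

# Train once, then evaluate on held-out rows the model has not seen.
train, test = split_rows(data, seed=0)
model = fit_centroids(train)
print(f"held-out accuracy: {accuracy(model, test):.2f}")

# Retest: retrain and re-evaluate on several different splits to check
# that the score is stable rather than the result of one lucky split.
scores = []
for seed in range(5):
    tr, te = split_rows(data, seed=seed)
    scores.append(accuracy(fit_centroids(tr), te))
print("accuracy per split:", [round(s, 2) for s in scores])
```

Scoring on data the model never saw during training is what makes the number meaningful; repeating the split with several seeds is a simple stand-in for more formal techniques such as cross-validation. In practice a library such as scikit-learn would usually handle these steps.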

3. Use all resources to better the solution:

Use every technique available, such as data cleaning, simple algorithms, sound testing practices and creative data analysis, to improve your solution. Careful data cleaning and formatting often improve results more than switching to a complex deep-learning algorithm. The idea is to keep things simple and maximize the return on the effort you invest.
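To make the data-cleaning point concrete, here is a minimal pure-Python sketch that trims and normalizes inconsistent text, converts numeric strings, drops duplicate records and fills missing numbers with the column median. The records, field names and the `clean` helper are hypothetical, and median imputation is only one common choice among several.

```python
from statistics import median

NUMERIC_FIELDS = ("age", "income")

def to_number(text):
    """Parse a numeric string; blanks, None and placeholders like 'n/a' become None."""
    try:
        return float(text)
    except (TypeError, ValueError):
        return None

def clean(rows):
    """Normalize fields, drop exact duplicates, then fill gaps with the column median."""
    cleaned, seen = [], set()
    for row in rows:
        record = {
            "age": to_number(row.get("age")),
            # Trim stray spaces and unify capitalization so " mumbai" and "Mumbai" match.
            "city": (row.get("city") or "").strip().title(),
            "income": to_number(row.get("income")),
        }
        key = tuple(record.values())
        if key in seen:
            continue  # skip a record identical to one already kept
        seen.add(key)
        cleaned.append(record)

    for field in NUMERIC_FIELDS:
        observed = [r[field] for r in cleaned if r[field] is not None]
        if not observed:
            continue  # nothing to impute from; leave the gaps as None
        fill = median(observed)
        for r in cleaned:
            if r[field] is None:
                r[field] = fill
    return cleaned

# Hypothetical raw records with typical problems: stray spaces, mixed case,
# a blank value, an 'n/a' placeholder and an exact duplicate.
raw_rows = [
    {"age": "34", "city": " Mumbai ", "income": "52000"},
    {"age": "", "city": "mumbai", "income": "48000"},
    {"age": "29", "city": "Pune", "income": "n/a"},
    {"age": "34", "city": " Mumbai ", "income": "52000"},
    {"age": "41", "city": "PUNE", "income": "61000"},
]

for record in clean(raw_rows):
    print(record)
```

This prints four records: the duplicate row is dropped, the missing age becomes 34.0 and the missing income becomes 52000.0, the medians of the observed values. Steps like these are cheap to run and often matter more than the choice of model.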

4. Share and tweak your unique solution:

Sharing your solution with a community brings real-time feedback and testing that can help you improve it further, along with advice on what is wrong and mentorship on how to get it right.

5. Continue the process with different issues and solutions:

Treat every problem you encounter as an opportunity for a new solution. Keep adding these small solutions to your portfolio and sharing them on Kaggle. Learning to break desired outcomes and abstract concepts down into small, well-defined problems is what will help you get ahead in finding ML solutions in AI.

6. Participate in hackathons and Kaggle events:

These events are less about winning than about testing your problem-solving skills with different cross-functional approaches, and they also sharpen your teamwork. Use them to practice collaborating, communicating and contributing.

7. Practice and make use of ML in your profession:

Identify your career goals and take every opportunity to enroll in classroom sessions, webinars, internships and community learning.

Concluding notes:

AI combines many topics, and research opportunities abound once you learn to apply your knowledge professionally. Machine Learning underlies much of AI, and new adaptations of it will continue to emerge. With growing volumes of data and ongoing technological change, AI offers tremendous scope for development and employment in both research and applied roles for millions of career aspirants.

Take a machine learning training course at Imarticus Learning to improve your practical ML skills, strengthen your resume and portfolio, and build a well-paid career with assured placements. Why wait?