Last updated on June 28th, 2024 at 07:14 am

Exploratory data analysis (EDA) is an essential component of today’s data-driven decision-making. Data analysis involves handling and analysing data to find important trends and insights that might boost corporate success.

With the growing importance of data in today’s world, mastering these techniques through a data analytics course or a data scientist course can lead to exciting career opportunities and the ability to make data-driven decisions that positively impact businesses.

Whether you’re a seasoned data expert or just starting your journey, learning EDA can empower you to extract meaningful information from data and drive better outcomes for organisations.

Role of Data Analysis in Data Science and Business Decision Making

Effective business decision-making requires careful consideration of various factors, and data-driven decision-making is a powerful approach that relies on past data insights. Using data from business operations enables accurate and informed choices, improving company performance.

Data lies at the core of business operations, providing valuable insights to drive growth and address financial, sales, marketing, and customer service challenges. To harness its full potential, understanding critical data metrics is essential for measuring and using data effectively in shaping future strategies.

Businesses can achieve success more quickly and reach new heights by implementing data-driven decision-making.

Understanding Exploratory Data Analysis (EDA)

EDA is a vital tool for data scientists. It involves analysing and visualising datasets to identify patterns, anomalies, and relationships among variables. EDA helps understand data characteristics, detect errors, and validate assumptions.

EDA is a fundamental skill for those pursuing a career in data science. Through comprehensive data science training, individuals learn to use EDA effectively, ensuring accurate analyses and supporting decision-making.

EDA’s insights are invaluable for addressing business objectives and guiding stakeholders to ask relevant questions. It provides answers about standard deviations, categorical variables, and confidence intervals.

After completing EDA, data scientists can apply their findings to advanced analyses, including machine learning. EDA lays the foundation for data science training and impactful data-driven solutions.

Exploring Data Distribution and Summary Statistics

In data analytics courses, you’ll learn about data distribution analysis, which involves examining the distribution of individual variables in a dataset. Techniques like histograms, kernel density estimation (KDE), and probability density plots help visualise data shape and value frequencies.

Additionally, summary statistics such as mean, median, standard deviation, quartiles, and percentiles offer a quick snapshot of central tendencies and data spread.

Data Visualisation Techniques

Data visualisation techniques involve diverse graphical methods for presenting and analysing data. Common types include scatter plots, bar charts, line charts, box plots, heat maps, and pair plots.

These visualisations aid researchers and analysts in gaining insights and patterns, improving decision-making and understanding complex datasets.

Identifying Data Patterns and Relationships

Correlation analysis: Correlation analysis helps identify the degree of association between two continuous variables. It is often represented using correlation matrices or heatmaps.

Cluster analysis: Cluster analysis groups similar data points into clusters based on their features. It helps identify inherent patterns or structures in the data.

Time series analysis: Time series analysis is employed when dealing with data collected over time. It helps detect trends, seasonality, and other temporal patterns.

Handling Missing Data and Outliers

Handling missing data and outliers is a crucial step in data analysis. Missing values can be addressed through imputation, deletion, or more advanced methods such as expectation-maximisation (EM).

At the same time, outliers must be identified and treated separately to ensure unbiased analysis and accurate conclusions.
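One common way to flag outliers is the interquartile range (IQR) rule, which is the same rule box plots typically use for their whiskers. The sketch below is a minimal illustration using only the Python standard library; the function name `iqr_outliers`, the multiplier `k=1.5`, and the `orders` sample are assumptions made for this example, not part of any particular toolkit.

```python
import statistics

def iqr_outliers(values, k=1.5):
    """Return the values lying outside [Q1 - k*IQR, Q3 + k*IQR]."""
    # quantiles(..., n=4) returns the three quartile cut points Q1, Q2, Q3.
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]

# Hypothetical sample: daily order counts with one suspicious spike.
orders = [12, 14, 13, 15, 14, 13, 16, 15, 14, 95]
print(iqr_outliers(orders))
```

Whether a flagged value is an error or a genuine extreme is a judgment call that depends on the domain, so the rule is best treated as a prompt for investigation rather than an automatic filter.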

Data Preprocessing for EDA

Data Preprocessing is crucial before performing EDA or building machine learning models. It involves preparing the data in a suitable format to ensure accurate and reliable analysis.

Data Cleaning and Data Transformation

In data cleaning and transformation, missing data, duplicate records, and inconsistencies are addressed by removing or imputing missing values, eliminating duplicates, and correcting errors.

Data transformation involves normalising numerical variables, encoding categorical variables, and applying mathematical changes to deal with skewed data distributions.

Data Imputation Techniques

Data imputation techniques involve filling in missing values using mean, median, or mode imputation, regression imputation, K-nearest neighbours (KNN) imputation, and multiple imputations, which helps to address the issue of missing data in the dataset.
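The simplest of these techniques (mean, median, and mode imputation) can be sketched in a few lines. This example uses only the standard library and assumes missing values are represented as `None`; the `impute` helper and the sample lists are hypothetical. Regression, KNN, and multiple imputation need more machinery and are not shown.

```python
import statistics

def impute(values, strategy="mean"):
    """Replace None entries with the mean, median, or mode of the observed values."""
    observed = [v for v in values if v is not None]
    estimators = {
        "mean": statistics.mean,
        "median": statistics.median,
        "mode": statistics.mode,
    }
    fill = estimators[strategy](observed)
    return [fill if v is None else v for v in values]

# Hypothetical columns with gaps.
ages = [25, None, 31, 40, None, 24]
colours = ["red", None, "blue", "red"]

print(impute(ages, "mean"))
print(impute(ages, "median"))
print(impute(colours, "mode"))
```

Mode imputation is the only one of the three that applies to categorical columns such as `colours`.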

Handling Categorical Data

In data science training, categorical data, representing non-numeric variables with discrete values like gender, colour, or country, undergoes conversion to numerical format for EDA or machine learning.

Techniques include label encoding (assigning unique numerical labels to categories) and one-hot encoding (creating binary columns indicating the presence or absence of categories).
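To make the difference between the two encodings concrete, here is a small sketch in plain Python. The helper names `label_encode` and `one_hot_encode` are made up for this example, and assigning labels in sorted order is an arbitrary choice.

```python
def label_encode(values):
    """Map each distinct category to an integer, assigned in sorted order."""
    mapping = {cat: idx for idx, cat in enumerate(sorted(set(values)))}
    return [mapping[v] for v in values], mapping

def one_hot_encode(values):
    """Return the category order and one row of 0/1 flags per value."""
    categories = sorted(set(values))
    rows = [[1 if v == cat else 0 for cat in categories] for v in values]
    return categories, rows

colours = ["red", "green", "blue", "green"]

codes, mapping = label_encode(colours)
categories, rows = one_hot_encode(colours)

print(mapping)     # category -> integer label
print(codes)       # one integer per input value
print(categories)  # column order of the one-hot rows
print(rows)        # one binary row per input value
```

Label encoding implies an ordering (here blue < green < red) that may not be meaningful, which is one reason one-hot encoding is often preferred for unordered categories.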

Feature Scaling and Normalisation

In data preprocessing, feature scaling involves:

- Scaling numerical features to a similar range.

- Preventing any one feature from dominating the analysis or model training.

- Using techniques like Min-Max scaling and Z-score normalisation.

Z-score normalisation (also called standardisation), in particular, rescales data to have a mean of 0 and a standard deviation of 1, which is particularly useful for algorithms relying on distance calculations like k-means clustering or gradient-based optimisation algorithms.
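Both scaling techniques are short formulas, sketched here with the standard library. The function names and the `incomes` sample are illustrative assumptions, and the code assumes the input is not constant (otherwise the denominators would be zero).

```python
import statistics

def min_max_scale(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def z_score(values):
    """Subtract the mean and divide by the (population) standard deviation."""
    mu = statistics.mean(values)
    sigma = statistics.pstdev(values)
    return [(v - mu) / sigma for v in values]

# Hypothetical feature: incomes in thousands.
incomes = [20, 30, 40, 50, 60]

scaled = min_max_scale(incomes)
standardised = z_score(incomes)

print(scaled)
print(standardised)
```

After Z-score scaling, the values have a mean of 0 and a standard deviation of 1, whereas Min-Max scaling only guarantees the range [0, 1].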

Data Visualisation for EDA

Univariate and Multivariate Visualisation

Univariate analysis involves examining individual variables in isolation, dealing with one variable at a time. It aims to describe the data and identify patterns but does not explore causal relationships.

In contrast, multivariate analysis examines several variables together (typically three or more), considering the interactions and associations between them to understand how they jointly contribute to patterns and trends. This offers a more comprehensive understanding of the data.

Histograms and Box Plots

Histograms visually summarise the distribution of a univariate dataset by representing central tendency, dispersion, skewness, outliers, and multiple modes. They offer valuable insights into the data’s underlying distribution and can be validated using probability plots or goodness-of-fit tests.

Box plots are potent tools in EDA for presenting location and variation information and detecting differences in location and spread between data groups. They efficiently summarise large datasets, making complex data more accessible for interpretation and comparison.

Scatter Plots and Correlation Heatmaps

Scatter plots show relationships between two variables, while correlation heatmaps display the correlation matrix of multiple variables in a dataset, offering insights into their associations. Both are crucial for EDA.

Pair Plots and Parallel Coordinates

Pair plots provide a comprehensive view of each variable's distribution and of the pairwise relationships between variables, aiding trend detection for further investigation.

Parallel coordinate plots are ideal for analysing datasets with multiple numerical variables. They compare samples or observations across these variables by representing each feature on individual equally spaced and parallel axes.

This method efficiently highlights relationships and patterns within multivariate numerical datasets.

Interactive Visualisations (e.g., Plotly, Bokeh)

Plotly, leveraging JavaScript in the background, excels in creating interactive plots with zooming, hover-based data display, and more. Additional advantages include:

- Its hover tool capabilities for detecting outliers in large datasets.

- Visually appealing plots for broad audience appeal.

- Endless customisation options for meaningful visualisations.

On the other hand, Bokeh, a Python library, focuses on human-readable and fast visual presentations within web browsers. It offers web-based interactivity, empowering users to dynamically explore and analyse data in web environments.

Descriptive Statistics for EDA

Descriptive statistics are essential tools in EDA as they concisely summarise the dataset’s characteristics.

Measures of Central Tendency (Mean, Median, Mode)

- Mean, representing the arithmetic average, is the central value around which data points cluster in the dataset.

- Median, the middle value in ascending or descending order, is less influenced by extreme values than the mean.

- Mode, the most frequently occurring value, can be unimodal (one mode) or multimodal (multiple modes) in a dataset.
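The three measures above can be computed directly with Python's built-in `statistics` module. The `salaries` list is a made-up sample with one extreme value, chosen to show how the mean and median react differently.

```python
import statistics

# Hypothetical salaries (in thousands) with one extreme value.
salaries = [30, 32, 35, 35, 38, 40, 400]

mean_salary = statistics.mean(salaries)      # pulled upwards by the 400
median_salary = statistics.median(salaries)  # middle value of the sorted data
mode_salary = statistics.mode(salaries)      # most frequent value

print(mean_salary, median_salary, mode_salary)

# multimode returns every value tied for most frequent.
print(statistics.multimode([1, 1, 2, 2, 3]))
```

Here the single extreme salary drags the mean well above the median, which is why the median is often the safer summary for skewed data.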

Measures of Variability (Variance, Standard Deviation, Range)

Measures of Variability include:

- Variance: It quantifies the spread or dispersion of data points from the mean.

- Standard Deviation: The square root of variance provides a more interpretable measure of data spread.

- Range: It calculates the difference between the maximum and minimum values, representing the data’s spread.
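These measures are equally quick to compute. The sketch below uses the standard library on a small invented sample, and shows both the population and the sample versions of the variance, which differ in dividing by n or by n - 1.

```python
import statistics

data = [2, 4, 4, 4, 5, 5, 7, 9]  # illustrative sample, mean = 5

population_variance = statistics.pvariance(data)  # divides by n
sample_variance = statistics.variance(data)       # divides by n - 1
population_sd = statistics.pstdev(data)           # square root of pvariance
data_range = max(data) - min(data)

print(population_variance, sample_variance, population_sd, data_range)
```

The standard deviation is in the same units as the data, which is what makes it easier to interpret than the variance.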

Skewness and Kurtosis

Skewness measures the asymmetry of a data distribution: positive skewness indicates a longer right tail, and negative skewness a longer left tail.

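Kurtosis describes how heavy the tails of a distribution are relative to a normal distribution, and is often loosely described as peakedness: high kurtosis indicates heavier tails and more extreme values, while low kurtosis indicates lighter tails.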
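Both quantities can be computed from standardised values (z-scores). The sketch below uses the simple moment-based definitions, with population standard deviation and no small-sample correction; statistical packages often apply bias corrections, so their results may differ slightly. The function names are assumptions for this example.

```python
import statistics

def skewness(values):
    """Moment-based skewness: the average cubed z-score."""
    mu, sigma = statistics.mean(values), statistics.pstdev(values)
    return sum(((v - mu) / sigma) ** 3 for v in values) / len(values)

def excess_kurtosis(values):
    """Average fourth-power z-score minus 3, so a normal distribution scores 0."""
    mu, sigma = statistics.mean(values), statistics.pstdev(values)
    return sum(((v - mu) / sigma) ** 4 for v in values) / len(values) - 3

symmetric = [1, 2, 3, 4, 5]
right_skewed = [1, 1, 2, 2, 3, 10]

print(skewness(symmetric), skewness(right_skewed))
print(excess_kurtosis(symmetric))
```

The symmetric sample has skewness of zero, while the sample with a long right tail has positive skewness.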

Quantiles and Percentiles

Quantiles and percentiles describe positions within ordered data:

- Quantiles are cut points that split ordered data into equal-sized groups; quartiles (Q1, Q2, which is the median, and Q3) split the data into four equal parts.

- Percentiles, like the 25th percentile (P25), indicate the value below which a given percentage of the data falls.
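As a quick illustration, `statistics.quantiles` from the standard library returns the cut points for any number of groups. The `scores` data is invented, and `method="inclusive"` is one of several interpolation conventions, so other tools may give slightly different values.

```python
import statistics

scores = list(range(1, 101))  # illustrative data: the integers 1 to 100

# n=4 gives the three quartile cut points.
q1, q2, q3 = statistics.quantiles(scores, n=4, method="inclusive")

# n=100 gives the 99 percentile cut points; index 24 is the 25th percentile.
percentiles = statistics.quantiles(scores, n=100, method="inclusive")
p25 = percentiles[24]

print(q1, q2, q3)
print(p25)
```

The second quartile equals the median, and the 25th percentile equals the first quartile, since both name the same cut point.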

Exploring Data Relationships

Correlation Analysis

Correlation Analysis examines the relationship between variables, showing the strength and direction of their linear association using the correlation coefficient “r” (-1 to 1). It helps understand the dependence between variables and is crucial in data exploration and hypothesis testing.
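The coefficient r can be computed from first principles, as sketched below with the standard library. The function name `pearson_r` and the sample data are assumptions for illustration, and the code assumes neither variable is constant.

```python
import math
import statistics

def pearson_r(xs, ys):
    """Pearson correlation: co-deviation divided by the product of the spreads."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    co_deviation = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mx) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - my) ** 2 for y in ys))
    return co_deviation / (spread_x * spread_y)

# Hypothetical data: hours studied and exam scores.
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 70, 72]

print(pearson_r(hours, scores))
```

A value near 1 or -1 indicates a strong linear relationship, but r says nothing about non-linear relationships or about causation.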

Covariance and Scatter Matrix

Covariance gauges the joint variability of two variables. Positive covariance indicates that both variables change in the same direction, while negative covariance suggests an inverse relationship.

The scatter matrix (scatter plot matrix) visually depicts the pairwise relationships between multiple variables by presenting scatter plots between all variable pairs in the dataset, facilitating pattern and relationship identification.
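A minimal sketch of the covariance calculation, using the sample (n - 1) form and invented data, shows how the sign reflects the direction of the relationship. The name `sample_covariance` and the three lists are assumptions for this example.

```python
import statistics

def sample_covariance(xs, ys):
    """Sample covariance: summed co-deviation from the means, divided by n - 1."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)

# Hypothetical variables.
ad_spend = [1, 2, 3, 4]
sales = [2, 4, 6, 8]    # rises with ad_spend
returns = [8, 6, 4, 2]  # falls as ad_spend rises

print(sample_covariance(ad_spend, sales))    # positive
print(sample_covariance(ad_spend, returns))  # negative
```

Unlike the correlation coefficient, covariance depends on the units of the variables, so its magnitude is hard to compare across variable pairs.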

Categorical Data Analysis (Frequency Tables, Cross-Tabulations)

Categorical data analysis explores the distribution and connections between categorical variables. Frequency tables reveal category counts or percentages in each variable.

Cross-tabulations, or contingency tables, display the joint distribution of two categorical variables, enabling the investigation of associations between them.
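Both tables amount to counting, which `collections.Counter` handles directly. The survey-style `records` below are invented for illustration.

```python
from collections import Counter

# Hypothetical records: (region, purchased) pairs.
records = [
    ("north", "yes"), ("north", "no"), ("south", "yes"),
    ("north", "yes"), ("south", "no"), ("south", "no"),
]

# Frequency table for a single categorical variable.
region_counts = Counter(region for region, _ in records)

# Cross-tabulation: joint counts for each (region, purchased) combination.
crosstab = Counter(records)

print(region_counts)
for region in sorted(region_counts):
    print(region, {answer: crosstab[(region, answer)] for answer in ("yes", "no")})
```

Dividing each count by the total (or by the row total) turns these counts into the percentages mentioned above.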

Bivariate and Multivariate Analysis

Data science training covers bivariate analysis, examining the relationship between two variables, which can involve one categorical and one continuous variable or two continuous variables.

Additionally, multivariate analysis extends the exploration to multiple variables simultaneously, utilising methods like principal component analysis (PCA), factor analysis, and cluster analysis to identify patterns and groupings among the variables.

Data Distribution and Probability Distributions

Normal Distribution

The normal distribution is a widely used probability distribution known for its bell-shaped curve, with the mean (μ) and standard deviation (σ) defining its centre and spread. It is prevalent in many fields due to its association with various natural phenomena and random variables, making it essential for statistical tests and modelling techniques.
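To see how μ and σ define the curve, the standard library's `statistics.NormalDist` can be used to compute probabilities. The height figures below (mean 170, standard deviation 10) are invented for illustration.

```python
from statistics import NormalDist

# Hypothetical model: heights with mean 170 cm and standard deviation 10 cm.
heights = NormalDist(mu=170, sigma=10)

# Probability of falling within one and two standard deviations of the mean.
within_one_sigma = heights.cdf(180) - heights.cdf(160)
within_two_sigma = heights.cdf(190) - heights.cdf(150)

print(round(within_one_sigma, 4), round(within_two_sigma, 4))
```

Roughly 68% of values fall within one standard deviation of the mean and roughly 95% within two, whatever the particular values of μ and σ.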

Uniform Distribution

In a uniform distribution, all values in the dataset have an equal probability of occurrence, characterised by a constant probability density function across the entire distribution range.

It is commonly used in scenarios where each outcome has the same likelihood of happening, like rolling a fair die or selecting a random number from a range.

Exponential Distribution

The exponential distribution models the time between events in a Poisson process, with a decreasing probability density function characterised by a rate parameter λ (lambda), commonly used in survival analysis and reliability studies.
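The density is f(x) = λ·exp(-λx) for x ≥ 0, and the mean waiting time is 1/λ. The short simulation below checks the mean empirically; the rate `lam = 0.5`, the seed, and the sample size are arbitrary choices made for this sketch.

```python
import math
import random

def exponential_pdf(x, lam):
    """Density of the exponential distribution with rate lam."""
    return lam * math.exp(-lam * x) if x >= 0 else 0.0

lam = 0.5                # assumed rate: on average one event every 2 time units
rng = random.Random(42)  # fixed seed so the run is repeatable

# Simulate waiting times between events and compare with the theoretical mean.
waits = [rng.expovariate(lam) for _ in range(20_000)]
sample_mean = sum(waits) / len(waits)

print(sample_mean, 1 / lam)
```

The sample mean should land close to 1/λ, and the density is highest at zero and decreases from there, so short waits are the most likely.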

Kernel Density Estimation (KDE)

KDE is a non-parametric technique that estimates the probability density function of a continuous random variable by placing kernels (often Gaussian) at each data point and summing them up to create a smooth estimate, making it useful for unknown or complex data distributions.
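The idea of summing kernels can be sketched in a few lines. The example below uses a Gaussian kernel with a hand-picked bandwidth; the function name, sample, and bandwidth value are assumptions, and in practice the bandwidth is usually chosen by a rule of thumb or by cross-validation.

```python
import math

def gaussian_kde(data, bandwidth):
    """Return a density function built from one Gaussian kernel per data point."""
    norm = len(data) * bandwidth * math.sqrt(2 * math.pi)

    def density(x):
        # Sum the kernel contributions from every observation, then normalise.
        return sum(math.exp(-0.5 * ((x - xi) / bandwidth) ** 2) for xi in data) / norm

    return density

# Hypothetical sample with two clusters, around 1.5 and around 4.25.
sample = [1.0, 1.5, 2.0, 4.0, 4.5]
density = gaussian_kde(sample, bandwidth=0.5)

print(density(1.5), density(3.0))
```

The estimate is higher near the clusters than in the gap between them. A larger bandwidth would smooth the two bumps together, while a smaller one would produce a spikier curve.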

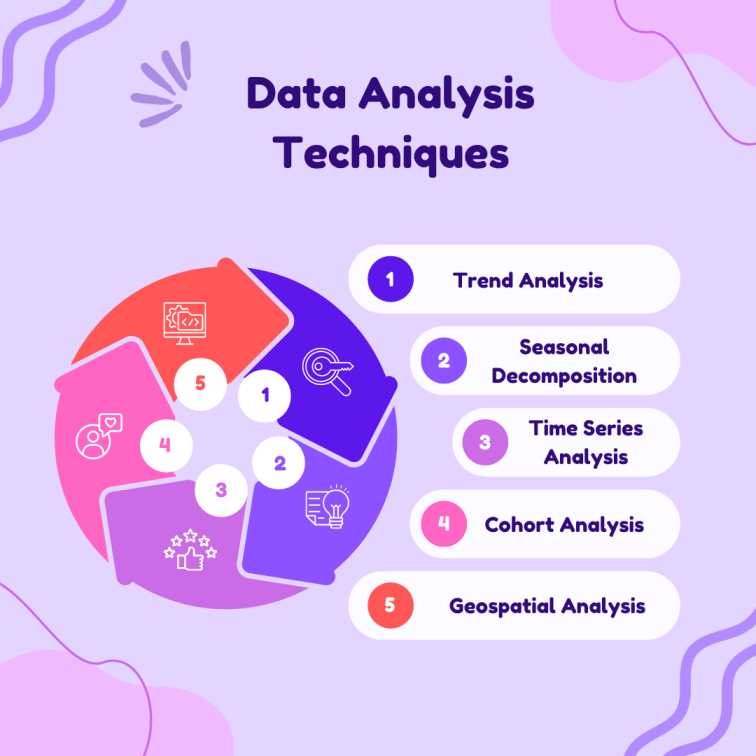

Data Analysis Techniques

Trend Analysis

Trend analysis explores data over time, revealing patterns, tendencies, or changes in a specific direction. It offers insights into long-term growth or decline, aids in predicting future values, and supports strategic decision-making based on historical data patterns.

Seasonal Decomposition

Seasonal decomposition is a method to separate time series into seasonal, trend, and residual components, which helps identify seasonal patterns, isolate fluctuations, and forecast future seasonal behaviour.
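One classical way to do this is additive decomposition with a centred moving average. The sketch below is a simplified version that assumes an even period, an additive model (series = trend + seasonal + residual), and a series covering several full cycles; the function name and the synthetic quarterly series are invented for illustration.

```python
def decompose_additive(series, period):
    """Split a series into trend, seasonal, and residual parts (even period assumed)."""
    n = len(series)
    half = period // 2

    # Trend: centred moving average; the two end points get half weight so
    # every position in the cycle contributes equally.
    trend = [None] * n
    for t in range(half, n - half):
        window = series[t - half : t + half + 1]
        trend[t] = (0.5 * window[0] + sum(window[1:-1]) + 0.5 * window[-1]) / period

    # Seasonal: average detrended value at each position in the cycle,
    # shifted so the seasonal effects sum to zero over one period.
    buckets = [[] for _ in range(period)]
    for t in range(n):
        if trend[t] is not None:
            buckets[t % period].append(series[t] - trend[t])
    raw = [sum(b) / len(b) for b in buckets]
    offset = sum(raw) / period
    seasonal = [raw[t % period] - offset for t in range(n)]

    # Residual: whatever trend and seasonality leave unexplained.
    residual = [
        None if trend[t] is None else series[t] - trend[t] - seasonal[t]
        for t in range(n)
    ]
    return trend, seasonal, residual

# Synthetic quarterly series: linear growth plus a repeating seasonal pattern.
pattern = [3, -1, -2, 0]
series = [10 + t + pattern[t % 4] for t in range(16)]

trend, seasonal, residual = decompose_additive(series, period=4)
print(seasonal[:4])
```

On this noise-free series the method recovers the seasonal pattern and leaves residuals of zero; on real data the residual component is where unusual events show up. The trend is undefined (`None`) for the first and last half-period because the moving-average window does not fit there.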

Time Series Analysis

Time series analysis examines data points over time, revealing variable changes, interdependencies, and valuable insights for decision-making. Time series forecasting predicts future trends, such as seasonal effects on sales (for example, swimwear in summer and umbrellas or raincoats in the monsoon), aiding in production planning and marketing strategies.

If you are interested in mastering time series analysis and its applications in data science and business, enrolling in a data analyst course can equip you with the necessary skills and knowledge to effectively leverage this method and drive data-driven decisions.

Cohort Analysis

Cohort analysis utilises historical data to examine and compare specific user segments, providing valuable insights into consumer needs and broader target groups. In marketing, it helps understand campaign impact on different customer groups, allowing optimisation based on content that drives sign-ups, repurchases, or engagement.

Geospatial Analysis

Geospatial analysis examines data linked to geographic locations, revealing spatial relationships, patterns, and trends. It is valuable in urban planning, environmental science, logistics, marketing, and agriculture, enabling location-specific decisions and resource optimisation.

Interactive EDA Tools

Jupyter Notebooks for Data Exploration

Jupyter Notebooks offer an interactive data exploration and analysis environment, enabling users to create and execute code cells, add explanatory text, and visualise data in a single executable document.

Using this versatile platform, data scientists and analysts can efficiently interact with data, test hypotheses, and share their findings.

Data Visualisation Libraries (e.g., Matplotlib, Seaborn)

Matplotlib and Seaborn are Python libraries offering versatile plotting options, from basic line charts to heatmaps and, in Matplotlib's case, 3D plots. Seaborn builds on Matplotlib, and the output is primarily static; with an interactive Matplotlib backend, users can zoom and pan to explore data points in detail.

Tableau and Power BI for Interactive Dashboards

Tableau and Microsoft Power BI are robust business intelligence tools that facilitate the creation of interactive dashboards and reports, supporting various data connectors for seamless access to diverse data sources and enabling real-time data analysis.

With dynamic filters, drill-down capabilities, and data highlighting, users can explore insightful data using these tools.

Consider enrolling in a business analytics course to improve your proficiency in utilising these powerful tools effectively.

D3.js for Custom Visualisations

D3.js (Data-Driven Documents) is a JavaScript library that allows developers to create highly customisable and interactive data visualisations. Using low-level building blocks enables the design of complex and unique visualisations beyond standard charting libraries.

EDA Best Practices

Defining EDA Objectives and Research Questions

When conducting exploratory data analysis (EDA), it is essential to clearly define your objectives and the research questions you aim to address. Understanding the business problem or context for the analysis is crucial to guide your exploration effectively.

Focus on relevant aspects of the data that align with your objectives and questions to gain meaningful insights.

Effective Data Visualisation Strategies

- Use appropriate and effective data visualisation techniques to explore the data visually.

- Select relevant charts, graphs, and plots based on the data type and the relationships under investigation.

- Prioritise clarity, conciseness, and aesthetics to facilitate straightforward interpretation of visualisations.

Interpreting and Communicating EDA Results

- Acquire an in-depth understanding of data patterns and insights discovered during EDA.

- Effectively communicate findings using non-technical language, catering to technical and non-technical stakeholders.

- Use visualisations, summaries, and storytelling techniques to present EDA results in a compelling and accessible manner.

Collaborative EDA in Team Environments

- Foster a collaborative environment that welcomes team members from diverse backgrounds and expertise to contribute to the EDA process.

- Encourage open discussions and knowledge sharing to gain valuable insights from different perspectives.

- Utilise version control and collaborative platforms to ensure seamless teamwork and efficient data sharing.

Real-World EDA Examples and Case Studies

Exploratory Data Analysis in Various Industries

EDA has proven highly beneficial in diverse industries, such as healthcare, finance, and marketing. In the healthcare sector, EDA is used to analyse patient data to detect disease trends and evaluate treatment outcomes.

For finance, EDA aids in comprehending market trends, assessing risks, and formulating investment strategies.

In marketing, EDA examines customer behaviour, evaluates campaign performance, and performs market segmentation.

Impact of EDA on Business Insights and Decision Making

EDA impacts business insights and decision-making by uncovering patterns, trends, and relationships in data. It validates data, supports hypothesis testing, and enhances visualisation for better understanding and real-time decision-making. EDA enables data-driven strategies and improved performance.

EDA Challenges and Solutions

EDA challenges include:

- Dealing with missing data.

- Handling outliers.

- Processing large datasets.

- Exploring complex relationships.

- Ensuring data quality.

- Avoiding interpretation bias.

- Managing time and resource constraints.

- Choosing appropriate visualisation methods.

- Leveraging domain knowledge for meaningful analysis.

Solutions involve data cleaning, imputation, visualisation techniques, statistical analysis, and iterative exploration.

Conclusion

Exploratory Data Analysis (EDA) is a crucial technique for data scientists and analysts, enabling valuable insights across various industries like healthcare, finance, and marketing. Professionals can uncover patterns, trends, and relationships through EDA, empowering data-driven decision-making and strategic planning.

Imarticus Learning’s Postgraduate Programme in Data Science and Analytics offers the ideal opportunity for those aspiring to excel in data science and analytics.

This comprehensive program covers essential topics, including EDA, machine learning, and advanced data visualisation, while providing hands-on experience with data analytics certification courses. The emphasis on placements ensures outstanding career prospects in the data science field.

Visit Imarticus Learning today to learn more about our top-rated data science course in India, to propel your career and thrive in the data-driven world.